Advances in database systems education: Methods, tools, curricula, and way forward

Muhammad Ishaq, Muhammad Shoaib Farooq, Muhammad Faraz Manzoor, Uzma Farooq, Kamran Abid, Mamoun Abu Helou

Accepted 2022 Aug 16; Issue date 2023.

This article is made available via the PMC Open Access Subset for unrestricted research re-use and secondary analysis in any form or by any means with acknowledgement of the original source. These permissions are granted for the duration of the World Health Organization (WHO) declaration of COVID-19 as a global pandemic.

Fundamentals of Database Systems is a core course in computing disciplines, as almost all small, medium, large, or enterprise systems require a data storage component. Database System Education (DSE) provides the foundation as well as advanced concepts in the area of data modeling and its implementation. The first course in DSE plays a pivotal role in developing students’ interest in this area. Over the years, researchers have devised several tools and methods to teach this course effectively, and have also been revisiting the curricula for database systems education. This study presents a Systematic Literature Review (SLR) that distills the existing literature on DSE to discuss these three perspectives (tools, methods, and curricula) for the first course in database systems. The SLR also discusses how teaching and learning assistant tools, teaching and assessment methods, and database curricula have evolved over the years due to rapid changes in database technology. To this end, more than 65 articles related to DSE published between 1995 and 2022 were shortlisted through a structured mechanism and reviewed to address these objectives. The article also provides useful guidelines for instructors and discusses ideas to extend this research from several perspectives. To the best of our knowledge, this is the first research work that presents a broader review of the research conducted in the area of DSE.

Keywords: Higher education, Database, Education, Database curriculum, Tools, Teaching methods

Introduction

Database systems play a pivotal role in the successful implementation of information systems, ensuring the smooth running of many different organizations and companies (Etemad & Küpçü, 2018; Morien, 2006). Therefore, at least one course on the fundamentals of database systems is taught in every computing and information systems degree (Nagataki et al., 2013). Database System Education (DSE) is concerned with different aspects of data management while developing software (Park et al., 2017). The IEEE/ACM computing curricula guidelines endorse 30–50 dedicated hours for teaching the fundamentals of design and implementation of database systems so as to build a strong theoretical and practical understanding of DSE topics (Cvetanovic et al., 2010).

In practice, most universities offer one user-oriented course at the undergraduate level that covers topics related to data modeling and design, querying, and a limited number of hours on theory (Conklin & Heinrichs, 2005; Robbert & Ricardo, 2003), where it is often debated whether to use a design-first or query-first approach. Furthermore, to keep the course contents up to date, some recent trends, including big data and the notion of NoSQL, should also be introduced in this basic course (Dietrich et al., 2008; Garcia-Molina, 2008). The graduate course, by contrast, is more theoretical and includes topics related to DB architecture, transactions, concurrency, reliability, distribution, parallelism, replication, and query optimization, along with some specialized classes.

Researchers have designed a variety of tools to make the concepts of the introductory database course more interesting and easier to teach and learn interactively (Brusilovsky et al., 2010), either using visual support (Nagataki et al., 2013) or with the help of gamification (Fisher & Khine, 2006). Similarly, instructors have been improvising different methods to teach (Abid et al., 2015; Domínguez & Jaime, 2010) and evaluate (Kawash et al., 2020) this theoretical and practical course. In addition, emerging topics such as cloud computing and big data have created the need to revise the curriculum and methods used to teach DSE (Manzoor et al., 2020).

The research in database systems education has evolved over the years with respect to modern contents influenced by technological advancements, supportive tools that engage learners for better learning, and improvisations in teaching and assessment methods. In recent years in particular, there has been a shift from self-describing data-driven systems to a problem-driven paradigm, that is, a bottom-up approach where data exists before being designed. This paradigm relies mainly on scientific, quantitative, and empirical methods for building models, while pushing the boundaries of typical data management by involving mathematics, statistics, data mining, and machine learning, thus opening a multidisciplinary perspective. Hence, it is important to devote a few lectures to introducing the relevance of such advanced topics.

Researchers have provided useful review articles on other areas, including introductory programming languages (Mehmood et al., 2020), the use of gamification (Obaid et al., 2020), research trends in the use of the enterprise service bus (Aziz et al., 2020), and the role of IoT in agriculture (Farooq et al., 2019, 2020). However, to the best of our knowledge, no such study exists in the area of database systems education. Therefore, this study discusses research work published in different areas of database systems education, covering curricula, tools, and approaches that have been proposed to teach an introductory course on database systems effectively. The rest of the article is structured as follows: Sect. 2 presents related work and compares the related surveys with this study. Section 3 presents the research methodology for this study. Section 4 analyses the major findings of the literature reviewed in this research and categorizes them into different important aspects. Section 5 presents advice for instructors and future directions. Lastly, Sect. 6 concludes the article.

Related work

Systematic Literature Reviews have been found to be a very useful artifact for covering and understanding a domain. A number of interesting review studies have been found in different fields (Farooq et al., 2021; Ishaq et al., 2021). Review articles are generally categorized into narrative or traditional reviews (Abid et al., 2016; Ramzan et al., 2019), systematic literature reviews (Naeem et al., 2020), and meta reviews or mapping studies (Aria & Cuccurullo, 2017; Cobo et al., 2012; Tehseen et al., 2020). This study presents a systematic literature review on database system education.

Database systems education has been discussed from many different perspectives, which include teaching and learning methods, curriculum development, and the facilitation of instructors and students through the development of different tools. For instance, a number of research articles have focused on developing tools for teaching the database systems course (Abut & Ozturk, 1997; Connolly et al., 2005; Pahl et al., 2004). Furthermore, a few authors have evaluated DSE tools by conducting surveys and performing empirical experiments so as to gauge the effectiveness of these tools and their degree of acceptance among the important stakeholders, teachers and students (Brusilovsky et al., 2010; Nelson & Fatimazahra, 2010). Some case studies have also been presented to evaluate the effectiveness of the improvised approaches and developed tools. For example, Regueras et al. (2007) presented a case study using the QUEST system, in which e-learning strategies are used to teach the database course at the undergraduate level, while Myers and Skinner (1997) identified the conflicts that arise when theories in textbooks regarding the development of databases do not work on specific applications.

Another important facet of DSE research focuses on curriculum design and evolution for database systems, where Alrumaih (2016), Bhogal et al. (2012), Cvetanovic et al. (2010), and Sahami et al. (2011) have proposed improvements to the database curriculum for a better understanding of DSE among students, while also keeping evolving technology in perspective. Similarly, Mingyu et al. (2017) have shared their experience in reforming the DSE curriculum by adding topics related to Big Data. A few authors have also developed and evaluated different tools to help instructors teach DSE.

Further studies focus on different aspects, including specialized tools for specific topics in DSE (Mcintyre et al., 1995; Nelson & Fatimazahra, 2010). For instance, Mcintyre et al. (1995) conducted a survey about using state-of-the-art software tools to teach advanced relational database design courses at Cleveland State University. However, the authors did not discuss DSE curricula and pedagogy in their study. Similarly, Nelson and Fatimazahra (2010) conducted a review to highlight that an understanding of basic database knowledge is important for students of the computer science domain as well as those belonging to other domains. They highlighted the issues encountered while teaching the database course in universities and suggested that instructors investigate these difficulties so as to make the course more effective for students. Although these authors have discussed and analyzed the tools used to teach databases, the tools are yet to be categorized according to different methods and research types within DSE. There is also an interesting systematic mapping study by Taipalus and Seppänen (2020) that focuses on teaching SQL, which is a specific topic of DSE. They categorized the selected primary studies into six categories based on their research types. They also applied directed content analysis, covering topics such as student errors in query formulation, characteristics and presentation of the exercise database, specific or non-specific teaching approach suggestions, patterns and visualization, and easing teacher workload.

Another relevant study, which focuses on collaborative learning techniques for teaching the database course, was conducted by Martin et al. (2013). This research discusses collaborative learning techniques and adapts them for the introductory database course at the Barcelona School of Informatics. The motive of the authors was to introduce active learning methods to improve learning and encourage the acquisition of competence. However, the study focused on only a few methods for teaching the database systems course, while other important perspectives, including database curricula and tools for teaching DSE, were not discussed.

The above discussion shows that a considerable amount of research has been conducted in the field of DSE to propose various teaching methods; to develop and test different supportive tools, techniques, and strategies; and to improve the curricula for DSE. However, to the best of our knowledge, there is no study that puts all these relevant and pertinent aspects together while also classifying and discussing the supporting methods and techniques. This review is considerably different from previous studies. Table 1 highlights the differences between this study and other relevant studies in the field of DSE, using the ✓ and – symbols to denote "included" and "not included" respectively. Therefore, this study aims to conduct a systematic mapping study on DSE that focuses on compiling, classifying, and discussing the existing work related to pedagogy, supporting tools, and curricula.

Comparison with other related research articles

| Study | (Mcintyre et al., ) | (Myers & Skinner, ) | (Beecham et al., ) | (Dietrich et al., ) | (Regueras et al., ) | (Nelson & Fatimazahra, ) | (Martin et al., ) | (Abbasi et al., ) | (Luxton-Reilly et al., ) | (Taipalus & Seppänen, ) | This article |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Focus | Database | Database | Software Engineering | Database | Database | Database | Database | OOP | Programming | Database | Database System |

| Research Types Classifications | – | – | – | – | – | – | – | – | – | ||

| Teaching Methods | – | – | – | – | – | – | |||||

| Tools to aid teaching | - | – | |||||||||

| Curricula considered | – | – | – | – | – | – | – | ||||

| Evolution | – | – | – | – | – | – | – | – | – | – | |

| Year | 1995 | 1997 | 2008 | 2008 | 2009 | 2015 | 2013 | 2017 | 2018 | 2020 | 2022 |

Research methodology

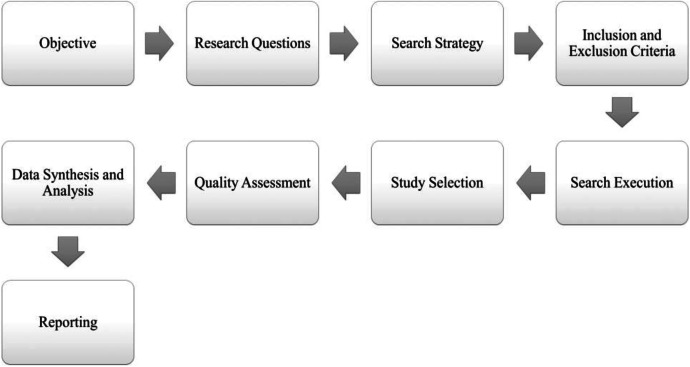

To serve the principal aim of this study, which is to review the research conducted in the area of database systems education, guidance on searching for relevant papers was drawn from existing methods described in various studies (Elberzhager et al., 2012; Keele et al., 2007; Mushtaq et al., 2017). Accordingly, research objectives were formulated, and appropriate research questions and a search strategy were derived from them, as shown in Fig. 1.

Research objectives

The following are the research objectives of this study:

To find high-quality research work in DSE.

To categorize the different aspects of DSE covered by other researchers in the field.

To provide a thorough discussion of the existing work, yielding useful information for instructors in the form of evolution, teaching guidelines, and future research directions.

Research questions

In order to fulfill the research objectives, some relevant research questions have been formulated. These questions along with their motivations have been presented in Table 2 .

Study selection results

| No | Research questions | Motivations |

|---|---|---|

| RQ1 | What are the developments in DSE with respect to tools, methods, and curriculum? | - Identify focal areas of research in DSE - Discuss the work done in each area |

| RQ2 | How has the research in DSE evolved over the past 25 years? | - Discuss the focus of research in different time spans while mapping it onto technological advancements |

Search strategy

The following search string was used to find relevant articles for this study: “Database” AND (“System” OR “Management”) AND (“Education*” OR “Train*” OR “Tech*” OR “Learn*” OR “Guide*” OR “Curricul*”).

Articles were taken from different sources, namely IEEE, Springer, ACM, Science Direct, and other well-known journal and conference sources such as Wiley Online Library, PLOS, and ArXiv. Planning the search for primary studies in the field of DSE is a vital task.
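The Boolean structure of the search string can be illustrated with a short sketch. The code below is only an approximation for local screening of titles or abstracts: it assumes that a trailing `*` means a word-prefix wildcard and that matching is case-insensitive, and the names `TERM_GROUPS` and `matches_search_string` are hypothetical. Each digital library applies its own query syntax and stemming rules, so real result counts will differ.

```python
import re

# Term groups copied from the search string. Groups are ANDed together and
# terms inside a group are ORed. A trailing "*" is read as a prefix wildcard
# (an assumption; each digital library defines its own wildcard semantics).
TERM_GROUPS = [
    ["Database"],
    ["System", "Management"],
    ["Education*", "Train*", "Tech*", "Learn*", "Guide*", "Curricul*"],
]


def term_to_pattern(term: str) -> re.Pattern:
    """Compile one term into a case-insensitive regex (whole word, or word prefix for '*')."""
    if term.endswith("*"):
        return re.compile(r"\b" + re.escape(term[:-1]) + r"\w*", re.IGNORECASE)
    return re.compile(r"\b" + re.escape(term) + r"\b", re.IGNORECASE)


COMPILED_GROUPS = [[term_to_pattern(t) for t in group] for group in TERM_GROUPS]


def matches_search_string(text: str) -> bool:
    """Return True when every group has at least one term present in the text."""
    return all(
        any(pattern.search(text) for pattern in group)
        for group in COMPILED_GROUPS
    )


if __name__ == "__main__":
    # Made-up titles, used only to exercise the filter.
    sample_titles = [
        "Teaching database management: a learning tool",
        "A database system for hospitals",
    ]
    for title in sample_titles:
        print(matches_search_string(title), title)
```

Note that this literal reading does not match plural forms of the non-wildcard terms (for example, "Systems"), which is one reason library search engines usually return more hits than a strict local filter would.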

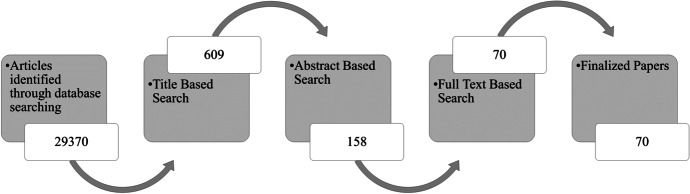

Study selection

A total of 29,370 initial studies were found. These articles went through a selection process in which two authors shortlisted the articles based on the defined inclusion criteria, as shown in Fig. 2. Their conflicts were resolved by involving a third author, and the inclusion/exclusion criteria were refined after resolving the conflicts, as shown in Table 3. A Cohen’s Kappa coefficient of 0.89 was observed between the two authors who selected the articles, which reflects almost perfect agreement between them (Landis & Koch, 1977). The number of papers at the different stages of the selection process for all involved portals is presented in Table 4.
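For readers unfamiliar with the agreement statistic, the following sketch shows how Cohen's Kappa is computed from two reviewers' include/exclude decisions and how the Landis and Koch (1977) bands are applied. The decision lists are made-up toy data, not the authors' screening records, and the function names are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: (p_o - p_e) / (1 - p_e) for two equally long label lists."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items on which both raters chose the same label.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_expected == 1.0:
        raise ValueError("kappa is undefined when both raters use a single identical label")
    return (p_observed - p_expected) / (1 - p_expected)


def landis_koch_label(kappa):
    """Map a kappa value to the Landis and Koch (1977) agreement bands."""
    if kappa < 0:
        return "poor"
    for upper, label in [(0.20, "slight"), (0.40, "fair"), (0.60, "moderate"),
                         (0.80, "substantial")]:
        if kappa <= upper:
            return label
    return "almost perfect"


if __name__ == "__main__":
    # Toy decisions for four papers (hypothetical data).
    reviewer_1 = ["include", "include", "exclude", "exclude"]
    reviewer_2 = ["include", "exclude", "exclude", "exclude"]
    kappa = cohens_kappa(reviewer_1, reviewer_2)
    print(round(kappa, 2), landis_koch_label(kappa))
    print(landis_koch_label(0.89))
```

Under these bands, the reported value of 0.89 falls in the 0.81 to 1.00 range, which is the band labeled almost perfect agreement.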

Selection criteria

| IC | Inclusion criteria |
|---|---|
| IC1 | The study is related to databases and education |
| IC2 | The research must have been published between 1995 and 2022 |
| IC3 | Only full-length papers are included |
| IC4 | Research papers written in the English language are included |
| EC | Exclusion criteria |
| EC1 | Incomplete papers, i.e., presentations, posters, or essays |
| EC2 | Research articles without an abstract |
| EC3 | Research articles written in a language other than English |
| EC4 | Papers that do not include education as their primary focus |

| Phase | Process | Selection stage | IEEE | Springer | ACM | Elsevier | Others | Total |
|---|---|---|---|---|---|---|---|---|
| 1 | Search | Search string | 500 | 5312 | 10,802 | 5696 | 7045 | 29,370 |
| 2 | Screening | Title | 153 | 121 | 115 | 133 | 87 | 609 |
| 3 | Screening | Abstract | 45 | 23 | 29 | 21 | 40 | 158 |
| 4 | Screening | Full text | 10 | 1 | 20 | 2 | 37 | 70 |

Title-based search: Papers that are irrelevant based on their title are manually excluded in the first stage. A large portion of the papers at this stage were irrelevant, and only 609 papers remained after it.

Abstract-based search: At this stage, the abstracts of the papers selected in the previous stage are studied, and the papers are categorized for analysis along with their research approach. After this stage, only 158 papers were left.

Full-text-based analysis: The empirical quality of the articles selected in the previous stage is evaluated at this stage through an analysis of the full text of each article. A total of 70 papers were extracted from the 158 papers as primary studies. The following questions are defined for the final data extraction.

Quality assessment criteria

The following criteria were used to assess the quality of the selected primary studies. This quality assessment was conducted by two authors, as explained above.

(a) The study focuses on curricula, tools, approaches, or assessments in DSE. The possible answers were Yes (1) and No (0).

(b) The study presents a solution to a problem in DSE. The possible answers were Yes (1), Partially (0.5), and No (0).

(c) The study focuses on empirical results. The possible answers were Yes (1) and No (0).

(d) The study is published in a well-reputed venue, as judged through the CORE ranking of conferences and the Scientific Journal Ranking (SJR). The possible answers to this question are given in Table 5.

Score pattern of publication channels

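| Channel type | Quartile number / CORE rank | Score |
|---|---|---|
| Journal Quartile Ranking | Q1 | 2 |
| Journal Quartile Ranking | Q2 | 1.5 |
| Journal Quartile Ranking | Q3 | 1 |
| Journal Quartile Ranking | Q4 | 0.5 |
| Journal Quartile Ranking | Other | 0 |
| Conference/Workshop/Symposium Core Ranking | Core A | 1.5 |
| Conference/Workshop/Symposium Core Ranking | Core B | 1 |
| Conference/Workshop/Symposium Core Ranking | Core C | 0.5 |
| Conference/Workshop/Symposium Core Ranking | Other | 0 |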

Almost 50.00% of the papers scored above the average range, and 33.33% of the papers scored within the average range, i.e., 2.50–3.50. Some articles with a score below 2.50 have also been included in this study, as they present useful information and were published in education-based journals. These studies also discuss important demography- and technology-based aspects that are directly related to DSE.
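The scoring scheme can be summarized in a few lines of code. The sketch below sums the three content criteria and the venue score from Table 5; the function and parameter names are hypothetical, and the use of the keys "journal" and "conference" to select a score table is an assumption made for illustration.

```python
# Venue scores transcribed from Table 5.
JOURNAL_SCORES = {"Q1": 2.0, "Q2": 1.5, "Q3": 1.0, "Q4": 0.5, "Other": 0.0}
CONFERENCE_SCORES = {"Core A": 1.5, "Core B": 1.0, "Core C": 0.5, "Other": 0.0}


def venue_score(channel_type: str, rank: str) -> float:
    """Look up the venue score for a journal quartile or a CORE rank."""
    tables = {"journal": JOURNAL_SCORES, "conference": CONFERENCE_SCORES}
    if channel_type not in tables:
        raise ValueError(f"unknown channel type: {channel_type!r}")
    if rank not in tables[channel_type]:
        raise ValueError(f"unknown rank {rank!r} for channel type {channel_type!r}")
    return tables[channel_type][rank]


def quality_score(focus, solution, empirical, channel_type, rank) -> float:
    """Total quality score: DSE focus + solution + empirical results + venue."""
    if focus not in (0, 1):
        raise ValueError("focus must be 0 or 1")
    if solution not in (0, 0.5, 1):
        raise ValueError("solution must be 0, 0.5, or 1")
    if empirical not in (0, 1):
        raise ValueError("empirical must be 0 or 1")
    return focus + solution + empirical + venue_score(channel_type, rank)


if __name__ == "__main__":
    # A Q1 journal paper that focuses on DSE and presents a solution
    # but reports no empirical results.
    print(quality_score(1, 1, 0, "journal", "Q1"))
```

With this scheme the maximum attainable score is 5 (all three content criteria met and a Q1 journal), which puts the stated average range of 2.50 to 3.50 in context.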

Threats to validity

The validity of this study could have been influenced by the following factors during the literature review.

Construct validity

In this study, construct validity concerns the identification of the primary studies for the research (Elberzhager et al., 2012). To ensure that as many primary studies as possible were included, two authors proposed possible search keywords over multiple iterations. The search string comprises different terms related to database systems (DS) and education. However, the list of terms may be incomplete, and alternative terms could change the final count of papers found (Ampatzoglou et al., 2013). The search was carried out mainly in the IEEE digital library, Science Direct, the ACM digital library, Wiley Online Library, PLOS, ArXiv, and Google Scholar. We believe, based on the statistics of literature search engines, that most of the research can be found in these digital libraries (Garousi et al., 2013). The researchers also searched for related papers in the main DS research venues (VLDB, ICDM, EDBT) in order to minimize the risk of missing important publications.

Including papers that do not belong to top journals or conferences may reduce the quality of the primary studies in this research, but it improves their representativeness. Certain papers that were not from top publication sources were therefore included because of their relevance to the literature, even though they reduce the average score of the primary studies. The possibility of results being altered by improper handling of duplicate papers was also reduced: the duplicates that were found were inspected to determine whether they were the same study. The two authors who conducted the search took the final decision on selecting the papers; where they disagreed, they discussed the paper until an agreement was reached.

Internal validity

This validity deals with data extraction and analysis (Elberzhager et al., 2012). Two authors carried out the data extraction and the classification of primary studies, and conflicts between them were resolved by involving a third author. The Kappa coefficient was 0.89; according to Landis and Koch (1977), this value indicates an almost perfect level of agreement between the authors, which reduces this threat significantly.

Conclusion validity

This threat deals with the identification of improper results, which may lead to improper conclusions. In this case, it concerns factors such as missing studies and wrong data extraction (Ampatzoglou et al., 2013). The objective is to limit these factors so that other authors can repeat the study and reach proper conclusions (Elberzhager et al., 2012).

The interpretation of results might be affected by the selection and classification of primary studies and by the analysis of the selected studies. The previous section has clearly described each step performed in the primary study selection and data extraction activities to minimize this threat. Traceability between the results and the extracted data is supported through the different charts. In our view, slight differences in publication selection and misclassification would not alter the main results.

External validity

This threat deals with the generalization of this research (Mateo et al., 2012). The results of this study relate only to the DSE field, and the validation of the conclusions drawn from this study concerns only the DSE context. The representativeness of the selected studies was not affected because no time restriction was placed on the search for published research. Therefore, this external validity threat does not apply in the context of this research. DS researchers can take the search string and the paper classification scheme presented in this study as a starting point, and further papers can be searched and categorized according to this scheme.

Analysis of compiled research articles

This section presents the analysis of the compiled research articles carefully selected for this study. It presents the findings with respect to the research questions described in Table 2 .

Selection results

A total of 70 papers were identified and analyzed to answer the RQs described above. Table 6 lists the selected papers along with their classification results and quality assessment scores.

Classification and quality assessment of selected articles (columns a to d are the quality assessment scores; Total is their sum)

| Ref | Channel | Year | Research Type | a | b | c | d | Total |
|---|---|---|---|---|---|---|---|---|
| Tools | | | | | | | | |
| (Mcintyre et al.) | Journal | 1995 | Review | 1 | 1 | 0 | 2 | 4 |
| (Abut & Ozturk) | Conference | 1997 | Experiment | 1 | 1 | 0 | 0 | 2 |
| (Yau & Karim) | Conference | 2003 | Experiment | 1 | 0.5 | 0 | 1 | 2.5 |
| (Pahl et al.) | Journal | 2004 | Experiment | 1 | 1 | 0 | 0 | 2 |
| (Connolly et al.) | Conference | 2005 | Experiment | 1 | 0.5 | 1 | 1 | 3.5 |
| (Regueras et al.) | Conference | 2007 | Case Study | 1 | 1 | 1 | 0 | 3 |
| (Sciore) | Symposium | 2007 | Case Study | 1 | 0 | 1 | 1.5 | 3.5 |
| (Holliday & Wang) | Conference | 2009 | Experiment | 1 | 0.5 | 1 | 0.5 | 3 |
| (Brusilovsky et al.) | Journal | 2010 | Experiment | 1 | 1 | 1 | 2 | 5 |
| (Cvetanovic et al.) | Journal | 2010 | Experiment | 1 | 1 | 0 | 2 | 4 |
| (Nelson & Fatimazahra) | Journal | 2010 | Review | 1 | 1 | 0 | 1 | 3 |
| (Wang et al.) | Conference | 2010 | Experiment | 1 | 1 | 0 | 1.5 | 3.5 |
| (Nagataki et al.) | Journal | 2013 | Experiment | 0 | 1 | 1 | 2 | 4 |
| (Yue) | Journal | 2013 | Experiment | 1 | 1 | 1 | 1.5 | 4.5 |
| (Abelló Gamazo et al.) | Journal | 2016 | Experiment | 1 | 1 | 1 | 2 | 5 |
| (Taipalus & Perälä) | Symposium | 2019 | Review | 1 | 1 | 1 | 1.5 | 4.5 |
| Methods | | | | | | | | |
| (Dietrich & Urban) | Conference | 1996 | Review | 1 | 1 | 0 | 1.5 | 3.5 |
| (Urban & Dietrich) | Journal | 1997 | Experiment | 1 | 1 | 0 | 0 | 2 |
| (Nelson et al.) | Workshop | 2003 | Review | 1 | 1 | 0 | 0 | 2 |
| (Amadio) | Conference | 2003 | Experiment | 1 | 0.5 | 1 | 0.5 | 3 |
| (Connolly & Begg) | Journal | 2006 | Experiment | 1 | 1 | 0 | 2 | 4 |
| (Morien) | Journal | 2006 | Experiment | 1 | 0.5 | 1 | 2 | 4.5 |
| (Prince & Felder) | Journal | 2006 | Review | 0 | 0.5 | 0 | 2 | 2.5 |
| (Martinez-González & Duffing) | Journal | 2007 | Review | 1 | 1 | 0 | 2 | 4 |
| (Gudivada et al.) | Conference | 2007 | Review | 1 | 0.5 | 0 | 0 | 1.5 |
| (Svahnberg et al.) | Symposium | 2008 | Review | 1 | 0 | 0 | 1.5 | 2.5 |
| (Brusilovsky et al.) | Conference | 2008 | Experiment | 1 | 0.5 | 1 | 1.5 | 4 |
| (Dominguez & Jaime) | Journal | 2010 | Experiment | 1 | 1 | 1 | 2 | 5 |
| (Efendiouglu & Yelken) | Journal | 2010 | Experiment | 1 | 1 | 1 | 0 | 3 |
| (Hou & Chen) | Conference | 2010 | Review | 1 | 0.5 | 1 | 0 | 2.5 |
| (Yuelan et al.) | Conference | 2011 | Experiment | 1 | 0.5 | 0 | 0 | 1.5 |
| (Zheng & Dong) | Conference | 2011 | Review | 1 | 1 | 0 | 1 | 3 |
| (Al-Shuaily) | Workshop | 2012 | Review | 1 | 1 | 1 | 0 | 3 |
| (Juxiang & Zhihong) | Conference | 2012 | Review | 1 | 0.5 | 0 | 0 | 1.5 |
| (Chen et al.) | Journal | 2012 | Review | 1 | 1 | 1 | 2 | 5 |
| (Martin et al.) | Journal | 2013 | Review | 1 | 1 | 1 | 2 | 5 |
| (Rashid & Al-Radhy) | Conference | 2014 | Review | 1 | 0.5 | 1 | 0 | 2.5 |
| (Wang & Chen) | Conference | 2014 | Experiment | 1 | 0 | 1 | 0 | 2 |
| (Dicheva et al.) | Journal | 2015 | Review | 1 | 1 | 0 | 1 | 3 |
| (Rashid) | Journal | 2015 | Review | 1 | 0.5 | 1 | 2 | 4.5 |
| (Etemad & Küpçü) | Journal | 2018 | Experiment | 0 | 0.5 | 1 | 2 | 3.5 |
| (Kui et al.) | Conference | 2018 | Experiment | 1 | 1 | 0 | 1 | 3 |
| (Taipalus et al.) | Journal | 2018 | Review | 1 | 1 | 0 | 2 | 4 |
| (Zhang et al.) | Conference | 2018 | Experiment | 1 | 1 | 1 | 0 | 3 |
| (Shebaro) | Journal | 2018 | Review | 1 | 0.5 | 1 | 0 | 2.5 |
| (Cai & Gao) | Conference | 2019 | Review | 1 | 1 | 0 | 0 | 2 |
| (Kawash et al.) | Symposium | 2020 | Experiment | 1 | 1 | 1 | 1.5 | 4.5 |
| (Taipalus & Seppänen) | Journal | 2020 | Review | 1 | 1 | 1 | 2 | 5 |
| (Canedo et al.) | Journal | 2021 | Experiment | 1 | 1 | 1 | 1 | 4 |
| (Naik & Gajjar) | Journal | 2021 | Case Study | 1 | 1 | 1 | 0 | 3 |
| (Ko et al.) | Journal | 2021 | Review | 1 | 1 | 1 | 2 | 5 |
| (Sibia et al.) | Workshop | 2022 | Case Study | 1 | 1 | 1 | 0 | 3 |
| Curriculum | | | | | | | | |
| (Dean & Milani) | Conference | 1995 | Experiment | 1 | 0.5 | 1 | 0.5 | 3 |
| (Urban & Dietrich) | Symposium | 2001 | Case Study | 1 | 0 | 1 | 1.5 | 3.5 |
| (Calero et al.) | Journal | 2003 | Review | 1 | 1 | 0 | 2 | 4 |
| (Robbert & Ricardo) | Conference | 2003 | Review | 1 | 1 | 0 | 1.5 | 3.5 |
| (Adams et al.) | Journal | 2004 | Experiment | 1 | 1 | 0 | 0 | 2 |
| (Conklin & Heinrichs) | Journal | 2005 | Review | 1 | 1 | 1 | 0 | 3 |
| (Dietrich et al.) | Journal | 2008 | Case Study | 0 | 1 | 1 | 2 | 4 |
| (Luo et al.) | Conference | 2008 | Experiment | 1 | 1 | 1 | 0 | 3 |
| (Marshall) | Conference | 2011 | Review | 1 | 1 | 1 | 0 | 3 |
| (Bhogal et al.) | Workshop | 2012 | Case Study | 1 | 1 | 0 | 0 | 2 |
| (Picciano) | Journal | 2012 | Review | 1 | 1 | 0 | 0 | 2 |
| (Abid et al.) | Journal | 2015 | Review | 1 | 1 | 1 | 1 | 4 |
| (Taipalus & Seppänen) | Journal | 2015 | Experiment | 1 | 1 | 1 | 2 | 5 |
| (Abourezq & Idrissi) | Journal | 2016 | Experiment | 1 | 1 | 0 | 0.5 | 2.5 |
| (Silva et al.) | Conference | 2016 | Experiment | 1 | 1 | 0 | 1.5 | 3.5 |
| (Zhanquan et al.) | Journal | 2016 | Review | 1 | 1 | 1 | 0 | 3 |
| (Mingyu et al.) | Conference | 2017 | Experiment | 1 | 1 | 1 | 0 | 3 |
| (Andersson et al.) | Conference | 2019 | Review | 1 | 0.5 | 0 | 0 | 1.5 |
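
The Total column in the table above appears to be the plain sum of the four criterion scores a, b, c and d. The following minimal sketch checks that assumption on a handful of rows copied from the table. The names `ROWS`, `total_score` and `mismatches` are illustrative only and do not come from the study, and the summation rule is inferred from the table values rather than stated here by the authors.

```python
# Rows copied from the table: (reference, a, b, c, d, reported total).
# The meaning of criteria a-d is defined in the paper's quality
# assessment section; only the numbers are used here.
ROWS = [
    ("Mcintyre et al.", 1, 1, 0, 2, 4),
    ("Yau & Karim", 1, 0.5, 0, 1, 2.5),
    ("Sciore", 1, 0, 1, 1.5, 3.5),
    ("Nagataki et al.", 0, 1, 1, 2, 4),
    ("Andersson et al.", 1, 0.5, 0, 0, 1.5),
]


def total_score(a, b, c, d):
    """Sum the four criterion scores (assumed aggregation rule)."""
    return a + b + c + d


def mismatches(rows):
    """Return the references whose reported total differs from a + b + c + d."""
    return [
        ref
        for ref, a, b, c, d, reported in rows
        if total_score(a, b, c, d) != reported
    ]


if __name__ == "__main__":
    # An empty list means every sampled row is consistent with the sum rule.
    print(mismatches(ROWS))
```

Because every score is a multiple of 0.5, the floating-point sums are exact and a direct equality comparison is safe in this sketch.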

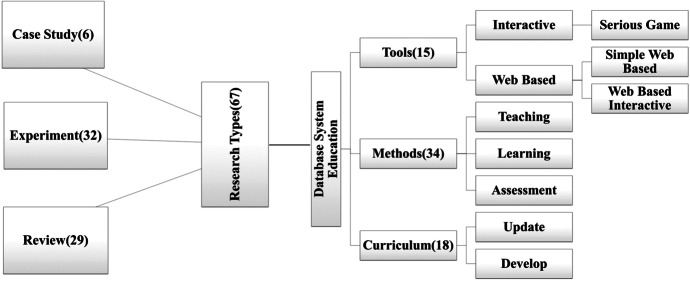

RQ1. Categorization of research work in DSE field

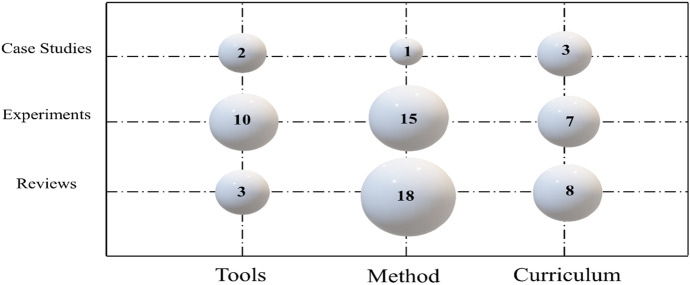

The analysis in this study reveals that the literature can be grouped into three categories, as shown in Fig. 3. Tools: any additional application that helps instructors in teaching and students in learning. Methods: any change aimed at improving pedagogy or cognition. Curriculum: the course content domains and their relative importance in a degree program.

Taxonomy of DSE study types

Most of the articles provide a solution by gathering data and demonstrate the novelty of their research through results. These papers are categorized as experiments with respect to their research type. Some are case study papers, which are used to generate an in-depth, multifaceted understanding of a complex issue in its real-life context, while a few others are review studies that analyze previously used approaches. Overall, a majority of the included articles evaluated their results with the help of experiments, while others conducted reviews to establish an opinion, as shown in Fig. 4.

Cross Mapping of DSE study type and research Types

Educational tools, especially those related to technology, are entering the market faster than ever before (Calderon et al., 2011). The transition to active learning approaches, in which the learner is engaged in the process rather than passively taking in information, necessitates a variety of tools to help ensure success. As with most educational initiatives, time should be taken to consider the goals of the activity, the type of learners, and the tools needed to meet the goals. Constant reassessment of tools is important for discovering innovations and reforms that improve teaching and learning (Irby & Wilkerson, 2003). For this purpose, various types of educational tools, such as interactive, web-based, and game-based tools, have been introduced to help instructors explain topics more effectively.

The inclusion of technology in the classroom may help learners compete in the job market as they approach the start of their careers. It is important for instructors to acknowledge that students are more interested in using technology to learn database courses than in merely being taught through traditional theory, project, and practice-based methods (Adams et al., 2004). With these aspects in view, many authors have conducted significant research on web-based and interactive tools that help learners gain a better understanding of basic database concepts.

Considerable research has been conducted with a focus on students’ learning. In this study, we discuss support for students’ learning in terms of two major findings: tools that proved more helpful than other tools, and proposed tools whose outcome was the same as that of the traditional classroom environment. For example, Abut and Ozturk (1997) proposed an interactive classroom environment for conducting database classes. Online tools such as an electronic “Whiteboard”, electronic textbooks, advanced telecommunication networks, and a few other resources such as Matlab and the World Wide Web were the main highlights of their proposed smart classroom. Also, Pahl et al. (2004) presented an interactive multimedia-based system for the knowledge- and skill-oriented Web-based education of database course students. The authors differentiated their proposed classroom environment from the traditional classroom-based approach by using tool-mediated independent learning and training in an authentic setting. On the other hand, some authors have evaluated educational tools based on their usage and impact on students’ learning. For example, Brusilovsky et al. (2010) evaluated the technical and conceptual difficulties of using several interactive educational tools in the context of a single course. They presented a combined Exploratorium for database courses and an experimental platform that delivers modified access to numerous types of interactive learning activities.

Also, Taipalus and Perälä (2019) investigated the types of errors that persist when students write SQL and mapped these errors onto different query concepts. Moreover, Abelló Gamazo et al. (2016) presented LearnSQL, a software tool that allows automatic and efficient e-learning and e-assessment of relational database skills. Apart from these, Yue (2013) proposed the database tool Sakila as a unified platform to support instruction and multiple assignments in a graduate database course over five semesters. According to this study, students found this tool more useful and interesting than the highly simplified databases developed by the instructor or obtained from textbooks. On the other hand, some authors have proposed tools whose main objective is to strengthen students’ grip on the topic by addressing the pedagogical problems of using educational tools. Connolly et al. (2005) discussed some of the pedagogical problems underlying the development of a constructive learning environment that uses problem-based learning, a simulation game, and interactive visualizations to help teach database analysis and design. Also, Yau and Karim (2003) proposed a smart classroom based on pervasive computing technology to facilitate collaborative learning among learners. The major aim of this smart classroom is to improve the quality of interaction between instructors and students during lectures.

Student satisfaction is also an important factor in making educational tools more effective. While a tool supports the students’ learning process, it should also be flexible enough to earn the students’ confidence by adapting to their needs (Brusilovsky et al., 2010; Connolly et al., 2005; Pahl et al., 2004). Also, Cvetanovic et al. (2010) proposed a web-based educational system named ADVICE, which helps students reduce the gap between DBMS theory and practice. On the other hand, some authors have enhanced existing educational tools in the traditional classroom environment to address students’ concerns (Nelson & Fatimazahra, 2010; Regueras et al., 2007) (Table 7).

Tools: Adopted in DSE and their impacts

| Objective | Findings | References | Target topic / exposition platform |
|---|---|---|---|
| Support of Students’ learning | More supportive | • (Abut & Ozturk) | • Data models and data modelling principles • IDLE (the Interactive Database Learning Environment) |
| | | • (Pahl et al.) | • Data models • IDLE |
| | | • (Brusilovsky et al.) | • SQL • SQL-Knot, SQL-Lab |
| | | • Conceptual database design, Logical database design, Physical database design • Online games | • SQL • Interactive |
| | | • (Abbasi et al.) | • Relational Database • LearnSQL |
| | | • (Yue) | • Relational Calculus, XML generation, XPath, and XQuery • Sakila |
| | | • (Nelson & Fatimazahra) | • Introductory Database topics • TLAD |
| | Same as others | • (Connolly et al.) | • Conceptual database design, Logical database design, Physical database design • Online games |
| | | • (Yau & Karim) | • Introductory Database topics • RCSM |
| Students’ Satisfaction | Satisfied | • (Brusilovsky et al.) | • SQL • SQL-Knot, SQL-Lab |
| | | • (Cvetanovic et al.) | • SQL, formal query languages, and normalization • ADVICE |
| | | • (Connolly et al.) | |
| | | • (Pahl et al.) | • Data models • IDLE |
| | Similar satisfaction as compared to traditional classroom environment | • (Nelson & Fatimazahra) | • Introductory Database topics • TLAD |
| | | • (Regueras et al.) | • Entity Relationship Model • QUEST |
| Students’ motivation towards database development | Same impact as other approaches | • (Nagataki et al.) | • SQL • sAccess |
| | Helped students to develop better database development strategies | • (Brusilovsky et al.) | • SQL • SQL-Knot, SQL-Lab |
| | | • (Mcintyre et al.) | • Relational Database Design • Expert IT system |
| Students’ course performance | Better performance | • (Cvetanovic et al.) | • SQL, formal query languages, and normalization • ADVICE |
| | | • (Wang et al.) | • Entity Relationship Model, SQL • MeTube |
| | | • (Holliday & Wang) | • MySQL • MeTube |
| | | • (Taipalus & Perälä) | • SQL • Interactive |
| | Same performance as other approaches | • (Pahl et al.) | • Data models • IDLE |
| | | • (Yue) | • Relational Calculus, XML generation, XPath, and XQuery • Sakila |
| Student and instructor interaction percentage | Increased | • (Abut & Ozturk) | • Introductory Database topics • “Whiteboard” |
| | | • (Yau & Karim) | • Introductory Database topics • RCSM |
| | | • (Taipalus & Perälä) | • SQL • Interactive |

Hands-on database development is a main concern in most institutes as well as in industry. However, tools that assist students in database development and query writing, especially in SQL, remain a major concern (Brusilovsky et al., 2010; Nagataki et al., 2013).

Students’ grades reflect their conceptual clarity and database development skills. Grades are also important for securing jobs and scholarships after graduation, which is why educational learning tools that help students perform well in exams are important (Cvetanovic et al., 2010; Taipalus et al., 2018). For instance, Wang et al. (2010) proposed MeTube, a variation of YouTube. Consequently, existing educational tools need to be upgraded or replaced by more suitable assessment-oriented interactive tools to meet the challenging needs of students (Pahl et al., 2004; Yuelan et al., 2011).

Another objective of developing educational tools is to increase the interaction between students and instructors. In the modern era, almost every institute follows student-centered learning (SCL). In SCL, the interaction between students and the instructor increases, with most of the interaction initiated by the students. To support SCL, interactive and web-based educational tools need to assign more roles to students than to instructors (Abbasi et al., 2016; Taipalus & Perälä, 2019; Yau & Karim, 2003).

Theory versus practice is still one of the main issues in DSE teaching methods. The traditional teaching method presents theory first, and the concepts learned in the theoretical lectures are then implemented in the lab. Others think that it is better to start by teaching how to write queries, followed by the design principles for databases, while a limited number of credit hours are allocated to general database theory topics. This part of the article discusses the different trends in teaching and learning styles, along with the curriculum and assessment methods discussed in the DSE literature.

A variety of teaching methods have been designed, tested, and evaluated by different researchers (Yuelan et al., 2011; Chen et al., 2012; Connolly & Begg, 2006). Some authors have reformed teaching methods to match the requirements of modern lecture delivery. For example, Yuelan et al. (2011) reformed their teaching method using several approaches: (a) modern ways of education, which include multimedia sound, animation, and simulation of the processes and workings of database systems to motivate and inspire the students; (b) a project-driven approach, which aims to familiarize students with system operations by implementing a project; (c) strengthening the experimental aspects, to help students get a strong grip on basic database knowledge and develop a self-learning ability; and (d) improving the traditional assessment method, so that students turn in their research and development work as the content of the exam and learn to solve problems on their own.

The main aim of any teaching method is to make students learn the subject effectively. Students must show interest in order to gain something from the lectures delivered by the instructors. For this, teaching methods should be interactive and interesting enough to develop the students’ interest in the subject. Students can show interest in the subject by asking more relevant questions or by completing home tasks and assignments on time. Authors have proposed several teaching methods to make topics more interesting. For example, Chen et al. (2012) proposed a scaffold concept mapping strategy, which considers a student’s prior knowledge and provides flexible learning aids (scaffolding and fading) for reading and drawing concept maps. Also, Connolly & Begg (2006) examined different problems in the teaching of database analysis and design, and proposed a teaching approach driven by principles found in the constructivist epistemology to overcome these problems. This constructivist approach is based on the cognitive apprenticeship model and project-based learning. Similarly, Domínguez & Jaime (2010) proposed an active method for database design through the development of practical tasks in a face-to-face course. They analyzed the results of five academic years using a quasi-experimental design. In the first three years, a traditional strategy was followed and a course management system was used as a material repository.

On the other hand, Dietrich and Urban (1996) described the use of cooperative group learning concepts in support of an undergraduate database management course. They designed the project deliverables in such a way that students develop skills for database implementation. Similarly, Zhang et al. (2018) discussed several effective classroom teaching measures covering the innovation of teaching content, teaching methods, teaching evaluation, and assessment methods. They practiced these teaching measures in a database technologies and applications course at Qinghai University. Moreover, Hou and Chen (2010) proposed a new teaching method based on blended learning theory, which merges traditional and constructivist methods. They applied blended learning theory to the teaching of an Access database programming course.

Problem-solving skills are a key aspect of any type of learning at any age. Students must possess these skills to tackle hurdles both in their institutes and in industry. Creative and innovative students find various and unique ways to solve daily tasks, which is why they are more likely to secure good grades and jobs. Authors have been working to introduce teaching methods that develop problem-solving skills in students (Al-Shuaily, 2012; Cai & Gao, 2019; Martinez-González & Duffing, 2007; Gudivada et al., 2007). For instance, Al-Shuaily (2012) explored four cognitive factors that might influence SQL teaching and learning methods and approaches: (i) novices’ ability to understand, (ii) novices’ ability to translate, (iii) novices’ ability to write, and (iv) novices’ skills. Also, Cai and Gao (2019) reformed the teaching method in the database course of two higher education institutes in China. Skills and knowledge, innovation ability, and data abstraction were the main objectives of their study. Similarly, Martinez-González and Duffing (2007) analyzed the impact of European Union (EU) convergence on different universities across Europe. According to their study, these institutes need to restructure their degree programs and teaching methodologies. Moreover, Gudivada et al. (2007) proposed a learning method in which students work with large datasets. They used the Amazon Web Services API and a .NET/C# application to extract a subset of the product database to enhance student learning in a relational database course.

On the other hand, authors have also evaluated traditional teaching methods with the aim of enhancing students’ problem-solving skills (Eaglestone & Nunes, 2004; Wang & Chen, 2014; Efendiouglu & Yelken, 2010). For example, Eaglestone and Nunes (2004) shared their experiences of delivering a database design course at Sheffield University and discussed some of the issues they faced regarding teaching, learning, and assessment. Likewise, Wang and Chen (2014) summarized the main problems in the teaching of traditional database theory and application. According to the authors, the teaching method is outdated and does not focus on the important combination of theory and practice. Moreover, Efendiouglu and Yelken (2010) investigated the effects of two different methods, Programmed Instruction (PI) and Meaningful Learning (ML), on primary school teacher candidates’ academic achievements and attitudes toward computer-based education, and sought to define the candidates’ views on these methods. The results show that PI is not favoured for teaching applications because of its behavioural structure (Table 8).

Methods: Teaching approaches adopted in DSE

| Objective | Findings | References | Target topic / approach or method |
|---|---|---|---|
| Develop interest in Subject | Students begin to ask more relevant questions | • (Chen et al.) | • Data modeling, relational databases, database query languages • Scaffolded Concept |
| | | • (Connolly & Begg) | • Database concepts, Database Analysis and Design, Implementation • Constructivist-Based Approach |
| | | • (Dominguez & Jaime) | • Database design • Project-based learning |
| | | • (Rashid & Al-Radhy) | • Database Analysis and Design • Project based learning, Assessment based learning |
| | | • (Yuelan et al.) | • Principles of Database, SQL Server • Project-driven approach |
| | | • (Taipalus & Seppänen) | • SQL • Group learning and projects |
| | | • (Brusilovsky et al.) | • SQL • SQL Exploratorium |
| | | • (Hou & Chen) | • Access • Blending Learning |
| | Same effect as other traditional teaching methods | • (Dietrich & Urban) | • ER Model, Relational Design, SQL • Teaching and learning strategies |
| | | • (Kui et al.) | • E-R model, relational model, SQL • Flipped Classroom |
| | | • (Rashid) | • Entity Relational Database, Relational Algebra, Normalization • Learning and Assessment Methods |
| | | • (Zhang et al.) | • Data Models, Physical Data Design • Project teaching mode, Discussion teaching mode, Demonstrative teaching mode |
| Develop problem solving skills | Students become creative and try new methods to solve tasks | • (Al-Shuaily) | • SQL • Cognitive task, Comprehension Task |
| | | • (Cai & Gao) | • E-R model, relational model, SQL • Database Course for Liberal Arts Majors |
| | | • (Martin et al.) | • SQL and relational algebra, The relational model, Transaction management • Collaborative Learning |
| | | • (Martinez-González & Duffing) | • Data Models, Physical Data Design, SQL • European convergence in higher education |
| | | • (Prince & Felder) | • SQL • Inductive teaching and learning |
| | | • (Urban & Dietrich) | • Relational database mapping and prototyping, Database system implementation • Cooperative group project based learning |
| | | • (Gudivada et al.) | • SQL, Logical design, Physical Design • Working with large datasets from Amazon |
| | Use same methods as mentioned in books | • (Eaglestone & Nunes) | • SQL, ER Model • Pedagogical model, teaching and learning strategies |
| | | • (Wang et al.) | • SQL Server and Oracle • Refine Teaching Method |
| | | • (Efendiouglu & Yelken) | • SQL • Programmed instruction and meaningful learning |
| Motivate students to explore topics through independent study | Students begin to read books and internet to enhance their knowledge independently or in groups | • (Cai & Gao) | • SQL, E-R model, relational model • Database Course for Liberal Arts Majors |
| | | • (Kawash et al.) | • SQL, Entity Relationship, Relational model • Group Exams |
| | | • (Martin et al.) | • SQL, Relational Model, UML • Collaborative Learning |
| | | • (Martinez-González & Duffing) | • SQL, Data Models, Physical Data Design • European convergence in higher education |
| | | • (Amadio) | • SQL Programming • Team Learning |
| | Students stick to the course content | • (Morien) | • Entity modeling, relational modelling • Teaching Reform |
| | | • (Eaglestone & Nunes) | • SQL, ER Model • Pedagogical model, teaching and learning strategies |
| | | • (Zheng & Dong) | • SQL, ER Model • Teaching Reform and Practice |
| Focus on theory and practical Gap | Students begin to apply theoretical knowledge on developing database applications | • (Al-Shuaily) | • SQL • Cognitive task, Comprehension Task |
| | | • (Etemad & Küpçü) | • SQL • Cooperative group project-based learning |
| | | • (Svahnberg et al.) | • SQL • Industrial project-based learning |
| | | • (Taipalus et al.) | • SQL • Group learning and projects |
| | | • (Juxiang & Zhihong) | • SQL, ER Model • Computational Thinking |
| | | • (Connolly & Begg) | • Database concepts, Database Analysis and Design, Implementation • Constructivist-Based Approach |
| | | • (Rashid & Al-Radhy) | • Database Analysis and Design • Project based learning, Assessment based learning |
| | | • (Naik & Gajjar) | • Database designing, transaction management, SQL • ENABLE, Project based learning |
| | Students only focus on theory to clear exams | • (Wang et al.) | • SQL Server and Oracle • Refine Teaching Method |
| | | • (Zheng & Dong) | • SQL, ER Model • Teaching Reform and Practice |
| | | • (Nelson et al.) | • Advanced relational design, UML, data warehousing • Teaching Methods, Assessment Methods |

Students become creative and innovative when they try to study on their own and from different resources rather than from curriculum books only. In the modern era, various resources are available on both online and offline platforms. Modern teaching methods must emphasize making students independent of curriculum books and teaching them to learn independently (Amadio et al., 2003; Cai & Gao, 2019; Martin et al., 2013). Also, Kawash et al. (2020) proposed a group study-based learning approach called Graded Group Activities (GGAs), in which students team up to take the exam as a group. On the other hand, a few studies have emphasized course content to prepare students for final exams. For example, Zheng and Dong (2011) discussed the issues of computer science teaching with a particular focus on database systems, presenting different characteristics of the course, its teaching content, and suggestions for teaching it effectively.

As technology is evolving at a rapid pace, students need to gain practical experience from the start. Basic theoretical database concepts are important, but they are of little use without implementation in real-world projects. Most students study with the sole aim of passing exams using theoretical knowledge, and very few want to gain practical experience (Wang & Chen, 2014; Zheng & Dong, 2011). To reduce the gap between theory and its implementation, authors have proposed teaching methods that develop students’ interest in real-world projects (Naik & Gajjar, 2021; Svahnberg et al., 2008; Taipalus et al., 2018). Moreover, Juxiang and Zhihong (2012) proposed that teaching should start from application scenarios and associate theoretical database knowledge with the process that runs from analysis and modeling to establishing a database application. Also, Svahnberg et al. (2008) explained that, under particular conditions, it is possible to use students as subjects for experimental studies in DSE and to influence them by providing responses that are in line with industrial practice.

On the other hand, Nelson et al. (2003) evaluated the different teaching methods used to teach different database modules in the School of Computing and Technology at the University of Sunderland. They outlined suggestions for changes to the database curriculum to further integrate research and state-of-the-art systems in databases.

The database curriculum has been revisited many times in the form of guidelines that not only present the contents but also suggest the approximate time needed to cover different topics. According to the ACM curriculum guidelines (Lunt et al., 2008) for undergraduate programs in computer science, the overall coverage time for this course is 46.5 hours. Of these, 11 hours are allotted to core topics: Information Models (4 core hours), Database Systems (3 core hours), and Data Modeling (4 core hours). The remaining hours are allocated to elective topics such as Indexing, Relational Databases, Query Languages, Relational Database Design, Transaction Processing, Distributed Databases, Physical Database Design, Data Mining, Information Storage and Retrieval, Hypermedia, Multimedia Systems, and Digital Libraries (Marshall, 2012). In contrast, according to the ACM curriculum guidelines (2013) for undergraduate programs in computer science, this course should be completed in 15 weeks, with a two-and-a-half-hour lecture and a four-hour lab session per week on average (Brady et al., 2004). Thus, the revised version emphasizes practice-based learning through the lab component. Numerous organizations have made efforts in this field to classify DSE (Dietrich et al., 2008). DSE model curricula, bodies of knowledge (BOKs), and some standardization aspects in this field are discussed below:

Model curricula

Standards bodies set the curriculum guidelines for teaching undergraduate degree programs in computing disciplines. The curricula that include guidelines for teaching databases are: Computer Engineering Curricula (CEC) (Meier et al., 2008), Information Technology Curricula (ITC) (Alrumaih, 2016), Computing Curriculum Software Engineering (CCSE) (Meyer, 2001), and Cyber Security Curricula (CSC) (Brady et al., 2004; Bishop et al., 2017).

Bodies of knowledge (BOK)

A BOK includes the set of thoughts and activities related to a professional area, whereas a model curriculum provides a set of guidelines to address education issues (Sahami et al., 2011). The database body of knowledge comprises (a) the Data Management Body of Knowledge (DM-BOK), (b) Software Engineering Education Knowledge (SEEK) (Sobel, 2003), and (c) the SE body of knowledge (SWEBOK) (Swebok Evolution: IEEE Computer Society n.d.).

Apart from the model curricula and bodies of knowledge, there also exist some standards related to the database and its different modules: ISO/IEC 9075-1:2016 (Computing Curricula, 1991) and ISO/IEC 10026-1:1998 (Suryn, 2003).

We also draw on advice from some studies (Elberzhager et al., 2012; Keele et al., 2007) to search for relevant papers. To conduct this systematic study, it is essential to formulate the primary research questions (Mushtaq et al., 2017). Since data management techniques and software are evolving rapidly, the database curriculum should also be updated to meet these new requirements. Some authors have described ways of updating the content of courses to keep pace with specific developments in the field, and others have developed new database curricula to keep up with new data management techniques.

Furthermore, some authors have suggested updates to the database curriculum based on continuously evolving technology and the introduction of big data. For instance, Bhogal et al. (2012) have shown that database curricula need to be updated and modernized, which can be achieved by extending the current database concepts to cover strategies for handling ever-changing user requirements and the ways database technology has evolved to meet them. Likewise, Picciano (2012) examines the evolving world of big data and analytics in American higher education. According to the author, the "data driven" decision-making method should be used to help institutes evaluate strategies that can improve retention and to update the curriculum so that it covers basic big data concepts and applications, since data-driven decision making has already entered the era of big data and learning analytics. Furthermore, Marshall (2011) presented the challenges faced when developing a curriculum for a Computer Science degree program in the South African context that is earmarked for international recognition. According to the author, the curriculum needs to adhere to both the policy and the content requirements in order to be rated as being of a particular quality.

Similarly, some studies (Abourezq & Idrissi, 2016; Mingyu et al., 2017) described the influence of big data from a social perspective, identified gaps in the computer science database curriculum, especially in the big data era, and explored teaching improvements in the practical and theoretical teaching mode, the teaching content, and the teaching practice platform of the database curriculum. Silva et al. (2016) also propose teaching SQL as a general language that can be used in a wide range of database systems, from traditional relational database management systems to big data systems.

On the other hand, different authors have developed database curricula based on the different academic backgrounds of students. For example, Dean and Milani (1995) recommended changes in computer science curricula based on the practice at the United States Military Academy (USMA). They placed great emphasis on practical demonstration of the topic rather than theoretical explanation, especially for students not majoring in computer science. Furthermore, Urban and Dietrich (2001) described the development of a second course on database systems for undergraduates, preparing students for the advanced database concepts that they will exercise in industry. They also shared their experience of teaching the course, elaborating on the topics and assignments. Andersson et al. (2019) proposed variations in the core topics of the database management course for students with an engineering background. Moreover, Dietrich et al. (2014) described two animations developed with images and color that visually and dynamically introduce fundamental relational database concepts and querying to students of many majors. The goal is that educators in diverse academic disciplines should be able to incorporate these animations into their existing courses to meet their pedagogical needs.

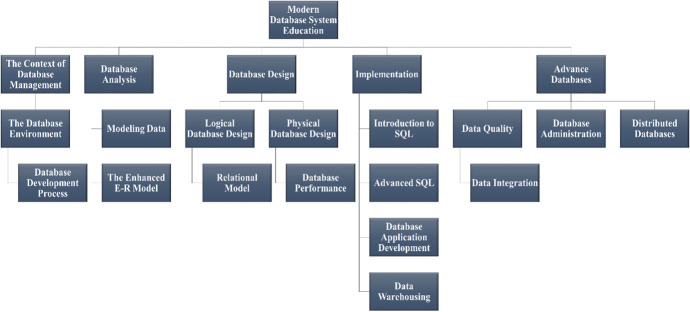

Information systems have evolved into large-scale distributed systems that store a huge amount of data across different servers and process it using different distributed data processing frameworks. This evolution has given birth to new paradigms in the database systems domain, termed NoSQL and Big Data systems, which deviate significantly from conventional relational and distributed database management systems. To offer a sustainable and practical CS education, these new paradigms and methodologies, as shown in Fig. 5, should be included in database education (Kleiner, 2015). Tables 9 and 10 show the summarized findings of the reviewed curriculum-based studies. This section also proposes appropriate textbooks for the theory-, project-, and practice-based teaching methodologies, as shown in Table 9. The proposed books are selected purely on the basis of their usage in top universities around the world, such as Massachusetts Institute of Technology, Stanford University, Harvard University, University of Oxford, University of Cambridge, and University of Singapore, and their coverage of the core topics mentioned in the database curriculum.

Concepts in Database Systems Education (Kleiner, 2015 )

Recommended text books for DSE

| Methodology | Book title | Author(s) | Edition | Year |
|---|---|---|---|---|
| Theory | Database Management Systems | Ramakrishnan, Raghu, and Johannes Gehrke | 3 | 2002 |
| Theory | Database Systems: The Complete Book | Garcia-Molina, Ullman and Widom | 2 | 2008 |
| Theory | Introduction to Database Systems | C. J. Date (Addison-Wesley) | 8 | 2003 |
| Theory | Introduction to Database Systems | S. Bressan and B. Catania | 1 | 2005 |
| Theory | Database system concepts | Silberschatz, A., Korth, H.F. and Sudarshan, S | 7 | 2019 |
| Theory | A first course in database systems | Ullman, J. and Widom, J | 3 | 2007 |
| Project | Modern Database Management | Jeffrey A. Hoffer, Ramesh Venkataraman and Heikki Topi | 12 | 2015 |
| Project | Database Systems: A Practical Approach to Design, Implementation, and Management | Thomas M. Connolly, Carolyn E. Begg | 6 | 2015 |
| Practice | Fundamentals of SQL Programming | R. A. Mata-Toledo and P. Cushman (Schaum’s) | 1 | 2000 |
| Practice | Readings in Database Systems (The Red Book) | Hellerstein, Joseph, and Michael Stonebraker | 4 | 2005 |

Curriculum: Findings of Reviewed Literature

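| Objective | Findings | References | Topic(s)/Curricula | Standard bodies |
|---|---|---|---|---|
| Recommendations and revisions | Proposed variations based on the scope in the region | (Abourezq & Idrissi) | • Big Data, SQL • Computer Science Curricula | CS 2008 |
| Recommendations and revisions | Proposed variations based on the scope in the region | (Bhogal et al.) | • Big Data • Computer Science/Engineering Curriculum | CS 2008/CE 2004 |
| Recommendations and revisions | Proposed variations based on the scope in the region | (Mingyu et al.) | • Big Data, NoSQL • Computer Science Curricula | CS 2013 |
| Recommendations and revisions | Proposed variations based on the scope in the region | (Picciano) | • Big Data • Computer Science Curricula | CS 2008 |
| Recommendations and revisions | Proposed variations based on the scope in the region | (Silva et al.) | • Big Data, MapReduce, NoSQL, and NewSQL • Computer Science Curricula | CS 2013 |
| Recommendations and revisions | Proposed variations based on the scope in the region | (Calero et al.) | • Database Design, Database Administration, Database Application • SWEBOK, DBBOK | N/A |
| Recommendations and revisions | Proposed variations based on the scope in the region | (Conklin & Heinrichs) | • Database theory and database practice • Computer Science Curricula | • IS 2002 • CC2001 • CC2004 |
| Recommendations and revisions | Proposed variations based on the scope in the region | (Zhanquan et al.) | • Database principles design • Coursera, Udacity, edX | N/A |
| Recommendations and revisions | Proposed variations based on the scope in the region | (Robbert & Ricardo) | • Data Models, Physical Data Design, SQL • Computer Science Curricula | CC 2001 |
| Recommendations and revisions | Proposed variations based on the scope in the region | (Luo et al.) | • SQL Server and Oracle • Computer Science Curricula | CC 2004 |
| Recommendations and revisions | Proposed variations based on the scope in the region | (Dietrich & Urban) | • Object oriented database (OODB) systems; object relational database (ORDB) systems • Curriculum and Laboratory Improvement Educational Materials Development (CCLI EMD) | N/A |
| Recommendations and revisions | Proposed variations based on the scope in the region | (Marshall) | • Data Models, Physical Data Design, Database Schema and Design, SQL • CS-BoK | N/A |
| Recommendations and revisions | Proposed variations based on the educational background of the students | (Dean & Milani) | • SQL • Computer Science Curricula | ACM/IEEE Computing Curricula |
| Recommendations and revisions | Proposed variations based on the educational background of the students | (Dietrich et al.) | • Relational Databases • Computer Science Curricula | CC 2008 |
| Recommendations and revisions | Proposed variations based on the educational background of the students | (Urban & Dietrich) | • Relational algebra, Relational calculus, and SQL • Engineering Curriculum 2000 | CC 2001 |
| Recommendations and revisions | Proposed variations based on the educational background of the students | (Andersson et al.) | • ER Model, Relational Model, SQL • Engineering Curriculum | CE 2000 |
| Relating Curriculum to assessment | Proposed variations based on the assessment methods | (Abid et al.) | • Data Models, Physical Data Design, Database Schema and Design, SQL • Computer Science Curricula | CS 2008 |
| Relating Curriculum to assessment | Proposed variations based on the assessment methods | (Adams et al.) | • ER, EER, and UML • Computer Science Curricula | CC 2001 |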

RQ.2 Evolution of DSE research

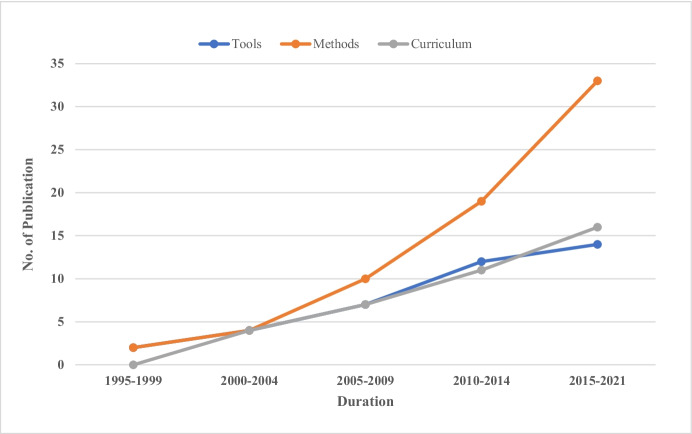

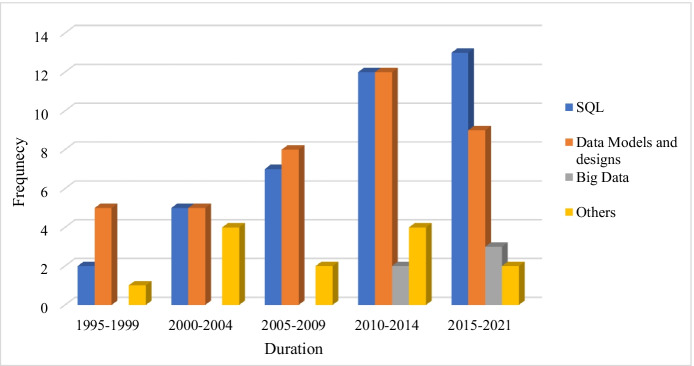

This section discusses the evolution of databases, focusing on DSE over the past 25 years, as shown in Fig. 6.

Evolution of DSE studies

This study shows that there is a significant increase in DSE research after 2004, with 78% of the selected papers published after 2004. The main reason for this outcome is that some of the papers are published in well-recognized channels such as IEEE Transactions on Education, ACM Transactions on Computing Education, the International Conference on Computer Science and Education (ICCSE), and the Teaching, Learning and Assessment of Database (TLAD) workshop. It is also evident that several of these papers were published before 2004, and only a few articles were published during the late 1990s. This is because DSE started to gain interest after the introduction of the Body of Knowledge and DSE standards. Data-intensive scientific discovery has been discussed as the fourth paradigm (Hey et al., 2009): the first involves empirical science and observations; the second, theoretical science and mathematically driven insights; the third, computational science and simulation-driven insights; and the fourth, the data-driven insights of modern scientific research.

Over the past few decades, students have gone from attending one-room classes to having the world at their fingertips, and it is a great challenge for instructors to develop students' interest in learning databases. This challenge has led to the development of different types of interactive tools to help instructors teach DSE in this technology-oriented era. Given the importance of interactive tools in DSE, various authors have proposed such tools over the years. During 1995–2003, some studies (Abut & Ozturk, 1997; Mcintyre et al., 1995) introduced state-of-the-art interactive tools to teach and to enhance collaborative learning among students. Similarly, during 2004–2005 more interactive tools were proposed in the field of DSE: Pahl et al. (2004) and Connolly et al. (2005) introduced a multimedia-system-based interactive model and a game-based collaborative learning environment.

The Internet became more common in the first decade of the twenty-first century, and its positive impact on the education sector was undeniable. Cost effectiveness, student-teacher and peer interaction, and staying in touch with the latest information were the main reasons that led instructors to employ web-based tools to teach databases. Due to this spike in the demand for web-based tools, authors also started to introduce new instruments to assist with teaching databases. Regueras et al. (2007) proposed an e-learning tool named QUEST with a feedback module to help students learn from their mistakes. Similarly, in 2010, multiple authors proposed and evaluated various web-based tools. Cvetanovic et al. (2010) proposed ADVICE, which has the functionality to monitor students' progress, while Wang et al. (2010) proposed Metube, which is a variation of YouTube. Furthermore, Nelson and Fatimazahra (2010) evaluated different web-based tools to highlight the complexities of using these web-based instruments.

Technology has changed teaching methods in the education sector, but technology cannot replace teachers, and despite the amount of time most students spend online, virtual learning will never recreate the teacher-student bond. In the modern era, innovation in the technology used in the education sector is not meant to replace instructors or teaching methods.

During the 1990s, some studies (Dietrich & Urban, 1996; Urban & Dietrich, 1997) proposed learning and teaching methods, respectively, with the evolving technology in view. The highlight of their work was project deliverables and assignments in which students advanced step by step, starting from a tutorial exercise and then attempting more difficult extensions of the assignment.

During 2002–2007, various authors discussed a number of teaching and learning methods to keep pace with ever-changing database technology. For example, Connolly and Begg (2006) proposed a constructive approach to teaching database analysis and design. Similarly, Prince and Felder (2006) reviewed the effectiveness of inquiry learning, problem-based learning, project-based learning, case-based teaching, discovery learning, and just-in-time teaching. Also, Mcintyre et al. (1995) brought to light the impact of the convergence of the European Union (EU) on different universities across Europe. They suggested a reconstruction of teaching and learning methodologies in order to teach databases effectively.