UPDATED Sep. 12, 2024, at 3:11 PM

538’s Pollster Ratings

Based on the historical track record and methodological transparency of each polling firm’s polls.

538’s pollster ratings are calculated by analyzing the historical accuracy and methodological transparency of each polling organization’s polls. We define accuracy as the average adjusted error and bias of a pollster’s surveys. Error and bias scores are adjusted for polls’ sample sizes, how far before the election they were conducted, the type of election polled, the polls’ performance relative to other polls surveying the same race, and other factors. Transparency scores are based on our accounting of how much information organizations release about how each of their polls was conducted.
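
To make the two accuracy ingredients concrete, here is a minimal Python sketch of unadjusted error and bias. It assumes, for illustration, that both are measured on the margin between the top two candidates; the poll numbers are hypothetical, and none of 538’s adjustments (sample size, timing, election type, comparison with other polls of the same race) are reproduced.

```python
# Hypothetical polls of one race: each value is the poll's margin
# (candidate A minus candidate B, in percentage points).
poll_margins = [5.0, 2.0, -1.0]
actual_margin = 1.0  # hypothetical final result, A minus B


def raw_error(margins, actual):
    """Mean absolute miss on the margin, ignoring direction."""
    return sum(abs(m - actual) for m in margins) / len(margins)


def raw_bias(margins, actual):
    """Mean signed miss: positive means the polls overstated candidate A."""
    return sum(m - actual for m in margins) / len(margins)


print(round(raw_error(poll_margins, actual_margin), 2))  # 2.33
print(raw_bias(poll_margins, actual_margin))  # 1.0
```

The distinction matters: a pollster can have a sizable average error yet little bias if its misses point in both directions, while a consistent lean shows up in the bias term.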

Download this data on GitHub.

Design and development by Aaron Bycoffe. Research by Mary Radcliffe, Cooper Burton and Dhrumil Mehta. Statistical model by G. Elliott Morris. Transparency Score initially developed by Mark Blumenthal. Quantitative editing by Holly Fuong. Story editing by Nathaniel Rakich. Copy editing by Alex Kimball.

Question: When a research company polls residents about their voting intentions, new Canadians are under-represented. This is an example of:

- non-response bias

- response bias

- sampling bias

- measurement bias

The first option (non-response bias) is correct.

Research 101: How to Read a Poll

Polls offer cold, hard data, but that data is open to a lot of interpretation. Context, including where you get your poll results, shapes how a poll is analyzed, and any single source may not tell the whole story.

You may have a news source that you trust to interpret polls for you, but what if you want to look at the data yourself?

Reading polling results doesn’t have to feel like such a mysterious process. Once you know what to look out for, polls can empower you to make more informed decisions. Here, we provide some guidelines for assessing and getting the most out of polling results.

Our tips fall into three categories: first, some basics about polls in general; second, what to keep in mind when reading a specific survey; and lastly, how to think about polls in the aggregate.

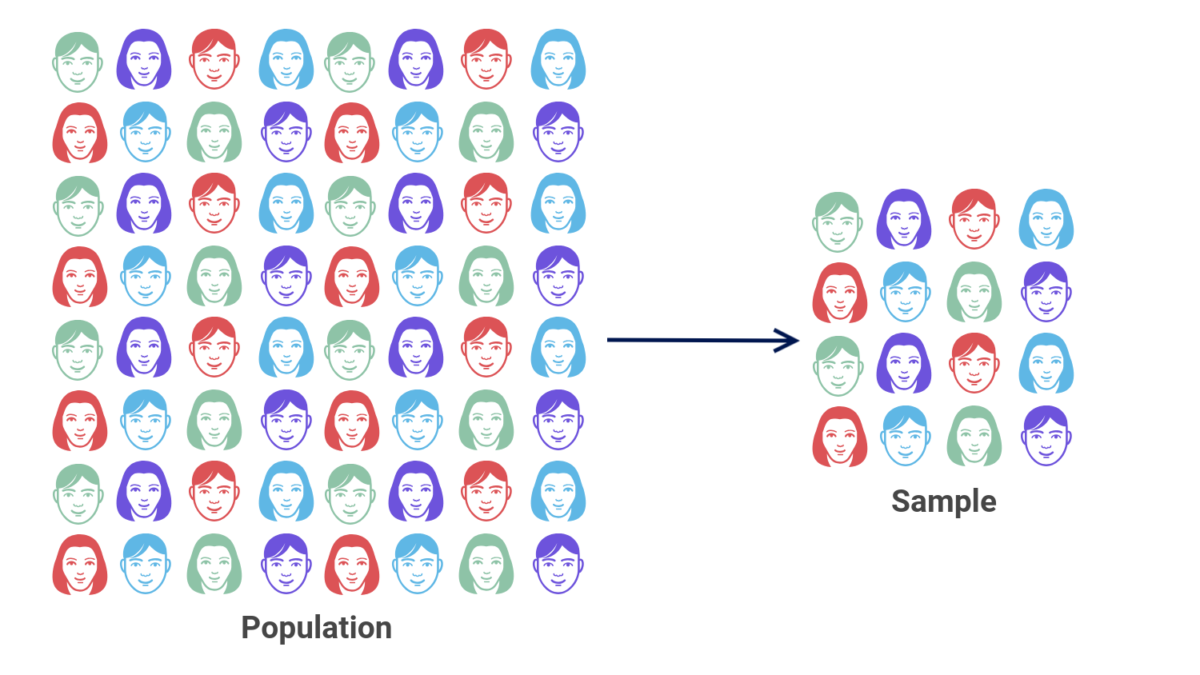

Sample: In polling, a “sample” refers to a subset of a larger population that is selected to represent the whole population’s views or characteristics. Pollsters use samples because it is often impractical or too expensive to survey an entire population. Instead, by surveying a carefully chosen sample, they can make reasonable inferences about the opinions, behaviors, or demographics of the larger population.

Sample Size: Statistics can tell us how close the responses from a sample are likely to be to those of the broader population a poll is trying to understand. While proper sampling techniques are critical to obtaining a representative sample, sample size is another factor that determines how precise a poll is. A larger sample generally produces more precise and reliable estimates, while a smaller sample produces less precise ones and is more likely, by chance, to be unrepresentative. A larger sample does not, however, fix a sample that was drawn in a biased way. Larger samples are particularly important when looking at subgroups and crosstabs, so that the number of respondents in each category does not become too small to support reasonable conclusions.
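
The link between sample size and precision can be sketched with the textbook 95% margin of error for a proportion, z·√(p(1−p)/n). This minimal Python example assumes a simple random sample and ignores the extra variance that weighting adds in real polls; the subgroup size of 150 is a hypothetical example.

```python
import math


def margin_of_error(p, n, z=1.96):
    """Approximate 95% margin of error for a proportion p estimated
    from a simple random sample of n respondents (normal approximation)."""
    return z * math.sqrt(p * (1 - p) / n)


# p = 0.5 gives the largest (most conservative) margin of error.
full_sample = margin_of_error(0.5, 1000)
subgroup = margin_of_error(0.5, 150)  # hypothetical: one age group within the poll

print(f"n=1000: +/- {full_sample * 100:.1f} points")  # +/- 3.1 points
print(f"n=150:  +/- {subgroup * 100:.1f} points")     # +/- 8.0 points
```

Because n sits under a square root, quadrupling the sample only halves the margin of error, which is why subgroup estimates in crosstabs are so much noisier than the topline.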

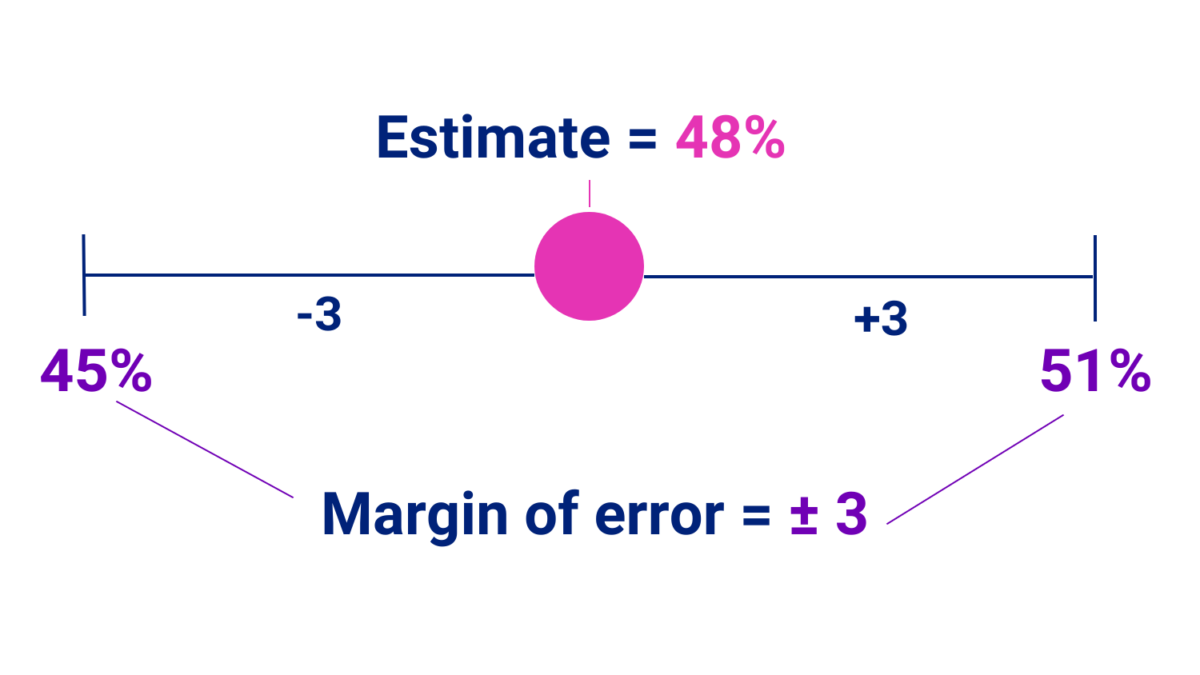

Margin of Error: The margin of error reported by a pollster accounts for the variability around your estimates, whether that’s a candidate’s level of support or the answer to a public opinion question. It represents the range within which the true population percentage is likely to fall. A smaller margin of error means there is a smaller range within which your estimates will fall if you were to sample repeatedly. Pew Research Center does a great job explaining the margin of error and its role when interpreting results: “A margin of error of plus or minus three percentage points at the 95% confidence level means that if we fielded the same survey 100 times, we would expect the result to be within three percentage points of the true population value 95 of those times.”
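
The repeated-sampling interpretation in the Pew quote can be checked by simulation. This sketch assumes a hypothetical true support level of 50% and samples of 1,067 respondents (which gives a margin of error of about 3 points), then counts how often a simulated poll lands within that margin of the true value.

```python
import math
import random


def coverage(p_true=0.5, n=1067, trials=2000, seed=42):
    """Simulate many polls and return the share whose estimate falls
    within the 95% margin of error of the true population value."""
    rng = random.Random(seed)
    moe = 1.96 * math.sqrt(p_true * (1 - p_true) / n)  # about 0.03 for n=1067
    hits = 0
    for _ in range(trials):
        # Each respondent independently supports the option with probability p_true.
        support = sum(rng.random() < p_true for _ in range(n))
        if abs(support / n - p_true) <= moe:
            hits += 1
    return hits / trials


print(coverage())  # should be close to 0.95
```

Note that this only captures sampling error: if the sample itself is skewed, every simulated poll would miss in the same direction and the margin of error would not warn you.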

Sample weighting: Weighting is a procedure in which pollsters compare who did and did not respond to a survey with what they know the population looks like. If some types of people are underrepresented among respondents, pollsters can give those respondents’ answers a little more weight so that the sample better represents the full population. Conversely, if some groups are overrepresented, responses from members of those groups can be down-weighted.
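
Here is a minimal sketch of weighting on a single variable (often called cell weighting or post-stratification), where each group’s weight is its population share divided by its sample share. All numbers are hypothetical, and real polls usually weight on several variables at once, for example with raking.

```python
# Hypothetical survey: 600 women and 400 men responded, but the population
# (assumed known, e.g. from census data) is 51% women and 49% men.
sample_counts = {"women": 600, "men": 400}
population_shares = {"women": 0.51, "men": 0.49}
support_rate = {"women": 0.55, "men": 0.40}  # share backing some policy


def cell_weights(counts, targets):
    """Weight for each group = population share / sample share."""
    total = sum(counts.values())
    return {g: targets[g] / (counts[g] / total) for g in counts}


def estimate(counts, rates, weights=None):
    """Overall support, optionally weighting each group's respondents."""
    weights = weights or {g: 1.0 for g in counts}
    weighted_n = sum(counts[g] * weights[g] for g in counts)
    weighted_yes = sum(counts[g] * weights[g] * rates[g] for g in counts)
    return weighted_yes / weighted_n


w = cell_weights(sample_counts, population_shares)
print(w)  # women about 0.85 (down-weighted), men about 1.225 (up-weighted)
print(round(estimate(sample_counts, support_rate), 4))     # 0.49 unweighted
print(round(estimate(sample_counts, support_rate, w), 4))  # 0.4765 weighted
```

Weighting only corrects for the variables it uses: if men who respond differ from men who don’t in ways gender does not capture, the weighted estimate can still be off.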

Weighting is also often used to build a representative sample of a population whose characteristics are not precisely known, such as likely voters. Here, pollsters’ skill at generating weights varies quite a bit, because it is hard to choose demographic or behavioral weights that yield a representative sample. For example, pollsters could combine reported voting intention, voter file history, and weights for race, education level, and gender to try to represent the people who are likely to vote in an upcoming election. In one experiment, four different pollsters asked to estimate election results from the same poll produced estimates spanning five points, due to subtle differences in how they tried to identify likely voters. 1
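
The sketch below shows how the choice of likely-voter rule alone can move a result. The ten respondents and the three rules (a stated-likelihood cutoff, past vote history, and weighting by stated likelihood) are hypothetical illustrations, not any pollster’s actual method.

```python
# Hypothetical respondents: (candidate, self-reported chance of voting 0-10,
# voted in the previous election?)
respondents = [
    ("A", 10, True), ("A", 9, False), ("A", 6, False), ("A", 5, False),
    ("A", 10, True), ("B", 10, True), ("B", 10, True), ("B", 8, True),
    ("B", 4, False), ("B", 7, False),
]


def share_for_a(people, weight):
    """Candidate A's share of the two-candidate vote under a weighting rule."""
    total = sum(weight(p) for p in people)
    a_total = sum(weight(p) for p in people if p[0] == "A")
    return a_total / total


# Rule 1: count only people who rate their chance of voting 9 or 10.
cutoff = share_for_a(respondents, lambda p: 1.0 if p[1] >= 9 else 0.0)
# Rule 2: count only people who voted last time (e.g. per a voter file).
history = share_for_a(respondents, lambda p: 1.0 if p[2] else 0.0)
# Rule 3: weight everyone by their stated chance of voting.
prob = share_for_a(respondents, lambda p: p[1] / 10)

print(f"{cutoff:.1%} {history:.1%} {prob:.1%}")  # 60.0% 40.0% 50.6%
```

Each rule is defensible on its own, which is why identical raw data can yield noticeably different toplines.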

Cross-Tabulations: Some polls provide results broken out by demographics (age, gender, location) or other variables in the data. Analyzing these “crosstabs” can give you a more detailed understanding of who supports what, and they are often essential for fully understanding a poll’s results. For example, where a topline might say that 51% of all registered voters support a particular candidate, a crosstab by age might show that only 20% of 18- to 29-year-olds support the candidate while 75% of registered voters age 65 and up do. In this case, the crosstab by age provides important insights that the topline on its own obscures. If a poll’s results are presented with toplines but no crosstabs, ask yourself what the crosstabs might reveal and why they are not being made public.
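
A topline is just the crosstab cells pooled together. This sketch uses hypothetical counts for 1,000 registered voters, chosen so the age groups match the 20% and 75% figures above and still add up to a 51% topline.

```python
# Hypothetical poll of 1,000 registered voters, broken out by age.
crosstab = {
    # age group: (respondents, supporters of the candidate)
    "18-29": (200, 40),
    "30-64": (500, 245),
    "65+": (300, 225),
}

for group, (n, yes) in crosstab.items():
    print(f"{group}: {yes / n:.0%} of {n}")

total_n = sum(n for n, _ in crosstab.values())
total_yes = sum(yes for _, yes in crosstab.values())
print(f"Topline: {total_yes / total_n:.0%}")  # Topline: 51%
```

The same 51% could come from very different age splits, which is exactly the information a topline-only release hides.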

Things to consider when reading a specific poll in a memo or the news

- Who conducted the poll? Not all pollsters are created equal. Keep in mind who produced a poll when you read it, because pollsters’ decisions about sampling techniques, weighting, and coding the results can lead to big differences in the results they get. Is the pollster a public government organization? A private polling firm? A newspaper? Looking at who conducted the poll may give you clues as to whom the poll might be inclined to favor (or oppose). However, who fielded a poll (and their partisan bias, if any) is not always immediately apparent, because many polling firms conduct polls for other groups. Moreover, even a pollster with no partisan bias can be consistently inaccurate because of various quality control issues. FiveThirtyEight maintains pollster ratings for most major U.S. pollsters, which are based on historical polling accuracy and adjusted for factors like the type of election, sample size, and the performance of other polls. These ratings can be helpful when assessing the quality of polling sources.

- When was the poll conducted? Remember, polls are snapshots in time. Always check when a poll was fielded and consider any major events just before the survey went out that may have affected the results. Similarly, consider what has happened since the poll came out of the field and how that could have shifted public opinion. For example, early summer 2022 polling foresaw a Republican “red wave” in the midterms that failed to appear because the Supreme Court’s decision to overturn Roe v. Wade gave Democratic voters a new sense of urgency.

- How was the poll conducted? Did the poll call voters on landlines at home or on their cell phones? Did it use online questionnaires? A mix of methods? Live-caller polling had long been the gold standard, but as internet access expanded, so did the use of online polls. The polling method matters, and a given method’s effect keeps evolving. For example, in 2012, FiveThirtyEight found that online polls performed slightly better than telephone polls overall. However, in 2016, it found that Hillary Clinton’s lead was larger in live-interview polls than in non-live polls. Of course, the method only matters insofar as it affects the sample’s representativeness, but even polling researchers still lack a clear understanding of how the quality of online samples compares with phone-based samples. Moreover, sample quality can vary from pollster to pollster based on many factors that may or may not be related to the polling method.

- Who was polled? A general U.S. poll typically surveys the general population, registered voters, or likely voters. The group polled can have a significant impact on results, and comparing polls with different target populations can be very tricky. Voters are generally older and whiter than the population as a whole, and thus more Republican. Meanwhile, about 40% of Americans are not registered to vote. As a result, the choice of which group to poll, and how tightly to restrict the sample, can skew the results.

- What questions were asked, and how were they framed? Do not just look at a poll’s results; look at how the questions were asked. In 2022, Public Wise conducted a poll experiment in which respondents were asked their thoughts on President Joe Biden’s decision to nominate a Black woman to the Supreme Court. Among other things, the survey found that how a question is phrased, and the context it does (or does not) provide, can have an enormous impact on responses. Some “polls” are not genuine polls at all. Polling experts use the term “push polls” for calls designed to influence rather than measure respondents’ attitudes and beliefs, by posing loaded questions that contain negative information about an opposing candidate or issue. Question wording can also be key to understanding why different polls may find quite different results on what seems to be the same topic.

- What is the poll trying to estimate? Polls are used for many tasks, such as estimating public opinion on a given issue, predicting election outcomes, or trying to understand whether a specific action or event affected people’s views or behaviors. These uses require different levels of precision. A public opinion poll can tolerate a larger margin of error without changing our overall conclusions in a meaningful way. For example, a 5-point difference in either direction on a question about gun control likely doesn’t change the general conclusion that a large share of the population favors more gun control. But when estimating the outcome of a tight political race, where a candidate might win by a few percentage points, a much tighter margin of error matters.
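
Why tight races demand more precision: the margin of error on a candidate’s lead is larger than the margin on either candidate’s share, because both shares come from the same sample and move against each other. This sketch assumes simple random sampling and a hypothetical 48% to 45% race among 1,000 respondents.

```python
import math


def moe_share(p, n, z=1.96):
    """95% margin of error for one candidate's share (simple random sample)."""
    return z * math.sqrt(p * (1 - p) / n)


def moe_lead(p1, p2, n, z=1.96):
    """95% margin of error for the lead p1 - p2 when both shares come from
    the same sample, using Var = (p1 + p2 - (p1 - p2)**2) / n."""
    return z * math.sqrt((p1 + p2 - (p1 - p2) ** 2) / n)


n = 1000
print(f"{moe_share(0.48, n) * 100:.1f}")       # 3.1 points on one candidate's share
print(f"{moe_lead(0.48, 0.45, n) * 100:.1f}")  # 6.0 points on a 3-point lead
```

Under these assumptions, a 3-point lead is well inside the roughly 6-point uncertainty on the lead, even though the reported margin of error is only about 3 points.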

How to Look at Polling in the Aggregate

In any given election cycle or when you are focused on a specific issue campaign, you will often be flooded with polls and surveys for months on end. This advice focuses on how to think about longer trends and things that can impact the polling ecosystem at large.

Things to consider when reading polls more generally:

- Look at a variety of polls from a variety of sources. A sample of one is not a particularly helpful sample, whether you are conducting a poll or reading polls. Looking at a large selection of polls from many different sources will give you a better understanding of the landscape as a whole. Pollsters make different decisions when conducting a poll and interpreting its results, as the New York Times demonstrated when it gave four pollsters the same raw data and got four different sets of results.

- Outliers are tricky. Sometimes polls produce unusual or extreme results that don’t align with other polls. It’s important to be cautious about such outliers, and paying attention to whether the pollster has a reliable reputation can help you decide how much weight to give them. In the opposite vein, the phenomenon known as “pollster herding” can also lead you astray. Pollsters are often disinclined to publish polls that deviate too much from what others are putting out, for fear of being wrong, so they might hold back outlier polls. But sometimes outlier polls are not outliers at all; they are accurate. That was the case in the 2014 Virginia Senate race, when pollsters with outlier results decided not to publish their findings in the campaign’s final stretch. Conversely, in 2020, Ann Selzer of the Iowa Poll released results in the run-up to the general election that put President Donald Trump 7 points up in Iowa. These results were a departure from the tighter race other polls had been showing in Iowa, and Selzer faced a great deal of blowback for releasing what was considered an “outlier” poll. When all was said and done, Selzer was right: Trump won Iowa by 8 points.
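
A simple way to act on both tips is to average many polls while flagging, not discarding, the ones far from the pack. The margins and the 5-point threshold below are hypothetical; as the Selzer example shows, the flagged poll may turn out to be the accurate one, and herding cannot be detected this way at all.

```python
import statistics

# Hypothetical final-week polls of one race: margin for candidate A, in points.
margins = [2, 3, 1, 4, 2, 9]

avg = statistics.mean(margins)
mid = statistics.median(margins)
# Flag polls far from the median for closer scrutiny; the threshold is arbitrary.
flagged = [m for m in margins if abs(m - mid) > 5]
avg_without = statistics.mean([m for m in margins if m not in flagged])

print(avg, mid, flagged, avg_without)  # 3.5 2.5 [9] 2.4
```

The median is used as the reference point because, unlike the mean, a single extreme poll barely moves it.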

In sum, there is a long list of things to consider when reading a poll. Whether the poll is looking at public opinion in a single state or is being used as part of a larger model to predict a presidential election outcome, it is easy to lose sight of the forest for the trees. These tips will help you make the most of polls this primary and general election season.

1 Sometimes samples will also include oversamples of certain groups to ensure they have enough respondents to do subgroup analyses. When a group is oversampled, it means their share of the sample is larger than the share of the population the sample is supposed to represent.

Numbers, Facts and Trends Shaping Your World

U.S. Surveys

Pew Research Center has deep roots in U.S. public opinion research. Launched in the early 1990s as a project focused primarily on U.S. policy and politics, the Center has grown over time to study a wide range of topics vital to explaining America to itself and to the world. Our hallmarks are a rigorous approach to methodological quality, complete transparency about our methods, and a commitment to exploring and evaluating ongoing developments in data collection. All data that we publish is accompanied by a detailed methods report.

The American Trends Panel

From the 1980s until relatively recently, most national polling organizations conducted surveys by telephone, relying on live interviewers to call randomly selected Americans across the country. Then came the internet. While it took survey researchers some time to adapt to the idea of online surveys, a quick look at the public polls on an issue like presidential approval reveals a landscape now dominated by online polls rather than phone polls.

Most of our U.S. surveys are conducted on the American Trends Panel (ATP), Pew Research Center’s national survey panel of about 10,000 randomly selected U.S. adults. ATP participants are recruited offline using random sampling from the U.S. Postal Service’s residential address file , and respondents are reimbursed for their time. Most panelists complete the surveys online using smartphones, tablets or desktop devices. Non-internet and internet-averse panelists are called by an interviewer to complete surveys on the phone. Learn more about how people in the U.S. take Pew Research Center surveys.
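
The core idea behind recruiting from an address file can be sketched as a simple random sample in which every address has the same chance of selection. The frame here is a hypothetical list of 100,000 placeholder addresses; the actual ATP recruitment design is not described in this section and may be more complex (for example, stratified).

```python
import random

# Hypothetical sampling frame standing in for a residential address file.
frame = [f"address-{i}" for i in range(100_000)]


def draw_sample(frame, n, seed=None):
    """Simple random sample of n addresses, without replacement."""
    return random.Random(seed).sample(frame, n)


sample = draw_sample(frame, 500, seed=1)
selection_prob = len(sample) / len(frame)  # same chance for every address
base_weight = 1 / selection_prob           # each sampled address stands for 200

print(len(set(sample)), selection_prob, base_weight)  # 500 0.005 200.0
```

The base weight (the inverse of the selection probability) is the usual starting point that later weighting adjustments build on.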

Methods 101

Our video series helps explain the fundamental concepts of survey research, including random sampling, question wording, mode effects, nonprobability surveys and how polling is done around the world.

The Center also conducts custom surveys of special populations (e.g., Muslim Americans, Jewish Americans, Black Americans, Hispanic Americans, teenagers) that are not readily studied using national, general population sampling. The Center’s survey research is sometimes paired with demographic or organic data to provide new insights. In addition to our U.S. survey research, you can also read more details on our international survey research, our demographic research and our data science methods.

Our survey researchers are committed to contributing to the larger community of survey research professionals and are active in the American Association for Public Opinion Research (AAPOR), and the Center is a charter member of the AAPOR Transparency Initiative.

Frequently asked questions about surveys

- Why am I never asked to take a poll?

- Can I volunteer to be polled?

- Why should I participate in surveys?

- What good are polls?

- Do pollsters have a code of ethics? If so, what is in the code?

- How are your surveys different from market research?

- How are people selected for your polls?

- Do people lie to pollsters?

- How can I tell a high-quality poll from a lower-quality one?

- How can a small sample of 1,000 (or even 10,000) accurately represent the views of 250,000,000+ Americans?

- Do your surveys include people who are offline?
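
One standard way to see why 1,000 respondents can represent 250 million people is the finite population correction, which scales the margin of error by √((N − n)/(N − 1)). This sketch assumes simple random sampling; the population sizes are illustrative.

```python
import math


def moe_with_fpc(p, n, population, z=1.96):
    """95% margin of error for a proportion, including the finite
    population correction sqrt((N - n) / (N - 1))."""
    fpc = math.sqrt((population - n) / (population - 1))
    return z * math.sqrt(p * (1 - p) / n) * fpc


for population in (10_000, 1_000_000, 250_000_000):
    moe = moe_with_fpc(0.5, 1000, population)
    print(f"N={population:>11,}: +/- {moe * 100:.2f} points")
```

With these inputs the margin is about 2.94 points when N is 10,000 and about 3.10 points for both larger populations: once the population is much bigger than the sample, precision depends almost entirely on the sample size, not the population size.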

Reports on the state of polling

- Key Things to Know about Election Polling in the United States

- A Field Guide to Polling: 2020 Edition

- Confronting 2016 and 2020 Polling Limitations

- What 2020’s Election Poll Errors Tell Us About the Accuracy of Issue Polling

- Q&A: After misses in 2016 and 2020, does polling need to be fixed again? What our survey experts say

- Understanding how 2020 election polls performed and what it might mean for other kinds of survey work

- Can We Still Trust Polls?

- Political Polls and the 2016 Election

- Flashpoints in Polling: 2016

901 E St. NW, Suite 300 Washington, DC 20004 USA (+1) 202-419-4300 | Main (+1) 202-857-8562 | Fax (+1) 202-419-4372 | Media Inquiries

ABOUT PEW RESEARCH CENTER Pew Research Center is a nonpartisan, nonadvocacy fact tank that informs the public about the issues, attitudes and trends shaping the world. It does not take policy positions. The Center conducts public opinion polling, demographic research, computational social science research and other data-driven research. Pew Research Center is a subsidiary of The Pew Charitable Trusts, its primary funder.

© 2024 Pew Research Center

How do polls work? What are the different kinds of polls? And what should you look for in a high-quality opinion poll? A Pew Research Center survey methodologist answers these questions and more in six short, easy to read lessons.

Why am I never asked to take a poll? You have roughly the same chance of being polled as anyone else living in the United States. This chance, however, is only about 1 in 26,000 for a typical Pew Research Center survey.
