Ten simple rules for tackling your first mathematical models: A guide for graduate students by graduate students

Affiliations Department of Biological Sciences, University of Toronto Scarborough, Toronto, Ontario, Canada, Department of Ecology and Evolution, University of Toronto, Toronto, Ontario, Canada

Affiliation Department of Ecology and Evolution, University of Toronto, Toronto, Ontario, Canada

Affiliation Department of Physical and Environmental Sciences, University of Toronto Scarborough, Toronto, Ontario, Canada

Affiliation Department of Biology, Memorial University of Newfoundland, St John’s, Newfoundland, Canada

- Korryn Bodner,

- Chris Brimacombe,

- Emily S. Chenery,

- Ariel Greiner,

- Anne M. McLeod,

- Stephanie R. Penk,

- Juan S. Vargas Soto

Published: January 14, 2021

- https://doi.org/10.1371/journal.pcbi.1008539

Citation: Bodner K, Brimacombe C, Chenery ES, Greiner A, McLeod AM, Penk SR, et al. (2021) Ten simple rules for tackling your first mathematical models: A guide for graduate students by graduate students. PLoS Comput Biol 17(1): e1008539. https://doi.org/10.1371/journal.pcbi.1008539

Editor: Scott Markel, Dassault Systemes BIOVIA, UNITED STATES

Copyright: © 2021 Bodner et al. This is an open access article distributed under the terms of the Creative Commons Attribution License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Funding: The authors received no specific funding for this work.

Competing interests: The authors have declared that no competing interests exist.

Introduction

Biologists spend their time studying the natural world, seeking to understand its various patterns and the processes that give rise to them. One way of furthering our understanding of natural phenomena is through laboratory or field experiments, examining the effects of changing one, or several, variables on a measured response. Alternatively, one may conduct an observational study, collecting field data and comparing a measured response along natural gradients. A third and complementary way of understanding natural phenomena is through mathematical models. In the life sciences, more scientists are incorporating these quantitative methods into their research. Given the vast utility of mathematical models, ranging from providing qualitative predictions to helping disentangle multiple causation (see Hurford [ 1 ] for a more complete list), their increased adoption is unsurprising. However, getting started with mathematical models may be quite daunting for those with traditional biological training, as in addition to understanding new terminology (e.g., “Jacobian matrix,” “Markov chain”), one may also have to adopt a different way of thinking and master a new set of skills.

Here, we present 10 simple rules for tackling your first mathematical models. While many of these rules are applicable to basic scientific research, our discussion relates explicitly to the process of model-building within ecological and epidemiological contexts using dynamical models. However, many of the suggestions outlined below generalize beyond these disciplines and are applicable to nondynamic models such as statistical models and machine-learning algorithms. As graduate students ourselves, we have created rules we wish we had internalized before beginning our model-building journey—a guide by graduate students, for graduate students—and we hope they prove insightful for anyone seeking to begin their own adventures in mathematical modelling.

Fig 1. Boxes represent susceptible, infected, and recovered compartments, and directed arrows represent the flow of individuals between these compartments with the rate of flow being controlled by the contact rate, c, the probability of infection, γ, and the recovery rate, θ.

https://doi.org/10.1371/journal.pcbi.1008539.g001

Rule 1: Know your question

“All models are wrong, some are useful” is a common aphorism, generally attributed to statistician George Box, but determining which models are useful depends on the question being asked. The practice of clearly defining a research question is often drilled into aspiring researchers in the context of selecting an appropriate research design, interpreting statistical results, or outlining a research paper. Similarly, defining a clear research question is important for mathematical models, as their results are only as interesting as the questions that motivate them [5]. The question defines the model’s main purpose and, in all cases, should extend past the goal of merely building a model for a system (the question can even determine whether a model is necessary at all). Ultimately, the model should provide an answer to the research question that has been proposed.

When the research question is used to inform the purpose of the model, it also informs the model’s structure. Given that models can be modified in countless ways, providing a purpose to the model can highlight why certain aspects of reality were included in the structure while others were ignored [6]. For example, when deciding whether we should adopt a more realistic model (i.e., add more complexity), we can ask whether we are trying to inform general theory or whether we are trying to model a response in a specific system. Suppose we are trying to predict how fast an epidemic will grow based on different age-dependent mixing patterns. In this case, we may wish to adapt our basic SIR model to have age-structured compartments if we suspect this factor is important for the disease dynamics. However, if we are exploring a different question, such as how stochasticity influences general SIR dynamics, the age-structured approach would likely be unnecessary. We suggest that one of the first steps in any modelling journey is to choose the processes most relevant to your question (i.e., your hypothesis) and the direct and indirect causal relationships among them: Are the relationships linear, nonlinear, additive, or multiplicative? A good literature review can help with this challenge. Depending on your model purpose, you may also need to spend extra time getting to know your system and/or the data before progressing. Indeed, the more background knowledge you acquire when forming your research question, the more informed your decisions will be when selecting the structure, parameters, and data for your model.

Rule 2: Define multiple appropriate models

Natural phenomena are complicated to study and often impossible to model in their entirety. We are often unsure about the variables or processes required to fully answer our research question(s). For example, we may not know how the possibility of reinfection influences the dynamics of a disease system. In cases such as these, our advice is to produce and sketch out a set of candidate models that consider alternative terms/variables which may be relevant for the phenomena under investigation. As in Fig 2 , we construct 2 models, one that includes the ability for recovered individuals to become infected again, and one that does not. When creating multiple models, our general objective may be to explore how different processes, inputs, or drivers affect an outcome of interest or it may be to find a model or models that best explain a given set of data for an outcome of interest. In our example, if the objective is to determine whether reinfection plays an important role in explaining the patterns of a disease, we can test our SIR candidate models using incidence data to determine which model receives the most empirical support. Here we consider our candidate models to be alternative hypotheses, where the candidate model with the least support is discarded. While our perspective of models as hypotheses is a view shared by researchers such as Hilborn and Mangel [ 7 ], and Penk and colleagues [ 8 ], please note that others such as Oreskes and colleagues [ 9 ] believe that models are not subject to proof and hence disagree with this notion. We encourage modellers who are interested in this debate to read the provided citations.

Fig 2. (A) A susceptible/infected/recovered model where individuals remain immune (gold) and (B) a susceptible/infected/recovered model where individuals can become susceptible again (blue). Arrows indicate the direction of movement between compartments, c is the contact rate, γ is the infection rate given contact, and θ is the recovery rate. The text below each conceptual model gives the hypotheses (H1 and H2) that represent the differences between these 2 SIR models.

https://doi.org/10.1371/journal.pcbi.1008539.g002
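The two candidate models described above can be written down side by side before any fitting is attempted. The following is a minimal sketch, not the authors' code: the function names are hypothetical, the compartments are assumed to be proportions of the population, the transmission rate is passed as the product cγ, and the immunity loss rate ψ is taken from the Fig 4 caption.

```python
def sir_rates(s, i, r, c_gamma, theta):
    """Model A (H1): recovered individuals remain immune.

    Returns the rates of change (dS/dt, dI/dt, dR/dt).
    """
    new_infections = c_gamma * i * s   # flow from S to I
    recoveries = theta * i             # flow from I to R
    return (-new_infections, new_infections - recoveries, recoveries)


def sirs_rates(s, i, r, c_gamma, theta, psi):
    """Model B (H2): recovered individuals lose immunity at rate psi.

    Returns the rates of change (dS/dt, dI/dt, dR/dt).
    """
    new_infections = c_gamma * i * s   # flow from S to I
    recoveries = theta * i             # flow from I to R
    immunity_loss = psi * r            # flow from R back to S
    return (immunity_loss - new_infections,
            new_infections - recoveries,
            recoveries - immunity_loss)


# Illustrative state and parameter values (assumed, not fitted to data).
state = (0.5, 0.2, 0.3)
rates_a = sir_rates(*state, c_gamma=0.2, theta=0.05)
rates_b = sirs_rates(*state, c_gamma=0.2, theta=0.05, psi=0.01)
print("SIR rates: ", rates_a)
print("SIRS rates:", rates_b)
```

Keeping both candidates as separate functions with the same return shape makes it straightforward to pass either one to the same simulation or fitting routine later.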

Finally, we recognize that time and resource constraints may limit the ability to build multiple models simultaneously; however, even writing down alternative models on paper can be helpful as you can always revisit them if your primary model does not perform as expected. Of course, some candidate models may not be feasible or relevant for your system, but by engaging in the activity of creating multiple models, you will likely have a broader perspective of the potential factors and processes that fundamentally shape your system.

Rule 3: Determine the skills you will need (and how to get them)

Equipping yourself with the necessary analytical tools that form the basis of all quantitative techniques is essential. As Darwin said, those that have knowledge of mathematics seem to be endowed with an extra sense [ 10 ], and having a background in calculus, linear algebra, and statistics can go a long way. Thus, make it a habit to set time for yourself to learn these mathematical skills, and do not treat all your methods like a black box. For instance, if you plan to use ODEs, consider brushing up on your calculus, e.g., using Stewart [ 11 ]. If you are working with a system of ODEs, also read up on linear algebra, e.g., using Poole [ 12 ]. Some universities also offer specialized math biology courses that combine topics from different math courses to teach the essentials of mathematical modelling. Taking these courses can help save time, and if they are not available, their syllabi can help focus your studying. Also note that while narrowing down a useful skillset in the early stages of model-building will likely spare you from some future headaches, as you progress in your project, it is inevitable that new skills will be required. Therefore, we advise you to check in at different stages of your modelling journey to assess the skills that would be most relevant for your next steps and how best to acquire them. Hopefully, these decisions can also be made with the help of your supervisor and/or a modelling mentor. Building these extra skills can at first seem daunting but think of it as an investment that will pay dividends in improving your future modelling work.

When first attempting to tackle a specific problem, find relevant research that accomplishes the same tasks and determine if you understand the processes and techniques that are used in that study. If you do, then you can implement similar techniques and methods, and perhaps introduce new methods. If not, then determine which tools you need to add to your toolbox. For instance, if the problem involves a system of ODEs (e.g., SIR models, see above), can you use existing symbolic software (e.g., Maple, Matlab, Mathematica) to determine the system’s dynamics via a general solution, or is the complexity so great that you will need to create simulations to infer the dynamics? Answering questions like these is key to understanding what skills you will need to work with the model you develop. While there is a time and a place for involving collaborators to help with methods that are beyond your current reach, we strongly advocate that you approach any potential collaborator only after you have gained some knowledge of the methods. Understanding the methodology, or at least its foundation, is not only crucial for making a fruitful collaboration, but also important for your development as a scientist.

Rule 4: Do not reinvent the wheel

While we encourage a thorough understanding of the methods researchers employ, we simultaneously discourage unnecessary effort redoing work that has already been done. Preventing duplication can be ensured by a thorough review of the literature (but note that reproducing original model results can advance your knowledge of how a model functions and lead to new insights in the system). Often, we are working from established theory that provides an existing framework that can be applied to different systems. Adapting these frameworks can help advance your own research while also saving precious time. When digging through articles, bear in mind that most modelling frameworks are not system-specific. Do not be discouraged if you cannot immediately find a model in your field, as the perfect model for your question may have been applied in a different system or be published only as a conceptual model. These models are still useful! Also, do not be shy about reaching out to authors of models that you think may be applicable to your system. Finally, remember that you can be critical of what you find, as some models can be deceptively simple or involve assumptions that you are not comfortable making. You should not reinvent the wheel, but you can always strive to build a better one.

Rule 5: Study and apply good coding practices

The modelling process will inevitably require some degree of programming, and this can quickly become a challenge for some biologists. However, learning to program in languages commonly adopted by the scientific community (e.g., R, Python) can increase the transparency, accessibility, and reproducibility of your models. Even if you only wish to adopt preprogrammed models, you will likely still need to create code of your own that reads in data, applies functions from a collection of packages to analyze the data, and creates some visual output. Programming can be highly rewarding—you are creating something after all—but it can also be one of the most frustrating parts of your research. What follows are 3 suggestions to avoid some of the frustration.

Organization is key, both in your workflow and your written code. Take advantage of existing software and tools that facilitate keeping things organized. For example, computational notebooks like Jupyter notebooks or R-Markdown documents allow you to combine text, commands, and outputs in an easily readable and shareable format. Version control software like Git makes it simple to both keep track of changes as well as to safely explore different model variants via branches without worrying that the original model has been altered. Additionally, integrating with hosting services such as Github allows you to keep your changes safely stored in the cloud. For more details on learning to program, creating reproducible research, programming with Jupyter notebooks, and using Git and Github, see the 10 simple rules by Carey and Papin [ 13 ], Sandve and colleagues [ 14 ], Rule and colleagues [ 15 ], and Perez-Riverol and colleagues [ 16 ], respectively.

Comment your code and comment it well (see Fig 3 ). These comments can be the pseudocode you have written on paper prior to coding. Assume that when you revisit your code weeks, months, or years later, you will have forgotten most of what you did and why you did it. Good commenting can also help others read and use your code, making it a critical part of increasing scientific transparency. It is always good practice to write your comments before you write the code, explaining what the code should do. When coding a function, include a description of its inputs and outputs. We also encourage you to publish your commented model code in repositories such that they are easily accessible to others—not only to get useful feedback for yourself but to provide the modelling foundation for others to build on.

Fig 3. Two functionally identical pieces of R code [17] can look very different without comments (left) and with descriptive comments (right). Writing detailed comments will help you and others understand, adapt, and use your code.

https://doi.org/10.1371/journal.pcbi.1008539.g003
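The figure itself is not reproduced here, so the following is only an illustrative sketch of the commenting style described above, written in Python rather than R. The function name and argument names are hypothetical; the point is the description of inputs and outputs and the comments that state intent before each step.

```python
def infection_flow(contact_rate, infection_prob, susceptible, infected):
    """Return the rate at which susceptible individuals become infected.

    Inputs:
        contact_rate   -- contacts per individual per unit time (c), must be >= 0
        infection_prob -- probability of infection given contact (gamma), in [0, 1]
        susceptible    -- proportion of the population that is susceptible (S)
        infected       -- proportion of the population that is infected (I)

    Output:
        The flow from the S compartment to the I compartment per unit time.
    """
    # The transmission rate combines how often individuals meet with
    # how likely a meeting is to pass on the infection.
    transmission_rate = contact_rate * infection_prob

    # Mass-action assumption: new infections scale with the product of
    # the susceptible and infected proportions.
    return transmission_rate * infected * susceptible


# Example call with made-up values.
flow = infection_flow(contact_rate=2.0, infection_prob=0.1,
                      susceptible=0.9, infected=0.1)
print(flow)
```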

When writing long code, test portions of it separately. If you are writing code that will require a lot of processing power or memory to run, use a simple example first, both to estimate how long the project will take, and to avoid waiting 12 hours to see if it works. Additionally, when writing code, try to avoid too many packages and “tricks” as it can make your code more difficult to understand. Do not be afraid of writing 2 separate functions if it will make your code more intuitive. As with writing, your skill as a writer is not dependent on your ability to use big words, but instead about making sure your reader understands what you are trying to communicate.
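One way to act on the advice about trying a simple example first is to time a small run and extrapolate before launching the full job. The sketch below assumes a hypothetical `run_model` function (a basic Euler update of an SIR model with illustrative parameter values) and assumes that run time scales roughly linearly with the number of steps.

```python
import time


def run_model(n_steps, dt=0.1, c_gamma=0.2, theta=0.05):
    """Advance a simple SIR model by n_steps Euler steps and return (S, I, R)."""
    s, i, r = 0.99, 0.01, 0.0
    for _ in range(n_steps):
        new_infections = c_gamma * i * s * dt
        recoveries = theta * i * dt
        s, i, r = s - new_infections, i + new_infections - recoveries, r + recoveries
    return s, i, r


# Step 1: run a small trial and time it.
small_steps = 10_000
start = time.perf_counter()
s, i, r = run_model(small_steps)
elapsed = time.perf_counter() - start

# Step 2: sanity-check the small run before trusting a long one.
# In a closed population the compartments should still sum to 1.
assert abs(s + i + r - 1.0) < 1e-9

# Step 3: extrapolate to the full problem size (assumes linear scaling).
full_steps = 10_000_000
estimated_seconds = elapsed * full_steps / small_steps
print(f"Small run took {elapsed:.4f} s; full run estimated at {estimated_seconds:.1f} s")
```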

Rule 6: Sweat the “right” small stuff

By “sweat the ‘right’ small stuff,” we mean considering the details and assumptions that can potentially make or break a mathematical model. A good start would be to ensure your model follows the rules of mass and energy conservation. In a closed system, mass and energy can be neither created nor destroyed, and thus, the left side of the mathematical equation must equal the right under all circumstances. For example, in Eq 2, if the number of susceptible individuals decreases due to infection, we must include a negative term in this equation (−cγIS) to indicate that loss and its counterpart (+cγIS) in the infected individuals equation, Eq 3, to represent that gain. Similarly, units of all terms must also be balanced on both sides of the equation. For example, if we wish to add or subtract 2 values, we must ensure their units are equivalent (e.g., we cannot add day⁻¹ and year⁻¹). Simple oversights in units can lead to major setbacks and create bizarre dynamics, so it is worth taking the time to ensure the units match up.
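The equations referred to above are not reproduced in this section. As a sketch, a standard SIR system consistent with the terms quoted (−cγIS, +cγIS) and with the recovery rate θ from Fig 1 would be the following. The exact form and numbering in the original may differ, and S, I, and R are assumed here to be proportions of a closed population.

```latex
\begin{align}
\frac{dS}{dt} &= -c\gamma I S \\
\frac{dI}{dt} &= c\gamma I S - \theta I \\
\frac{dR}{dt} &= \theta I \\
\frac{d(S + I + R)}{dt} &= -c\gamma I S + \left(c\gamma I S - \theta I\right) + \theta I = 0
\end{align}
```

The last line is the conservation check: every term that leaves one compartment enters another, so the total does not change. Under the same assumption, if time is measured in days, then cγ and θ must both carry units of day⁻¹ for each term to match the units of the left-hand side.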

Modellers should also consider the fundamental boundary conditions of each parameter to determine if there are some values that are illogical. Logical constraints and boundaries can be developed for each parameter using prior knowledge and assumptions (e.g., Huntley [ 18 ]). For example, when considering an SIR model, there are 2 parameters that comprise the transmission rate—the contact rate, c , and the probability of infection given contact, γ . Using our intuition, we can establish some basic rules: (1) the contact rate cannot be negative; (2) the number of susceptible, infected, and recovered individuals cannot be below 0; and (3) the probability of infection given contact must fall between 0 and 1. Keeping these in mind as you test your model’s dynamics can alert you to problems in your model’s structure. Finally, simulating your model is an excellent method to obtain more reasonable bounds for inputs and parameters and ensure behavior is as expected. See Otto and Day [ 5 ] for more information on the “basic ingredients” of model-building.
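The three rules listed above translate directly into a small check that can be run before each simulation. This is a minimal sketch with a hypothetical function name; it returns a list of problems rather than raising an error, which is one design choice among several.

```python
def check_sir_inputs(contact_rate, infection_prob, s, i, r):
    """Return a list of messages describing illogical inputs (empty if none)."""
    problems = []

    # Rule (1): the contact rate cannot be negative.
    if contact_rate < 0:
        problems.append("contact rate c must be >= 0")

    # Rule (2): compartment sizes cannot be below 0.
    for name, value in (("S", s), ("I", i), ("R", r)):
        if value < 0:
            problems.append(f"compartment {name} must be >= 0")

    # Rule (3): the probability of infection given contact lies in [0, 1].
    if not 0 <= infection_prob <= 1:
        problems.append("infection probability gamma must be between 0 and 1")

    return problems


# Example calls with made-up values.
print(check_sir_inputs(2.0, 0.1, 0.9, 0.1, 0.0))
print(check_sir_inputs(-1.0, 1.5, 0.9, -0.1, 0.0))
```

Calling the same check on the state at each time step of a simulation can also flag structural problems, such as a compartment drifting below zero.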

Rule 7: Simulate, simulate, simulate

Even though there is a lot to be learned from analyzing simple models and their general solutions, modelling a complex world sometimes requires complex equations. Unfortunately, the cost of this complexity is often the loss of general solutions [19]. Instead, many biologists must calculate a numerical (i.e., approximate) solution and simulate the dynamics of these models [20]. Simulations allow us to explore model behavior, given different structures, initial conditions, and parameters (Fig 4). Importantly, they allow us to understand the dynamics of complex systems that may otherwise not be ethical, feasible, or economically viable to explore in natural systems [21].

Fig 4. Gold lines represent the SIR structure (Fig 2A) where lifelong immunity of individuals is inferred after infection, and blue lines represent an SIRS structure (Fig 2B) where immunity is lost over time. The solid lines represent model dynamics assuming a recovery rate (θ) of 0.05, while dotted lines represent dynamics assuming a recovery rate of 0.1. All model runs assume a transmission rate, cγ, of 0.2 and an immunity loss rate, ψ, of 0.01. By using simulations, we can explore how different processes and rates change the system’s dynamics and furthermore determine at what point in time these differences are detectable. SIR, Susceptible-Infected-Recovered; SIRS, Susceptible-Infected-Recovered-Susceptible.

https://doi.org/10.1371/journal.pcbi.1008539.g004
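A simulation along the lines of the one summarized in the caption can be sketched with a few lines of standard-library Python. The parameter values (cγ = 0.2, θ = 0.05 or 0.1, ψ = 0.01) come from the caption. Everything else is an assumption made for illustration: the initial conditions, the time span, the step size, the use of proportions, and the fourth-order Runge-Kutta integrator. Setting ψ = 0 turns the SIRS model into the SIR model.

```python
def sirs_derivatives(state, c_gamma, theta, psi):
    """Rates of change (dS/dt, dI/dt, dR/dt); psi = 0 gives the plain SIR model."""
    s, i, r = state
    infection = c_gamma * i * s
    recovery = theta * i
    waning = psi * r
    return (waning - infection, infection - recovery, recovery - waning)


def rk4_step(state, dt, **params):
    """Advance the state by one classical fourth-order Runge-Kutta step."""
    def shifted(base, slope, h):
        return tuple(b + h * k for b, k in zip(base, slope))

    k1 = sirs_derivatives(state, **params)
    k2 = sirs_derivatives(shifted(state, k1, dt / 2), **params)
    k3 = sirs_derivatives(shifted(state, k2, dt / 2), **params)
    k4 = sirs_derivatives(shifted(state, k3, dt), **params)
    return tuple(x + dt / 6 * (a + 2 * b + 2 * c + d)
                 for x, a, b, c, d in zip(state, k1, k2, k3, k4))


def simulate(c_gamma, theta, psi, s0=0.99, i0=0.01, r0=0.0, t_end=200.0, dt=0.1):
    """Return the list of (S, I, R) states from time 0 to t_end."""
    state = (s0, i0, r0)
    trajectory = [state]
    for _ in range(int(round(t_end / dt))):
        state = rk4_step(state, dt, c_gamma=c_gamma, theta=theta, psi=psi)
        trajectory.append(state)
    return trajectory


# Compare both structures under both recovery rates.
results = {}
for theta in (0.05, 0.1):
    for label, psi in (("SIR", 0.0), ("SIRS", 0.01)):
        run = simulate(c_gamma=0.2, theta=theta, psi=psi)
        results[(label, theta)] = run
        peak_infected = max(point[1] for point in run)
        print(f"{label}, theta={theta}: peak I = {peak_infected:.3f}, "
              f"final I = {run[-1][1]:.4f}")
```

In practice a library solver would usually replace the hand-written integrator; it is written out here only to keep the sketch self-contained.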

One common method of exploring the dynamics of complex systems is through sensitivity analysis (SA). We can use this simulation-based technique to ascertain how changes in parameters and initial conditions will influence the behavior of a system. For example, if simulated model outputs remain relatively similar despite large changes in a parameter value, we can expect the natural system represented by that model to be robust to similar perturbations. If instead, simulations are very sensitive to parameter values, we can expect the natural system to be sensitive to variation in them. In Fig 4, we can see that both SIR models are very sensitive to the recovery rate parameter (θ), suggesting that the natural system would also be sensitive to individuals’ recovery rates. We can therefore use SA to help inform which parameters are most important and to determine which are distinguishable (i.e., identifiable). Additionally, if observed system data are available, we can use SA to help us establish reasonable boundaries for our initial conditions and parameters. When adopting SA, we can either vary parameters or initial conditions one at a time (local sensitivity) or, preferably, vary several of them in tandem (global sensitivity). We recognize this topic may be overwhelming to those new to modelling, so we recommend reading Marino and colleagues [22] and Saltelli and colleagues [23] for details on implementing different SA methods.
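As a rough illustration of the one-at-a-time (local) approach, the sketch below perturbs each parameter of a basic SIR model by 10% and reports the relative change in peak infection per relative change in the parameter. The choice of output (peak infected proportion), the 10% perturbation, the initial conditions, and the simple Euler integrator are all assumptions made for this example. The global methods in the cited references vary parameters jointly and are not shown.

```python
def peak_infected(c_gamma, theta, s0=0.99, i0=0.01, t_end=300.0, dt=0.05):
    """Simulate a basic SIR model with Euler steps and return the peak of I."""
    s, i = s0, i0
    peak = i
    for _ in range(int(round(t_end / dt))):
        infection = c_gamma * i * s * dt
        recovery = theta * i * dt
        s, i = s - infection, i + infection - recovery
        peak = max(peak, i)
    return peak


baseline = {"c_gamma": 0.2, "theta": 0.05}
base_peak = peak_infected(**baseline)
relative_step = 0.10  # perturb each parameter by +10%

sensitivity = {}
for name in baseline:
    perturbed = dict(baseline)
    perturbed[name] = baseline[name] * (1 + relative_step)
    new_peak = peak_infected(**perturbed)
    # Relative change in the output divided by relative change in the parameter.
    sensitivity[name] = ((new_peak - base_peak) / base_peak) / relative_step

for name, value in sensitivity.items():
    print(f"{name}: {value:+.2f}")
```

A value near zero would suggest the output is insensitive to that parameter around the baseline; the sign shows the direction of the effect.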

Simulations are also a useful tool for testing how accurately different model fitting approaches (e.g., Maximum Likelihood Estimation versus Bayesian Estimation) can recover parameters. Given that we know the parameter values for simulated model outputs (i.e., simulated data), we can properly evaluate the fitting procedures of methods when used on that simulated data. If your fitting approach cannot even recover simulated data with known parameters, it is highly unlikely your procedure will be successful given real, noisy data. If a procedure performs well under these conditions, try refitting your model to simulated data that more closely resembles your own dataset (i.e., imperfect data). If you know that there was limited sampling and/or imprecise tools used to collect your data, consider adding noise, reducing sample sizes, and adding temporal and spatial gaps to see if the fitting procedure continues to return reasonably correct estimates. Remember, even if your fitting procedures continue to perform well given these additional complexities, issues may still arise when fitting to empirical data. Models are approximations and consequently their simulations are imperfect representations of your measured outcome of interest. However, by evaluating procedures on perfectly known imperfect data, we are one step closer to having a fitting procedure that works for us even when it seems like our data are against us.
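The simulate-then-refit check described above can be sketched as follows. Data are generated from a basic SIR model with a known recovery rate, and a deliberately simple fitting procedure (a grid search minimizing the sum of squared errors) is asked to recover that rate, first from clean data and then from data with added noise. The fitting method, noise level, candidate grid, and all function names are assumptions for illustration; they stand in for whatever likelihood-based or Bayesian procedure is actually being evaluated.

```python
import random


def infected_series(c_gamma, theta, n_days=100, steps_per_day=10, s0=0.99, i0=0.01):
    """Return the infected proportion at the start of each day (Euler integration)."""
    dt = 1.0 / steps_per_day
    s, i = s0, i0
    series = []
    for _ in range(n_days):
        series.append(i)
        for _ in range(steps_per_day):
            infection = c_gamma * i * s * dt
            recovery = theta * i * dt
            s, i = s - infection, i + infection - recovery
    return series


def fit_theta(observed, c_gamma, candidates):
    """Return the candidate theta with the smallest sum of squared errors."""
    def sum_squared_error(theta):
        predicted = infected_series(c_gamma, theta, n_days=len(observed))
        return sum((o - p) ** 2 for o, p in zip(observed, predicted))

    return min(candidates, key=sum_squared_error)


true_theta = 0.05
candidates = [round(0.01 * k, 2) for k in range(1, 21)]  # 0.01, 0.02, ..., 0.20

# Step 1: perfect simulated data with a known parameter value.
clean = infected_series(c_gamma=0.2, theta=true_theta)
estimate_clean = fit_theta(clean, c_gamma=0.2, candidates=candidates)

# Step 2: the same data with observation noise added (seeded for repeatability).
rng = random.Random(1)
noisy = [max(0.0, value + rng.gauss(0.0, 0.01)) for value in clean]
estimate_noisy = fit_theta(noisy, c_gamma=0.2, candidates=candidates)

print(f"true theta = {true_theta}")
print(f"estimate from clean data = {estimate_clean}")
print(f"estimate from noisy data = {estimate_noisy}")
```

The same pattern extends to the harsher tests mentioned in the text, such as dropping observations or inserting gaps before refitting.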

Rule 8: Expect model fitting to be a lengthy, arduous but creative task

Model fitting requires an understanding of both the assumptions and limitations of your model, as well as the specifics of the data to be used in the fitting. The latter can be challenging, particularly if you did not collect the data yourself, as there may be additional uncertainties regarding the sampling procedure, or the variables being measured. For example, the incidence data commonly adopted to fit SIR models often contain biases related to underreporting, selective reporting, and reporting delays [ 24 ]. Taking the time to understand the nuances of the data is critical to prevent mismatches between the model and the data. In a bad case, a mismatch may lead to a poor-fitting model. In the worst case, a model may appear well-fit, but will lead to incorrect inferences and predictions.

Model fitting, like all aspects of modelling, is easier with the appropriate set of skills (see Rule 3). In particular, being proficient at constructing and analyzing mathematical models does not mean you are prepared to fit them. Fitting models typically requires additional in-depth statistical knowledge related to the characteristics of probability distributions, deriving statistical moments, and selecting appropriate optimization procedures. Luckily, a substantial portion of this knowledge can be gleaned from textbooks and methods-based research articles. These resources can range from covering basic model fitting, such as determining an appropriate distribution for your data and constructing a likelihood for that distribution (e.g., Hilborn and Mangel [7]), to more advanced topics, such as accounting for uncertainties in parameters, inputs, and structures during model fitting (e.g., Dietze [25]). We find that these sources, among others (e.g., Hobbs and Hooten [26] for Bayesian methods; Adams and colleagues [27] for fitting noisy and sparse datasets; Sirén and colleagues [28] for fitting individual-based models; and Williams and Kendall [29] for multiobjective optimization, to name a few), are not only useful when starting to fit your first models, but are also useful when switching from one technique or model to another.

After you have learned about your data and brushed up on your statistical knowledge, you may still run into issues when model fitting. If you are like us, you will have incomplete data, small sample sizes, and strange data idiosyncrasies that do not seem to be replicated anywhere else. At this point, we suggest you be explorative in the resources you use and accept that you may have to combine multiple techniques and/or data sources before it is feasible to achieve an adequate model fit (see Rosenbaum and colleagues [ 30 ] for parameter estimation with multiple datasets). Evaluating the strength of different techniques can be aided by using simulated data to test these techniques, while SA can be used to identify insensitive parameters which can often be ignored in the fitting process (see Rule 7).

Model accuracy is an important metric but “good” models are also precise (i.e., reliable). During model fitting, to make models more reliable, the uncertainties in their inputs, drivers, parameters, and structures, arising due to natural variability (i.e., aleatory uncertainty) or imperfect knowledge (i.e., epistemic uncertainty), should be identified, accounted for, and reduced where feasible [ 31 ]. Accounting for uncertainty may entail measurements of uncertainties being propagated through a model (a simple example being a confidence interval), while reducing uncertainty may require building new models or acquiring additional data that minimize the prioritized uncertainties (see Dietze [ 25 ] and Tsigkinopoulou and colleagues [ 32 ] for a more thorough review on the topic). Just remember that although the steps outlined in this rule may take a while to complete, when you do achieve a well-fitted reliable model, it is truly something to be celebrated.

Rule 9: Give yourself time (and then add more)

Experienced modellers know that it often takes considerable time to build a model and that even more time may be required when fitting to real data. However, the pervasive caricature of modelling as being “a few lines of code here and there” or “a couple of equations” can lead graduate students to hold unrealistic expectations of how long finishing a model may take (or when to consider a model “finished”). Given the multiple considerations that go into selecting and implementing models (see previous rules), it should be unsurprising that the modelling process may take weeks, months, or even years. Remembering that a published model is the final product of long and hard work may help reduce some of your time-based anxieties. In reality, the finished product is just the tip of the iceberg and often unseen is the set of failed or alternative models providing its foundation. Note that taking time early on to establish what is “good enough” given your objective, and to instill good modelling practices, such as developing multiple models, simulating your models, and creating well-documented code, can save you considerable time and stress.

Rule 10: Care about the process, not just the endpoint

As a graduate student, hours of labor coupled with relative inexperience may lead to an unwillingness to change to a new model later down the line. But being married to one model can restrict its efficacy, or worse, lead to incorrect conclusions. Early planning may mitigate some modelling problems, but many issues will only become apparent as time goes on. For example, perhaps model parameters cannot be estimated as you previously thought, or assumptions made during model formulation have since proven false. Modelling is a dynamic process, and some steps will need to be revisited many times as you correct, refine, and improve your model. It is also important to bear in mind that the process of model-building is worth the effort. The process of translating biological dynamics into mathematical equations typically forces us to question our assumptions, while a misspecified model often leads to novel insights. While we may wish we had the option to skip to a final finished product, in the words of Drake, “sometimes it’s the journey that teaches you a lot about your destination”.

There is no such thing as a failed model. With every new error message or wonky output, we learn something useful about modelling (mostly begrudgingly) and, if we are lucky, perhaps also about the study system. It is easy to cave in to the ever-present pressure to perform, but as graduate students, we are still learning. Luckily, you are likely surrounded by other graduate students, often facing similar challenges who can be an invaluable resource for learning and support. Finally, remember that it does not matter if this was your first or your 100th mathematical model, challenges will always present themselves. However, with practice and determination, you will become more skilled at overcoming them, allowing you to grow and take on even greater challenges.

Acknowledgments

We thank Marie-Josée Fortin, Martin Krkošek, Péter K. Molnár, Shawn Leroux, Carina Rauen Firkowski, Cole Brookson, Gracie F.Z. Wild, Cedric B. Hunter, and Philip E. Bourne for their helpful input on the manuscript.

- 1. Hurford A. Overview of mathematical modelling in biology II. 2012 [cited 2020 Oct 25]. Available: https://theartofmodelling.wordpress.com/2012/01/04/overview-of-mathematical-modelling-in-biology-ii/

- 3. Maki Y, Hirose H, ADSIR M. Infectious Disease Spread Analysis Using Stochastic Differential Equations for SIR Model. International Conference on Intelligent Systems, Modelling and Simulation. IEEE. 2013.

- 5. Otto SP, Day T. A biologist’s guide to mathematical modeling in ecology and evolution. Princeton, NJ: Princeton University Press; 2007.

- 7. Hilborn R, Mangel M. The ecological detective: Confronting models with data. Princeton, NJ: Princeton University Press; 1997.

- 10. Darwin C. The autobiography of Charles Darwin. Darwin F, editor. 2008. Available: https://www.gutenberg.org/files/2010/2010-h/2010-h.htm

- 11. Stewart J. Calculus: Early transcendentals. Eighth. Boston, MA: Cengage Learning; 2015.

- 12. Poole D. Linear algebra: A modern introduction. Fourth. Stamford, CT: Cengage Learning; 2014.

- 17. R Core Team. R: A language and environment for statistical computing (version 3.6.0, R foundation for statistical computing). 2020.

- 18. Huntley HE. Dimensional analysis. First. New York, NY: Dover Publications; 1967.

- 19. Corless RM, Fillion N. A graduate introduction to numerical methods. New York, NY: Springer; 2016.

- 23. Saltelli A, Ratto M, Andres T, Campolongo F, Cariboni J, Gatelli D, et al. Global sensitivity analysis: The primer. Chichester: Wiley; 2008.

- 25. Dietze MC. Ecological forecasting. Princeton, NJ: Princeton University Press; 2017.

- 26. Hobbs NT, Hooten MB. Bayesian models: A statistical primer for ecologists. Princeton, NJ: Princeton University Press

Mathematical modelling: a language that explains the real world

Deputy Director of the DSI-NRF Centre of Excellence in Mathematical and Statistical Sciences and Senior Lecturer in the School of Computer Science and Applied Mathematics, University of the Witwatersrand

Disclosure statement

Ashleigh Jane Hutchinson received funding from the National Research Foundation, South Africa. She is affiliated with the University of the Witwatersrand and the CoE-MaSS, South Africa.

The motion of all solid objects and fluids can be described using the unifying language of mathematics. Wind movement, turbulence in the oceans, the migration of butterflies in a big city: all these phenomena can be better understood using mathematics.

Imagine dropping a coin from a tall building. The simple, yet brilliant, result derived by Sir Isaac Newton – that the resultant force equals mass times acceleration – can be used to predict the position of the coin at any given time. The velocity at which the coin reaches the ground can also be calculated with ease. This is a prime example of a mathematical model that can be used to explain what we observe in reality.
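As a rough illustration of the falling-coin model, the sketch below applies Newton's second law under two simplifying assumptions that are not stated in the text: air resistance is ignored, and gravitational acceleration is a constant 9.81 m/s². The function names and the 100 m building height are hypothetical choices made only for this example.

```python
import math

G = 9.81  # assumed constant gravitational acceleration, in m/s^2


def position(height_m: float, t: float) -> float:
    """Height above the ground (m) at time t (s) for a coin released from rest."""
    return height_m - 0.5 * G * t**2


def fall_time(height_m: float) -> float:
    """Time (s) to reach the ground, from solving height - g*t^2/2 = 0."""
    return math.sqrt(2.0 * height_m / G)


def impact_speed(height_m: float) -> float:
    """Speed (m/s) on reaching the ground, using v = g * t."""
    return G * fall_time(height_m)


if __name__ == "__main__":
    h = 100.0  # hypothetical building height in metres
    print(f"fall time: {fall_time(h):.2f} s")
    print(f"impact speed: {impact_speed(h):.2f} m/s")
```

A real coin would tumble and be slowed by drag, so these numbers are an upper bound on the speed rather than a prediction; adding a drag term is the kind of refinement a more detailed model would make.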

Mathematics can be used to solve very complex problems – far more complicated than that of a coin falling under gravity. Industries are in no short supply of such problems. Mathematical techniques can explain how the world works and make it better. Mathematical modelling is capable of saving lives, assisting in policy and decision-making, and optimising economic growth. It can also be exploited to help understand the Universe and the conditions needed to sustain life.

My own work, for example, has involved developing mathematical models for use in industries such as sugar, mining and energy conservation. I’ve worked with researchers from different disciplines to understand the challenges that these industries face, and to help mitigate their effects. This research shows how, far from being simply an abstract area of knowledge, mathematics has many important and practical uses in the real world.

Mining and sugar

For instance, our research in the mining industry deals with roof collapse in underground mines. Such collapses pose a serious concern to the safety of miners, as well as the efficiency of mining operations. It’s important to identify possible sections of a mine roof that may be prone to collapse. That’s where mathematical modelling comes in: with further investigation and research, we can use it to predict roof collapses. Miners can be safely evacuated before something like this happens.

South Africa, where I conduct my research, had a record-low number of deaths due to mining related incidents in 2019. But even a single preventable death is unacceptable. Mathematical modelling can help to inform safety measures and keep the fatality rate down.

The sugar industry has also benefited from mathematical modelling. Manufacturers want to produce as many sugar crystals as possible, in minimal time. We have used mathematical modelling to suggest ways in which the process of separating molasses from the sugar crystals can be sped up so that the production time of sugar can be optimised.

This work doesn’t just benefit individual sugar producers. In South Africa, the sugar industry not only provides job opportunities, but surplus sugar is also exported. Getting as much of the end product as possible out of the raw materials contributes to the economy by increasing the amount of sugar exported to other countries.

Power predictions

One of the research projects I have been involved with focuses on a very hot topic in South Africa and elsewhere on the African continent: power supply.

A lack of power supply is not only a nuisance in domestic households, it has a fundamental effect on the economy. One of the relevant issues here is what to charge users. Tariffs, which are the price per unit of electricity, are based on numerous factors. High tariff price increases have led to households and businesses investing in renewable energy sources and removing themselves from the grid. Once this happens, less income is available to cross-subsidise the poor, who would otherwise not have access to electricity.

Mathematical modelling can be used to show – as we have done – that increasing tariff prices, which encourages consumers to invest in alternative energy supplies, will lead to exactly the situation described above, putting the country’s state-owned power entity, Eskom, at risk.

This is an example of how mathematical modelling can play a valuable role in informing policy decisions.

Bringing researchers together

Our research also highlights the importance of collaboration in tackling difficult problems in industry.

This is a crucial element of mathematical modelling: it unifies researchers from different academic backgrounds. Developing a mathematical model is really just the start of a research project. Predictions obtained from any mathematical model need to be studied by experts in order to verify whether the model is a valid and accurate representation of the real-world problem of interest. So for instance, our work on the power grid could be assessed by economists to test it against real-world figures, then applied by policy makers.

A systematic literature review of the current discussion on mathematical modelling competencies: state-of-the-art developments in conceptualizing, measuring, and fostering

- Open access

- Published: 15 October 2021

- Volume 109, pages 205–236 (2022)

- Mustafa Cevikbas,

- Gabriele Kaiser (ORCID: orcid.org/0000-0002-6239-0169) &

- Stanislaw Schukajlow

This article has been updated

Mathematical modelling competencies have become a prominent construct in research on the teaching and learning of mathematical modelling and its applications in recent decades; however, current research is diverse, proposing different theoretical frameworks and a variety of research designs for the measurement and fostering of modelling competencies. The study described in this paper was a systematic literature review of the literature on modelling competencies published over the past two decades. Based on a full-text analysis of 75 peer-reviewed studies indexed in renowned databases and published in English, the study revealed the dominance of an analytical, bottom-up approach for conceptualizing modelling competencies and distinguishing a variety of sub-competencies. Furthermore, the analysis showed the great richness of methods for measuring modelling competencies, although a focus on (non-standardized) tests prevailed. Concerning design and offering for fostering modelling competencies, the majority of the papers reported training strategies for modelling courses. Overall, the current literature review pointed out the necessity for further theoretical work on conceptualizing mathematical modelling competencies while highlighting the richness of developed empirical approaches and their implementation at various educational levels.

Modelling and applications are essential components of mathematics, and applying mathematical knowledge in the real world is a core competence of mathematical literacy; thus, fostering students’ competence in solving real-world problems is a widely accepted goal of mathematics education, and mathematical modelling is included in many curricula across the world. Despite this consensus on the relevance of mathematical modelling competencies, various influential approaches exist that define modelling competencies differently. In psychological discourse, competencies are mainly defined as cognitive abilities for solving specific problems, complemented by affective components, such as the volitional and social readiness to use the problem solutions (Weinert, 2001). In the mathematics educational discourse, Niss and Højgaard (2011, 2019) emphasized cognitive abilities as the core of mathematical competencies within their extensive framework, an updated version of which has recently been published; therefore, the question of how to conceptualize competence as an overall construct, with competency and competencies as associated derivations, remains open.

The discussion on the teaching and learning of mathematical modelling, which began in the 1980s, has emphasized the practical application of mathematical modelling skills; for example, the prologue of the proceedings of the first conference on the teaching and learning of mathematical modelling and applications (hereafter ICTMA) stated: “To become proficient in modelling, you must fully experience it – it is no good just watching somebody else do it, or repeat what somebody else has done – you must experience it yourself” (Burghes, 1984, p. xiii). This strong connection to proficiency may be one reason for the early development of the discourse on modelling competencies compared to other domains, such as teacher education.

Despite this broad consensus on the importance of modelling competencies and the relevance of the modelling cycle in specifying the expected modelling steps and phases, no worldwide accepted research evidence exists on the effects of short- and long-term mathematical modelling examples and courses in school and higher education on the development of modelling competencies. One reason for this research gap may be the diversity of instruments for measuring modelling competencies and the lack of agreed-upon standards for investigating the effects of fostering mathematical modelling competencies at various educational levels, which depends on reliable and valid measurement instruments. Finally, only a few approaches have addressed or further developed the construct of mathematical modelling competencies and/or its descriptions and components. Precise conceptualizations of mathematical modelling competence as a construct are needed to underpin reliable and valid measurement instruments and implementation studies to foster mathematical modelling competencies effectively.

With this systematic literature review, the results of which are presented in this paper, we aimed to analyze current state-of-the-art research regarding the development of modelling competencies and their conceptualization, measurement, and fostering. This analysis hopefully will contribute to a better understanding of the previously mentioned research gaps and may encourage further research.

1 Theoretical frameworks as the basis for the research questions and analysis

To date, only one comprehensive literature review on modelling competencies, a classical literature search by Kaiser and Brand (2015), has been conducted, constituting an important starting point for the current discourse on mathematical modelling. In contrast to the present systematic literature review, which uses reputable databases, this classical literature review was based on the proceedings of the biennial ICTMA series. A study by the International Commission on Mathematical Instruction (ICMI) on modelling and applications (the 14th ICMI Study), conducted at the quadrennial International Congress on Mathematical Education (ICME), reviewed related books published by special groups, together with special issues of mathematics educational journals and other journal papers. Based on this literature survey, the development of the discourse on modelling competencies since the start of the international conference series in 1983 was reconstructed by Kaiser and Brand (2015).

The review indicated that the early discourse addressed the constructs of modelling skills or modelling abilities, including metacognitive skills. The first widespread use of the modelling competence construct emerged with the 14th ICMI Study on Applications and Modelling, a separate part of which was devoted to modelling competencies (Blum et al., 2007). More or less simultaneously, the discussion on modelling competence, and its conceptualization and measurement, started at ICTMA12 (Haines et al., 2007) and continued at ICTMA14 (Kaiser et al., 2011), with both proceedings containing sections on modelling competencies.

In their literature review, Kaiser and Brand (2015) distinguished four central perspectives on mathematical modelling competencies with different emphases and foci. These four perspectives were characterized as follows:

Introduction of modelling competencies and an overall comprehensive concept of competencies by the Danish KOM project (Niss & Højgaard, 2011, 2019)

Measurement of modelling skills and the development of measurement instruments by a British-Australian group (Haines et al., 1993; Houston & Neill, 2003)

Development of a comprehensive concept of modelling competencies based on sub-competencies and their evaluation by a German modelling group (Kaiser, 2007; Maaß, 2006)

Integration of metacognition into modelling competencies by an Australian modelling group (Galbraith et al., 2007; Stillman, 2011; Stillman et al., 2010).

These perspectives shaping the discourse on modelling competencies followed two distinct approaches to understanding and defining mathematical modelling competence: a holistic understanding and an analytic description of modelling competencies, referred to as top-down and bottom-up approaches by Niss and Blum (2020).

In the following, we describe these two diametrically opposite approaches and identify intermediate approaches.

1.1 A holistic approach to mathematical modelling competence—the top-down approach

The Danish KOM project first clarified the concept of modelling competence, which was embedded by Niss and Højgaard (2011) into an overall concept of mathematical competence consisting of eight mathematical competencies. The modelling competency was defined as one of the eight competencies, which were seen as aspects of a holistic description of mathematical competency, in the sense of Shavelson (2010). The modelling competency was defined by Niss and Højgaard (2011) as follows:

This competency involves, on the one hand, being able to analyze the foundations and properties of existing models and being able to assess their range and validity. Belonging to this is the ability to ‘de-mathematise’ (traits of) existing mathematical models; i.e. being able to decode and interpret model elements and results in terms of the real area or situation which they are supposed to model. On the other hand, competency involves being able to perform active modelling in given contexts; i.e. mathematising and applying it to situations beyond mathematics itself. (p. 58)

In their revised version of the definition of mathematical competence, Niss and Højgaard (2019) explicitly excluded affective aspects such as volition and focused on cognitive components. Referring to the mastery of the modelling competency, Blomhøj and Højgaard Jensen (2007) developed three dimensions for evaluation:

Degree of coverage, referring to the part of the modelling process the students work with and the level of their reflection

Technical level, describing the kind of mathematics students use

Radius of action, denoting the domain of situations in which students perform modeling activities.

Niss and Blum’s (2020) description of these approaches called this definition the “top-down” definition, referring explicitly to the expression “modelling competency” as singular, denoting a “distinct, recognizable and more or less well-defined entity” (p. 80).

Concerning the fostering of modelling competencies, Blomhøj and Jensen (2003) distinguished holistic and atomistic approaches. The holistic approach depends on a full-scale modelling process, with the students working through all phases of the modelling process. In the atomistic approach, students concentrate on selected phases of the modelling process, especially the processes of mathematizing and analyzing models mathematically, because these phases are seen as especially demanding. However, the authors issued a strong plea for a balance between these two approaches, since neither of them alone was seen as adequate.

1.2 An analytic approach to modelling competencies and sub-competencies—the bottom-up approach

The analytic definition of competence refers to the seminal work of Weinert (2001), which described it as “the cognitive abilities and skills available to individuals or learnable through them to solve specific problems, as well as the associated motivational, volitional and social readiness and abilities to use problem solutions successfully and responsibly in variable situations” (Weinert, 2001, p. 27f). Based on this definition, modelling competencies were distinguished from modelling abilities: “Modelling competencies include, in contrast to modelling abilities, not only the ability but also the willingness to work out problems, with mathematical aspects taken from reality, through mathematical modelling” (Kaiser, 2007, p. 110). Similarly, Maaß (2006) described modelling competencies as the ability and willingness to work out problems with mathematical means, including knowledge as the inevitable basis for competencies. The emphasis on knowledge as part of competence is in line with the discussion on competencies in the professional development of teachers; the most recent approach within this discussion on competence as a continuum aims to connect dispositions, including knowledge and beliefs, with situation-specific skills and classroom performance (Blömeke et al., 2015). Because no standard methods exist for mathematical modelling as a means to find solutions to real-world problems, many cognitive and affective barriers must be overcome. This situation makes the metacognitive skills and knowledge needed to monitor modelling activities highly relevant to the development of non-standard approaches. The construct of metacognition has been introduced into the broad discussion about the teaching and learning of mathematical modelling and plays an increasing role within the current modelling discourse, which was called for by Stillman and Galbraith in 1998 and further developed by, among others, Stillman (2011) and Vorhölter (2018).

Departing from the developments described above, a distinction has been developed between global modelling competencies and the sub-competencies of mathematical modelling within the mathematical modelling discourse (Kaiser, 2007; Maaß, 2006). Global modelling competencies are defined as the abilities necessary to perform and reflect on the whole modelling process, to at least partially solve a real-world problem through a model developed by oneself, to reflect on the modelling process using meta-knowledge, and to develop insight into the connections between mathematics and reality and into the subjectivity of mathematical modelling. Furthermore, social competencies, such as the ability to work in groups and communicate about and via mathematics, are part of global competencies.

The sub-competencies of mathematical modelling relate to the modelling cycle, of which different descriptions exist, including the different competencies essential for performing individual steps of the modelling cycle. Based on early work by Blum and Kaiser (1997) and subsequent extensive empirical studies, the following sub-competencies of modelling competence were distinguished by Kaiser (2007, p. 111) and similarly by Maaß (2006):

Competencies to understand real-world problems and to develop a real-world model

Competencies to create a mathematical model out of a real-world model

Competencies to solve mathematical problems within a mathematical model

Competencies to interpret the mathematical results in a real-world model or a real-world situation

Competencies to challenge the developed solution and carry out the modelling process again, if necessary

Due to the strong reference to sub-competencies as part of the construct competence, this approach is called the analytic approach or, according to Niss and Blum (2020), the “bottom-up approach” (p. 80). Stillman et al. (2015) noted in their summarized description of this perspective the comprehensive character of this approach, referring to the early development of assessment instruments with multiple-choice items mapped to indicators of each sub-competence (e.g., Haines et al., 1993), which was adopted by further studies. Additionally, different levels of modelling competence were distinguished based, for example, on various test instruments (Maaß, 2006; Kaiser, 2007) and metacognitive frameworks (Stillman, 2011).

1.3 Further approaches to mathematical modelling competence

Further approaches concerning the construct of mathematical modelling competence can be distinguished, which this section briefly summarizes.

In their survey paper for the ICMI study on mathematical modelling and applications, Niss et al. (2007) proposed an enrichment of the top-down method, which integrated the main characteristics of the top-down approach with elements of the bottom-up approach. Referring to the holistic approach to competency, they defined mathematical modelling competency as follows:

[The] ability to identify relevant questions, variables, relations or assumptions in a given real world situation, to translate these into mathematics and to interpret and validate the solution of the resulting mathematical problem in relation to the given situation, as well as the ability to analyze or compare given models by investigating the assumptions being made, checking properties and scope of a given model etc. in short: modelling competency in our sense denotes the ability to perform the processes that are involved in the construction and investigation of mathematical models. (p. 12–13)

Furthermore, social competencies and mathematical competencies were included in this approach, which had (at least initially) some similarities to the bottom-up approach; however, with the inclusion of a critical analysis of modelling activities, this approach can be seen as resembling the top-down approach. In our systematic literature review, we call this approach a top-down enriched approach.

Other approaches have also been developed in the past, and hierarchical level-oriented approaches in particular have received some attention; for example, Henning and Keune (2007) focused on the cognitive demands of modelling competencies and distinguished three levels: level one, recognition and understanding of modelling; level two, independent modelling; and level three, meta-reflection on modelling. Furthermore, design-based model-eliciting activity principles, which had been developed as assessment tools for modelling competencies, were proposed as a competence framework (Lesh et al., 2000). Another framework taken up within the discourse was the framework for the successful implementation of mathematical modelling (Stillman et al., 2007).

Summarizing the description of the theoretical approaches to mathematical modelling competencies, we can state that only a few established theoretical frameworks currently exist, which are difficult to discriminate between, as they refer to each other and the ongoing discourse. Overall, although modelling competencies are clearly conceptualized, as evidenced by the inclusion of current approaches in the ongoing discussion, we could not identify a rich variety of conceptualizations on which to build our literature review.

1.4 Research questions

Building upon the above-mentioned theoretical frameworks for conceptualizing modelling competencies and ways to measure and foster them, our study systematically reviewed the existing literature in the field of modelling competencies. In order to reveal how cumulative progress has been made in research over the past few decades, we endeavored to answer the following research questions and analyze them over time (i.e., the last three decades):

1.4.1 Study characteristics and research methodologies

How are the studies on modelling competencies distributed, and how can they be characterized by the country of origin of the authors, appearance over time, type of paper (theoretical or empirical; conference proceedings papers or journal papers), use of research methods, educational level involved, previous modelling experience before implementing the study, and type of modelling task used?

1.4.2 Characteristics of the studies concerning the conceptualization, measurement, and fostering of modelling competencies

How have researchers conceptualized modelling competencies? Are the theoretical perspectives identified and described in the theoretical frameworks also reflected in the various empirical studies? Are modelling competencies conceptualized as a holistic construct, or are they further differentiated as analytic constructs using various sub-competencies?

Which instruments for measuring modelling competencies have researchers used to study (preservice) teachers’ or school students’ modelling competencies? Specifically, what types of instruments and data collection methods were used, which groups were targeted, and what were the sample sizes?

Which interventions for fostering and measuring modelling competencies have researchers used to support (preservice) teachers’ or school students’ modelling competencies?

In the following section, we describe the methods used for this literature review before we present the results. The paper closes with an outlook on further perspectives on the work based on this systematic literature review and on the contributions of the papers in this special issue of Educational Studies in Mathematics on modelling competencies.

2 Methodology of the systematic literature review

2.1 Search strategies and manuscript selection procedure

To uncover the current state of the research conceptualizing mathematical modelling competencies and their measurement and fostering, we conducted a systematic literature review. This approach involves “a review of a clearly formulated question that uses systematic and explicit methods to identify, select, and critically appraise relevant research, and to collect and analyze data from the studies that are included in the review” (Moher et al., 2009, p. 1).

The current review followed the most recent Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines (Page et al., 2021). The final literature search was carried out on March 17, 2021 in the following databases: (1) Web of Science (WoS) Core Collection, (2) Scopus, (3) PsycINFO, (4) ERIC, (5) Teacher Reference Center, (6) IEEE Xplore Digital Library, (7) SpringerLink, (8) Taylor & Francis Online Journals, (9) zbMATH Open, (10) JSTOR, (11) MathSciNet, and (12) Education Source. These databases have high-quality indexing standards and a high international reputation. Furthermore, they contain many studies in the field of educational sciences, particularly in mathematics education research. In order to capture the relevant studies in the field of mathematics education research, search strings with Boolean operators and asterisks were used in the systematic review, as shown in Table 1.

The current survey focused on studies conducted in the field of mathematics education, published in English, which closely related to the conceptualization, measurement, or fostering of mathematical modelling competencies. Our search embraced studies conducted at all levels of mathematics education and did not restrict the publication year of the studies. Overall, to specify eligible manuscripts for the review, we used five inclusion and five exclusion criteria as presented in Table 2; since not all exclusion criteria were exact opposites of the inclusion criteria, we separated exclusion and inclusion criteria. All the authors of this study were responsible for determining the eligibility of the papers for inclusion.
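To make the idea of search strings with Boolean operators and asterisks concrete, the following is a minimal sketch of how such a string can be assembled. The term groups below are hypothetical placeholders chosen for illustration; they are not the strings reported in Table 1, and `build_query` is an assumed helper name, not part of any database's interface.

```python
def build_query(term_groups):
    """Join synonyms with OR inside each group, then join the groups with AND.

    Multi-word terms are wrapped in double quotes so they are treated as phrases.
    """
    clauses = []
    for group in term_groups:
        quoted = [f'"{term}"' if " " in term else term for term in group]
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)


# Hypothetical term groups for illustration only (NOT the strings in Table 1).
# The trailing asterisk is a truncation wildcard: competenc* would match
# "competence", "competency", and "competencies" in databases that support it.
groups = [
    ["model*"],
    ["competenc*", "skill*", "abilit*"],
    ["mathematic*"],
]
query = build_query(groups)
print(query)
```

Wildcard and phrase syntax differs between databases, so a string built this way would usually need to be adapted to each search interface.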

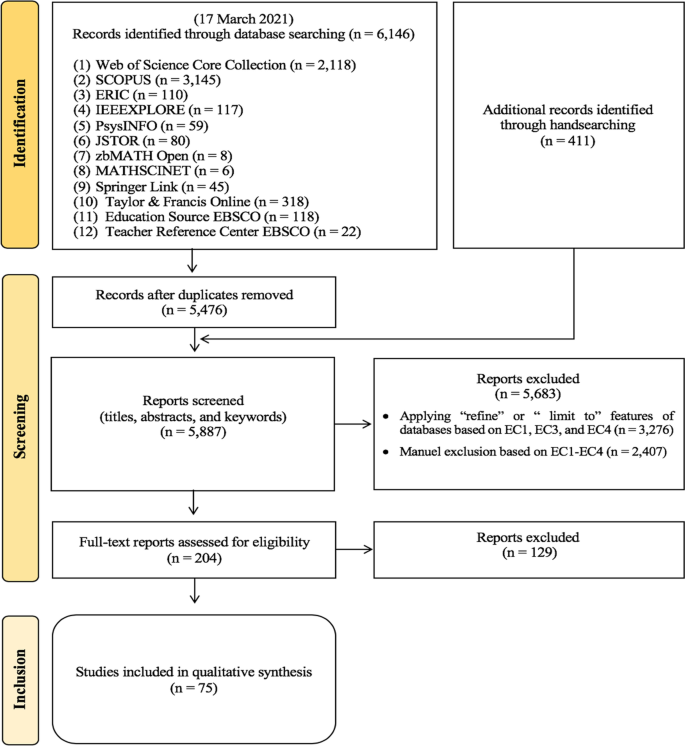

In addition to the electronic database search, we conducted a hand-search, on the basis of the first four inclusion and exclusion criteria (IC1–IC4 and EC1–EC4), of key conference proceedings that are important for mathematical modelling research but were not indexed in the electronic databases (among other reasons, because they had not been registered by their publishers). We therefore screened the ICTMA proceedings from 1984 to 2009 and the recently published ICTMA19 proceedings. There was no need to manually screen the remaining ICTMA proceedings since they were already indexed in databases covered by IC5. Hand-searching is an accepted way of identifying the most recent studies that have not yet been included in or indexed by academic databases (Hopewell et al., 2007).

The manuscript selection process was conducted in three main stages: (1) identification, (2) screening, and (3) inclusion (Page et al., 2021). In the identification stage, search strings from Table 1 were used, and a literature search of the 12 listed databases yielded 6,146 records. EndNote served as bibliographic software for managing references and removing duplicate records. After discarding 670 duplicate records, we moved to the initial screening of 5,476 records. Additionally, we found 411 potentially eligible records through hand-searching and screened all 5,887 reports based on their titles, abstracts, and keywords. First, we used the “refine” or “limit to” features of the electronic databases to exclude 3,276 papers by restricting the document type to journal article, book chapter, or conference proceedings; the language to English (all databases); and the subject areas to education/educational research and educational scientific disciplines (WoS), education (Taylor & Francis Online Journals, SpringerLink, and JSTOR), social sciences (Scopus), and mathematics (WoS and Scopus). We then manually screened the titles, abstracts, and keywords of the remaining 2,611 reports on the basis of our ICs and ECs and found 204 potentially relevant studies through independent examination by the authors. At the end of the screening stage, we examined the full-text versions of these 204 studies based on our eligibility criteria, as mentioned in Table 2. Ultimately, we included 75 studies in the systematic review with the full agreement of all the authors. Figure 1 illustrates the flow chart for the entire manuscript selection process.
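The record counts reported for the selection stages can be checked with simple arithmetic. The sketch below uses only the figures stated in the text; the variable names are our own, and the two exclusion counts at the end are derived by subtraction rather than reported by the authors.

```python
# Figures reported in the text
database_records = 6146      # records found in the 12 databases
duplicates_removed = 670     # duplicates discarded via the reference manager
hand_search_records = 411    # additional records from hand-searching
excluded_by_filters = 3276   # excluded with the databases' "refine"/"limit to" features
full_text_assessed = 204     # potentially relevant studies read in full
included = 75                # studies included in the review

# Stage-by-stage totals
after_dedup = database_records - duplicates_removed
screened = after_dedup + hand_search_records
manually_screened = screened - excluded_by_filters

# Consistency checks against the intermediate totals stated in the text
assert after_dedup == 5476
assert screened == 5887
assert manually_screened == 2611

# Derived (not reported) exclusion counts, obtained by subtraction
excluded_on_title_abstract = manually_screened - full_text_assessed
excluded_on_full_text = full_text_assessed - included
print(excluded_on_title_abstract, excluded_on_full_text)
```

All three reported intermediate totals are consistent with the reported inputs.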

Flow diagram of the manuscript selection process
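
The record counts reported for the selection process can be retraced with simple arithmetic. The following is a minimal sketch in Python that uses only the figures stated in the text; the variable names are illustrative assumptions and do not come from the original study.

```python
# Record counts as reported in the description of the selection process.
database_records = 6146              # records from the 12 electronic databases
duplicates_removed = 670             # duplicates discarded with the reference manager
hand_search_records = 411            # potentially eligible records from hand-searching
excluded_by_database_filters = 3276  # removed via the "refine" / "limit to" features
full_text_assessed = 204             # potentially relevant studies after manual screening
included_studies = 75                # studies included in the review

# Identification: remove duplicates from the database hits.
after_deduplication = database_records - duplicates_removed

# Screening: add the hand-searched records, then apply the database filters.
screened_total = after_deduplication + hand_search_records
manually_screened = screened_total - excluded_by_database_filters

# Derived counts for the two later exclusion steps.
excluded_in_manual_screening = manually_screened - full_text_assessed
excluded_after_full_text = full_text_assessed - included_studies

print("After deduplication:", after_deduplication)
print("Screened in total:", screened_total)
print("Manually screened:", manually_screened)
print("Excluded in manual screening:", excluded_in_manual_screening)
print("Excluded after full-text assessment:", excluded_after_full_text)
```

The first three derived values correspond to the 5,476, 5,887, and 2,611 records named in the text; the last two are implied by the reported totals rather than stated explicitly.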

2.2 Data analysis

Our analysis included 75 papers, which are described in Tables 9, 10, 11, and 12 in the Appendix and displayed in the electronic supplementary material. The analysis of the current review mainly consisted of a screening and a coding procedure. First, the eligible studies were screened three times by the authors and examined in-depth. A coding scheme was then developed, and the codes were structured around our research questions according to four overarching categories:

Study characteristics and research methodologies (research question 1)

Theoretical frameworks for modelling competencies (research question 2)

Measuring mathematical modelling competencies (research question 3)

Fostering mathematical modelling competencies (research question 4)

Table 3 exemplifies our coding concerning the theoretical conceptualization of the reviewed papers.

The analysis was carried out according to the qualitative content analysis method (Miles & Huberman, 1994 ), focusing on the topics the reviewed studies addressed. We analyzed the studies that concerned our research interests, systematized according to the four main categories. First, concerning research question 1, we categorized the general characteristics of the reviewed studies and the research methodologies they had used based on ten sub-categories (e.g., publication year, document type, geographic distribution, research methods, sample/participants, participants’ level of education, sample size, participants’ previous experience in modelling, task types and modelling activities, and data collection methods). Second, with a special focus on research question 2, we analyzed the theoretical frameworks for modelling competencies that motivated the studies. Regarding research questions 3 and 4, we identified the measurement and fostering strategies developed, used, or suggested in the reviewed studies.

The main parts of the coding manual can be found in the Appendix, displayed in the electronic supplementary material (Tables 9, 10, 11, and 12) with a sample coding in Table 12. Our initial coding was conducted by the first author, and multiple strategies were then used to check the coding reliability using Miles and Huberman’s (1994, p. 64) formula: “reliability = number of agreements / (total number of agreements + disagreements).” First, we applied a code-recode strategy, which involved recoding all the studies after an interval of four weeks, and the consistency rate between the two distinct coding sessions was computed as 0.97. Second, to test coding reliability, 20% of the reviewed papers were randomly selected and cross-checked for coherence by a coder other than the authors, who had previous experience in the mathematical modelling research area and in qualitative data analysis. The intercoder reliability was found to be 0.93. Third, the first two authors separately coded the theoretical frameworks of all the included studies because these frameworks for modelling competencies were more complex to code than the other categories due to more interpretative elements. After double coding all the data concerning the theoretical frameworks underpinning the studies, the intercoder reliability rate was 0.91. After applying these strategies, all coders discussed the coding schedule, with a particular focus on the discrepancies between different codes, to achieve full consensus. All the computed reliability rates illustrated that the coding system was sufficiently reliable (Creswell, 2013).
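
The quoted reliability formula is a simple proportion of agreements. The sketch below, in Python, shows one way it could be computed from two coding passes over the same units. The function names and the example codes are hypothetical, since the review reports only the resulting rates and not the underlying agreement counts.

```python
from typing import Hashable, Sequence


def agreement_reliability(agreements: int, disagreements: int) -> float:
    """Return agreements / (agreements + disagreements), as in the quoted formula."""
    total = agreements + disagreements
    if total <= 0:
        raise ValueError("at least one coding decision is required")
    return agreements / total


def reliability_from_codes(first: Sequence[Hashable], second: Sequence[Hashable]) -> float:
    """Compare two coding passes over the same units, position by position."""
    if len(first) != len(second):
        raise ValueError("both passes must cover the same units")
    agreements = sum(a == b for a, b in zip(first, second))
    return agreement_reliability(agreements, len(first) - agreements)


# Hypothetical codes assigned to five units in two separate coding sessions.
pass_one = ["code_a", "code_b", "code_a", "code_c", "code_a"]
pass_two = ["code_a", "code_b", "code_c", "code_c", "code_a"]

# Four of the five units received the same code in both sessions.
print(reliability_from_codes(pass_one, pass_two))
```

The same function applies to both the code-recode check (one coder, two sessions) and the intercoder checks (two coders, one session), because the formula only counts matching and non-matching decisions.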

3 Results of the systematic literature review on mathematical modelling competencies

3.1 Study characteristics and research methodologies of the papers (research question 1)

3.1.1 Types of documents and publication years

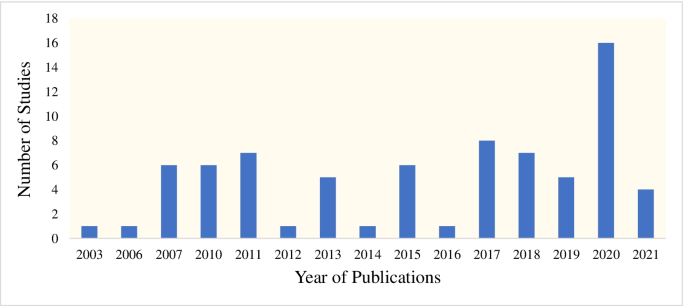

The 75 papers included in our study consisted of 67 empirical studies, 4 theoretical studies, 3 survey or overview studies, and 1 document analysis study with the following distribution of papers: 31 journal articles, 42 conference proceedings, and 2 book chapters. Eligible papers were published in 21 different scientific journals, including 10 mathematics education journals, 5 educational journals, and 4 interdisciplinary scientific journals focusing on science, technology, engineering, and mathematics (STEM) education. The reviewed articles published in mathematics education journals constituted only 7% of all the reviewed studies. Concerning conference proceedings, the majority of the eligible papers came from ICTMA proceedings (n = 31), with only a few studies from other mathematics education conferences (mainly ICME, Psychology in Mathematics Education [PME], and Congress of the European Society for Research in Mathematics Education [CERME]). Concerning publication years, the reviewed studies appeared between 2003 and 2021, and an increase was observed in studies on modelling competencies in 2020 (see Fig. 2). Fig. 2 does not show a steady increase over time; in particular, the biennial ICTMA conferences and their subsequently published proceedings affect the yearly counts. We refer to papers from the books of the ICTMA conference series as conference proceedings, although these books have not carried that label in the past decade owing to their selectivity and rigorous peer-review process.

Numbers of annual studies on mathematical modelling competencies

3.1.2 Geographic distribution

The results concerning geographic distribution revealed the contributions of researchers from different countries to research on mathematical modelling competencies. An analysis conducted separately based on all authors’ affiliations and first authors’ affiliations found few important differences; thus, only the results based on all authors’ country affiliations are tabulated. When reviewing the studies, geographical origins were critical, as the classification criteria reflected the research culture of the countries to a certain extent; for example, the competence construct is especially prominent in Europe, whereas in other parts of the world, other constructs, such as proficiency, are more common. We therefore found as expected that most authors came from Europe, followed by Asia, Africa, and America, and only one came from Australia. In particular, the authors came from 18 different countries, with Germany being the most prominent. Table 4 indicates the distribution of the authors by country and continent.

3.1.3 Research designs and data collection methods

The analysis revealed that roughly one-third of the reviewed studies (32%, n = 24) used quantitative research methods (e.g., experimental, quasi-experimental, comparative, correlational research, survey, and relational survey models), followed by qualitative research methods (e.g., case study and grounded theory; 27%, n = 20) and design-based research methods (5%, n = 4). Only one study used both qualitative and quantitative research methods, and another study relied on document analysis. A few theoretical studies (5%, n = 4) and overview/survey studies (4%, n = 3) were counted among the remainder. For 18 eligible studies (24%), it was not possible to identify the research method used.
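
The percentages in this paragraph follow from dividing each count by the 75 reviewed studies and rounding to whole numbers. The following minimal Python sketch reproduces that arithmetic from the counts stated above; the dictionary keys are illustrative labels chosen for this sketch, not the authors' coding categories.

```python
# Number of studies per research design, as reported in the paragraph above.
design_counts = {
    "quantitative": 24,
    "qualitative": 20,
    "design-based": 4,
    "mixed methods": 1,
    "document analysis": 1,
    "theoretical": 4,
    "overview/survey": 3,
    "method not identifiable": 18,
}

total_studies = sum(design_counts.values())

# Share of each design as a whole-number percentage of all reviewed studies.
design_shares = {
    design: round(100 * count / total_studies)
    for design, count in design_counts.items()
}

print("Total studies:", total_studies)
for design, share in design_shares.items():
    print(f"{design}: n = {design_counts[design]} ({share}%)")
```

Because each share is rounded independently, the whole-number percentages need not add up to exactly 100.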

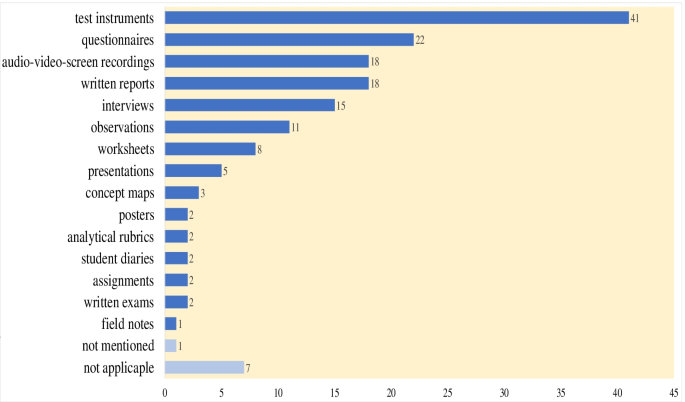

Various data collection methods were used in the reviewed studies, with (non-standardized) test instruments being the most frequently used method (see Fig. 3). The majority of the studies (44 of 75 studies, 59%) used more than one data collection method, whereas 23 studies (31%) used only one method. Seven papers reporting theoretical and overview/survey studies were not applicable to this category. We took into account that data collection might not be restricted to the evaluation of modelling competencies but could also cover other constructs, such as beliefs or attitudes.

Data collection methods used in the reviewed studies

3.1.4 Focus samples, sample sizes, and study participants’ levels of education

In this review study, we analyzed the sample characteristics of the reviewed studies. When categorizing the participants, we relied on the authors’ reports of the participants’ educational level. It has to be taken into account that there is no clear international distinction between elementary and lower secondary education, as elementary education covers years 1 to 4 in some systems and years 1 to 6 in others.

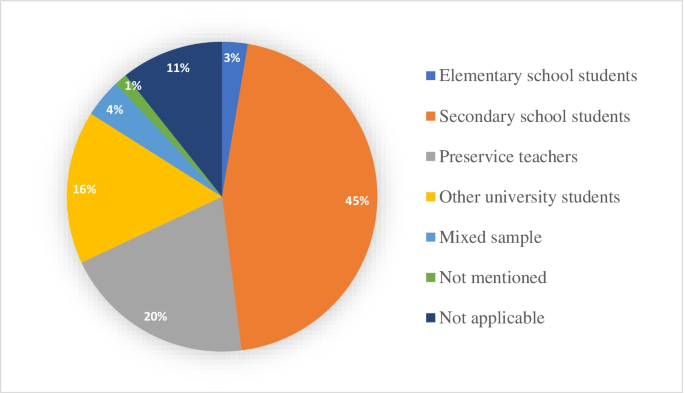

The analysis showed that the largest share of the reviewed studies (45%, n = 34) recruited secondary school students (grades 6–12), and 20% (n = 15) used samples of preservice (mathematics) teachers. Moreover, 12 studies (16%) focused on university students, including engineering students (n = 8), mathematics and natural science students (n = 1), and students from an interdisciplinary program (n = 1), an introductory and interdisciplinary study program in science (n = 1), and STEM education (n = 1). Additionally, three studies (4%) used mixed samples of students, preservice teachers, and experienced teachers as their participants. Only two studies (3%) dealt with elementary school students (one focusing on first graders and the other on fifth graders). One study did not mention the sample. The remaining eight studies (11%) were not applicable to this category, as they did not report on an empirical study or were of a theoretical nature. Figure 4 illustrates the distribution of the reviewed studies’ participants.

Participants in the reviewed studies

Table 5 shows the analysis of the sample sizes of the studies. The largest group of studies (32%, n = 24) recruited 0-50 participants, and overall, 51% (n = 38) had fewer than 200 participants. Additionally, 14 studies (19%) conducted research with 201-500 participants, and 3 large-scale studies (4%) had more than 1,000 participants. We also analyzed the sample sizes of the studies based on the participants’ levels of education, using two categories (school/university students and preservice teachers). The results for these two categories did not differ substantially from each other; the most striking difference was in the range of 201-500 participants, where the participants were school students. The table illustrates the difficulties in higher education in collecting data from larger samples.

3.1.5 Participants’ previous modelling experience and modelling task types

Since participants’ previous modelling experience was mentioned as an important factor for success by several studies, we analyzed this information given in the papers. Our results showed that in 25% ( n = 19) of the studies, participants had very limited or no experience in modelling (i.e., they had participated in only one or two modelling activities by the time of the study). Furthermore, two studies reported that their participants had previous modelling experience (e.g., experience gained by attending modelling courses and seminars) before the research interventions. One study mentioned that the participants were heterogeneous in terms of their experience in modelling. Besides these results, we found that the majority of the reviewed studies (65%, n = 45) did not provide any information regarding participants’ previous experience in modelling.

In our review, we also evaluated how modelling activities were carried out during the research interventions and which types of tasks were used by the studies. The results revealed that modelling activities were predominantly performed in group work (37%, n = 28); 5 studies (7%) reported that they guided participants in individual/independent modelling activities, and another 6 studies (8%) stated that they used both individual and group work. However, numerous reviewed studies (39%, n = 29) did not state how they performed modelling activities.

The types of modelling tasks used were not clearly described in the majority of the reviewed studies (59%, n = 44). Because many papers provided no detailed information about the tasks, we could not apply a predefined classification scheme, although we acknowledge the advantages of such schemes; instead, we used the classification given by the authors. On this basis, we found that 11 studies (15%) applied more than one task type, whereas 18 studies (24%) employed a single type of modelling task. The results on the types of tasks used by the reviewed studies are displayed in Table 6.

Related to the limited information on task types, many papers also lacked information about the context of the modelling examples (e.g., closeness to students’ world, workplace, citizenship, etc.), which did not allow us to analyze this important category.

3.2 Results concerning the theoretical frameworks for modelling competencies (research question 2)

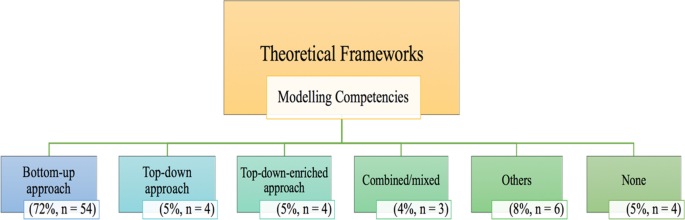

In our review, we classified the theoretical frameworks of the studies for mathematical modelling competencies into six categories. The main approaches used were as described in the theoretical part of this paper, and the results are displayed in Fig. 5 . The most prevalent theoretical framework was the bottom-up approach; other different theoretical frameworks comprised model-eliciting activities and principles as assessment tools for modelling competencies (Lesh et al., 2000 ), level-oriented descriptions of modelling competencies (Henning & Keune, 2007 ), or the framework for success in modelling (Stillman et al., 2007 ). Overall, the results indicated a scarcity of theoretical frameworks underpinning studies that investigated modelling competencies.

Theoretical frameworks of the studies on modelling competencies

3.3 Results concerning the measurement of modelling competencies (research question 3)

Our results revealed that a number of methods were used to measure modelling competencies. The most prevalent approaches used (mainly non-standardized) test instruments (55%, n = 41), written reports, and audio/video and screen recordings (24%, n = 18). The least-used methods were multidimensional item response theory (IRT) approaches ( n = 1) and field notes ( n = 1). Table 7 illustrates the methods described by the researchers for measuring modelling competencies.

The reviewed studies applied 16 different approaches to measure mathematical modelling competencies: 33% (n = 25) of the studies used one method, 27% (n = 20) applied two methods, 17% (n = 13) used three methods, 7% (n = 5) followed four methods, 4% (n = 3) applied five methods, and one study used six different measurement methods for modelling competencies. For one of the reviewed studies, the modelling competency measurement method was not specified.

3.4 Results concerning the fostering of modelling competencies (research question 4)