Eberly Center

What are Rubrics?

A rubric is a scoring tool that explicitly represents the performance expectations for an assignment or piece of work. A rubric divides the assigned work into component parts and provides clear descriptions of the characteristics of the work associated with each component, at varying levels of mastery. Rubrics can be used for a wide array of assignments: papers, projects, oral presentations, artistic performances, group projects, etc. Rubrics can be used as scoring or grading guides, to provide formative feedback to support and guide ongoing learning efforts, or both.

Advantages of Using Rubrics

Using a rubric provides several advantages to both instructors and students. Grading according to an explicit and descriptive set of criteria that is designed to reflect the weighted importance of the objectives of the assignment helps ensure that the instructor’s grading standards don’t change over time. Grading consistency is difficult to maintain over time because of fatigue, shifting standards based on prior experience, or intrusion of other criteria. Furthermore, rubrics can reduce the time spent grading by reducing uncertainty and by allowing instructors to refer to the rubric description associated with a score rather than having to write long comments. Finally, grading rubrics are invaluable in large courses that have multiple graders (other instructors, teaching assistants, etc.) because they can help ensure consistency across graders and reduce the systematic bias that can be introduced between graders.

Used more formatively, rubrics can help instructors get a clearer picture of the strengths and weaknesses of their class. By recording the component scores and tallying up the number of students scoring below an acceptable level on each component, instructors can identify those skills or concepts that need more instructional time and student effort.
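The tally described above can be sketched in a few lines of Python. This is only an illustration: the student names, component names, scores, and the `acceptable` threshold are all made up, and a real gradebook would supply its own.

```python
from collections import Counter

# Hypothetical component scores on a 1-4 scale (not from any real course).
scores = {
    "student_a": {"thesis": 3, "evidence": 2, "organization": 4},
    "student_b": {"thesis": 2, "evidence": 1, "organization": 3},
    "student_c": {"thesis": 4, "evidence": 2, "organization": 3},
}

def count_below(scores, acceptable=3):
    """Count, per component, how many students scored below `acceptable`."""
    tally = Counter()
    for components in scores.values():
        for component, score in components.items():
            if score < acceptable:
                tally[component] += 1
    return tally

below = count_below(scores)
# Components with the most students below the threshold come first.
for component, n in below.most_common():
    print(f"{component}: {n} student(s) below the acceptable level")
```

Components that never fall below the threshold simply do not appear in the printed list, so the output doubles as a short list of skills that may need more instructional time.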

Grading rubrics are also valuable to students. A rubric can help instructors communicate to students the specific requirements and acceptable performance standards of an assignment. When rubrics are given to students with the assignment description, they can help students monitor and assess their progress as they work toward clearly indicated goals. When assignments are scored and returned with the rubric, students can more easily recognize the strengths and weaknesses of their work and direct their efforts accordingly.

Examples of Rubrics

Here are links to a diverse set of rubrics designed by Carnegie Mellon faculty and faculty at other institutions. Although your particular field of study and type of assessment activity may not be represented currently, viewing a rubric that is designed for a similar activity may provide you with ideas on how to divide your task into components and how to describe the varying levels of mastery.

Paper Assignments

- Example 1: Philosophy Paper This rubric was designed for student papers in a range of philosophy courses, CMU.

- Example 2: Psychology Assignment Short, concept application homework assignment in cognitive psychology, CMU.

- Example 3: Anthropology Writing Assignments This rubric was designed for a series of short writing assignments in anthropology, CMU.

- Example 4: History Research Paper. This rubric was designed for essays and research papers in history, CMU.

Projects

- Example 1: Capstone Project in Design This rubric describes the components and standard of performance from the research phase to the final presentation for a senior capstone project in the School of Design, CMU.

- Example 2: Engineering Design Project This rubric describes performance standards on three aspects of a team project: Research and Design, Communication, and Team Work.

Oral Presentations

- Example 1: Oral Exam This rubric describes a set of components and standards for assessing performance on an oral exam in an upper-division history course, CMU.

- Example 2: Oral Communication

- Example 3: Group Presentations This rubric describes a set of components and standards for assessing group presentations in a history course, CMU.

Class Participation/Contributions

- Example 1: Discussion Class This rubric assesses the quality of student contributions to class discussions. This is appropriate for an undergraduate-level course, CMU.

- Example 2: Advanced Seminar This rubric is designed for assessing discussion performance in an advanced undergraduate or graduate seminar.

Rubrics for Oral Presentations

Introduction

Many instructors require students to give oral presentations, which they evaluate and count in students’ grades. It is important that instructors clarify their goals for these presentations as well as the student learning objectives to which they are related. Embedding the assignment in course goals and learning objectives allows instructors to be clear with students about their expectations and to develop a rubric for evaluating the presentations.

A rubric is a scoring guide that articulates and assesses specific components and expectations for an assignment. Rubrics identify the various criteria relevant to an assignment and then explicitly state the possible levels of achievement along a continuum, so that an effective rubric accurately reflects the expectations of an assignment. Using a rubric to evaluate student performance has advantages for both instructors and students.

Rubrics can be either analytic or holistic. An analytic rubric comprises a set of specific criteria, with each one evaluated separately and receiving a separate score. The template resembles a grid with the criteria listed in the left column and levels of performance listed across the top row, using numbers and/or descriptors. The cells within the center of the rubric contain descriptions of what expected performance looks like for each level of performance.
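The grid layout of an analytic rubric maps naturally onto a small data structure. The sketch below is one possible representation, not a standard format: the criterion names and level labels come from the lists in this guide, while the cell descriptions and the 1-to-3 scoring are invented for illustration.

```python
# Hypothetical analytic rubric: criteria are the rows, levels are the columns.
LEVELS = ["Weak", "Satisfactory", "Strong"]  # scored 1, 2, 3

RUBRIC = {
    "Knowledge of content": [
        "Frequent factual errors",
        "Mostly accurate, with some gaps",
        "Accurate and thorough",
    ],
    "Organization of content": [
        "Hard to follow",
        "Clear overall, with some abrupt transitions",
        "Logical and easy to follow",
    ],
}

def score_presentation(ratings):
    """Return (total score, feedback lines) for a dict of criterion -> level."""
    total = 0
    feedback = []
    for criterion, level in ratings.items():
        column = LEVELS.index(level)  # position of the level in the top row
        total += column + 1           # each criterion is scored separately
        feedback.append(f"{criterion}: {level} ({RUBRIC[criterion][column]})")
    return total, feedback

total, feedback = score_presentation(
    {"Knowledge of content": "Strong", "Organization of content": "Satisfactory"}
)
print(total)
print("\n".join(feedback))
```

Because each cell already holds a description, the feedback lines can be returned to students without writing long comments by hand.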

A holistic rubric consists of a set of descriptors that generate a single, global score for the entire work. The single score is based on raters’ overall perception of the quality of the performance. Often, sentence- or paragraph-length descriptions of different levels of competencies are provided.

When applied to an oral presentation, rubrics should reflect the elements of the presentation that will be evaluated as well as their relative importance. Thus, the instructor must decide whether to include dimensions relevant to both form and content and, if so, which ones. The instructor must also decide how to weight each of the dimensions: are they all equally important, or are some more important than others? Finally, if the presentation represents a group project, the instructor must decide how to balance grading individual and group contributions.

Creating Rubrics

The steps for creating an analytic rubric include the following:

1. Clarify the purpose of the assignment. What learning objectives are associated with the assignment?

2. Look for existing rubrics that can be adopted or adapted for the specific assignment

3. Define the criteria to be evaluated

4. Choose the rating scale to measure levels of performance

5. Write descriptions for each criterion for each performance level of the rating scale

6. Test and revise the rubric

Examples of criteria that have been included in rubrics for evaluating oral presentations include:

- Knowledge of content

- Organization of content

- Presentation of ideas

- Research/sources

- Visual aids/handouts

- Language clarity

- Grammatical correctness

- Time management

- Volume of speech

- Rate/pacing of speech

- Mannerisms/gestures

- Eye contact/audience engagement

Examples of scales/ratings that have been used to rate student performance include:

- Strong, Satisfactory, Weak

- Beginning, Intermediate, High

- Exemplary, Competent, Developing

- Excellent, Competent, Needs Work

- Exceeds Standard, Meets Standard, Approaching Standard, Below Standard

- Exemplary, Proficient, Developing, Novice

- Excellent, Good, Marginal, Unacceptable

- Advanced, Intermediate High, Intermediate, Developing

- Exceptional, Above Average, Sufficient, Minimal, Poor

- Master, Distinguished, Proficient, Intermediate, Novice

- Excellent, Good, Satisfactory, Poor, Unacceptable

- Always, Often, Sometimes, Rarely, Never

- Exemplary, Accomplished, Acceptable, Minimally Acceptable, Emerging, Unacceptable

Grading and Performance Rubrics Carnegie Mellon University Eberly Center for Teaching Excellence & Educational Innovation

Creating and Using Rubrics Carnegie Mellon University Eberly Center for Teaching Excellence & Educational Innovation

Using Rubrics Cornell University Center for Teaching Innovation

Building a Rubric University of Texas/Austin Faculty Innovation Center

Building a Rubric Columbia University Center for Teaching and Learning

Creating and Using Rubrics Yale University Poorvu Center for Teaching and Learning

Types of Rubrics DePaul University Teaching Commons

Creating Rubrics University of Texas/Austin Faculty Innovation Center

Examples of Oral Presentation Rubrics

Oral Presentation Rubric Pomona College Teaching and Learning Center

Oral Presentation Evaluation Rubric University of Michigan

Oral Presentation Rubric Roanoke College

Oral Presentation: Scoring Guide Fresno State University Office of Institutional Effectiveness

Presentation Skills Rubric State University of New York/New Paltz School of Business

Oral Presentation Rubric Oregon State University Center for Teaching and Learning

Oral Presentation Rubric Purdue University College of Science

Group Class Presentation Sample Rubric Pepperdine University Graziadio Business School


How to (Effectively) Use a Presentation Grading Rubric

Almost all higher education courses these days require students to give a presentation, which can be a beast to grade. But there’s a simple tool to keep your evaluations on track.

Enter: The presentation grading rubric.

With a presentation grading rubric, giving feedback is simple. Rubrics help instructors standardize criteria and provide consistent scoring and feedback for each presenter.

How can presentation grading rubrics be used effectively? Here are 5 ways to make the most of your rubrics.

1. Find a Good Customizable Rubric

There’s practically no limit to how rubrics are used, and there are oodles of presentation rubrics on Pinterest and Google Images. But not all rubrics are created equal.

Professors need to be picky when choosing a presentation rubric for their courses. Rubrics should clearly define the target that students are aiming for and describe performance.

2. Fine-Tune Your Rubric

Make sure your rubric accurately reflects the expectations you have for your students. It may be helpful to ask a colleague or peer to review your rubric before putting it to use. After using it for an assignment, you could take notes on the rubric’s efficiency as you grade.

You may need to tweak your rubric to correct common misunderstandings or meet the criteria for a specific assignment. Make adjustments as needed and frequently review your rubric to maximize its effectiveness.

3. Discuss the Rubric Beforehand

On her blog Write-Out-Loud, Susan Dugdale advises against keeping rubrics a secret. Rubrics should be openly discussed before a presentation is given. Make sure that reviewing your rubric with students is on your lesson plan.

Set aside time to discuss the criteria with students ahead of presentation day so they know where to focus their efforts. To help students better understand the rubric, play a clip of a presentation and have students use the rubric to grade the video. Go over what grade students gave the presentation and why, based on the rubric’s standards. Then explain how you would grade the presentation as an instructor. This will help your students internalize the rubric as they prepare for their presentations.

4. Use the Rubric Consistently

Rubrics help maintain fairness in grading. When presentation time arrives, use a consistent set of grading criteria across all speakers to keep grading unbiased.

Rubrics are also useful for assigning quantitative scores to every student in a cohort across multiple presentations. These scores show where each student started relative to the rest of the class and which students made the most progress. Taken together, the data show how effective or ineffective the feedback has been.
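As a rough illustration of tracking rubric scores over time, the sketch below compares each student's first and last presentation. The names, totals, and the choice of "last minus first" as the progress measure are all assumptions made for the example.

```python
from statistics import mean

# Hypothetical rubric totals per student, in presentation order.
totals = {
    "student_a": [12, 15, 18],
    "student_b": [17, 17, 19],
    "student_c": [9, 14, 16],
}

def progress_report(totals):
    """Report each student's gain and starting point relative to the class."""
    class_start = mean(scores[0] for scores in totals.values())
    report = {}
    for student, scores in totals.items():
        report[student] = {
            "gain": scores[-1] - scores[0],             # last minus first
            "start_vs_class": scores[0] - class_start,  # above or below average
        }
    return report

report = progress_report(totals)
most_improved = max(report, key=lambda student: report[student]["gain"])
print(most_improved, report[most_improved]["gain"])
```

A negative `start_vs_class` marks a student who began below the class average, which makes it easier to see whether feedback is helping the students who needed it most.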

5. Share Your Feedback

If you’re using an electronic system, sharing feedback might be automatic. If you’re using paper, try to give copies to presenters as soon as possible. This will help them incorporate your feedback while everything is still fresh in their minds.

If you’re looking to use rubrics electronically, check out GoReact, the #1 video platform for skill development. GoReact allows you to capture student presentations on video for feedback, grading, and critique. The software includes a rubric builder that you can apply to recordings of any kind of presentation.

Presenters can receive real-time feedback by live recording directly to GoReact with a webcam or smartphone. Instructors and peers submit feedback during the presentation. Students improve astronomically.

A presentation grading rubric is a simple way to keep your evaluations on track. Remember to use a customizable rubric, discuss the criteria beforehand, follow a consistent set of grading criteria, make necessary adjustments, and quickly share your feedback.

By following these five steps, both you and your students can reap the benefits that great rubrics have to offer.


Presentation Rubric for a College Project

We seem to have an unavoidable relationship with public speaking throughout our lives. From our kindergarten years, when our presentations are nothing more than a few seconds of reciting cute words in front of our class…

...till our grown up years, when things get a little more serious, and the success of our presentations may determine getting funds for our business, or obtaining an academic degree when defending our thesis.

By the time we reach our mid-20s, we become worryingly used to evaluations based on our presentations. Yet, for some reason, we’re rarely told the traits upon which we are being evaluated. Most colleges and business schools, for instance, use a PowerPoint presentation rubric to evaluate their students. Funny thing is, they’re not usually that open about sharing it with their students (as if that would do any harm!).

What is a presentation rubric?

A presentation rubric is a systematic and standardized tool used to evaluate and assess the quality and effectiveness of a presentation. It provides a structured framework for instructors, evaluators, or peers to assess various aspects of a presentation, such as content, delivery, organization, and overall performance. Presentation rubrics are commonly used in educational settings, business environments, and other contexts where presentations are a key form of communication.

A typical presentation rubric includes a set of criteria and a scale for rating or scoring each criterion. The criteria are specific aspects or elements of the presentation that are considered essential for a successful presentation. The scale assigns a numerical value or descriptive level to each criterion, ranging from poor or unsatisfactory to excellent or outstanding.

Common criteria found in presentation rubrics may include:

- Content: This criterion assesses the quality and relevance of the information presented. It looks at factors like accuracy, depth of knowledge, use of evidence, and the clarity of key messages.

- Organization: Organization evaluates the structure and flow of the presentation. It considers how well the introduction, body, and conclusion are structured and whether transitions between sections are smooth.

- Delivery: Delivery assesses the presenter's speaking skills, including vocal tone, pace, clarity, and engagement with the audience. It also looks at nonverbal communication, such as body language and eye contact.

- Visual Aids: If visual aids like slides or props are used, this criterion evaluates their effectiveness, relevance, and clarity. It may also assess the design and layout of visual materials.

- Audience Engagement: This criterion measures the presenter's ability to connect with the audience, maintain their interest, and respond to questions or feedback.

- Time Management: Time management assesses whether the presenter stayed within the allotted time for the presentation. Going significantly over or under the time limit can affect the overall effectiveness of the presentation.

- Creativity and Innovation: In some cases, rubrics may include criteria related to the creative and innovative aspects of the presentation, encouraging presenters to think outside the box.

- Overall Impact: This criterion provides an overall assessment of the presentation's impact on the audience, considering how well it achieved its intended purpose and whether it left a lasting impression.

“We’re used to giving presentations, yet we’re rarely told the traits upon which we’re being evaluated.”

Well, we don’t believe in shutting down information. Quite the contrary: we think the best way to practice your speech is to know exactly what is being tested! By evaluating each trait separately, you can:

- Acknowledge the complexity of public speaking, which goes far beyond subject knowledge.

- Address your weaker spots, and work on them to improve your presentation as a whole.

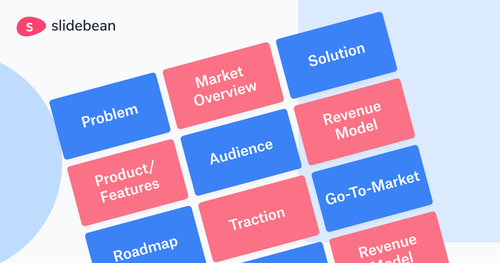

I’ve assembled a simple Presentation Rubric, based on a great document by the NC State University, and I've also added a few rows of my own, so you can evaluate your presentation in pretty much any scenario!


What is tested in this PowerPoint presentation rubric?

The Rubric contemplates 7 traits, which are as follows:

Now let's break down each trait so you can understand what they mean, and how to assess each one:

Presentation Rubric

How to use this Rubric

The Rubric is pretty self explanatory, so I'm just gonna give you some ideas as to how to use it. The ideal scenario is to ask someone else to listen to your presentation and evaluate you with it. The less that person knows you, or what your presentation is about, the better.

WONDERING WHAT YOUR SCORE MAY INDICATE?

- 21-28 Fan-bloody-tastic!

- 14-21 Looking good, but you can do better

- 7-14 Uhmmm, you ain't at all ready
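If you'd rather let a script do the adding up, here's a minimal sketch. It assumes each of the 7 traits is scored from 1 to 4 (which matches the 7-28 range above but isn't stated outright), and since 14 and 21 appear in two bands each, it assumes those boundary totals count toward the higher band.

```python
BANDS = [
    (21, "Fan-bloody-tastic!"),
    (14, "Looking good, but you can do better"),
    (7, "Uhmmm, you ain't at all ready"),
]

def interpret(trait_scores):
    """Return (total, band) for seven trait scores."""
    if len(trait_scores) != 7:
        raise ValueError("expected one score per trait (7 traits)")
    if any(not 1 <= score <= 4 for score in trait_scores):
        raise ValueError("each trait score is assumed to be between 1 and 4")
    total = sum(trait_scores)
    # Assumption: a total that sits on a shared boundary (14 or 21)
    # is placed in the higher band.
    for lower_bound, label in BANDS:
        if total >= lower_bound:
            return total, label

print(interpret([4, 4, 3, 3, 3, 2, 2]))
```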

As we don't always have someone to rehearse our presentations with, a great way to use the Rubric is to record yourself (this is not Hollywood material so an iPhone video will do!), watch the video afterwards, and evaluate your presentation on your own. You'll be surprised by how different your perception of yourself is, in comparison to how you see yourself on video.


It will be fairly easy to evaluate each trait! The mere exercise of reading the Presentation Rubric is an excellent study on presenting best practices.

If you're struggling with any particular trait, I suggest you take a look at our Academy Channel where we discuss how to improve each trait in detail!

It's not always easy to objectively assess our own speaking skills. So the next time you have a big presentation coming up, use this Rubric to put yourself to the test!

Need support for your presentation? Build awesome slides using our very own Slidebean.

Related video

Upcoming events, from pitch deck to funding: a crash course, crash course in financial modeling, popular articles.

AirBnb Pitch Deck: Teardown and Redesign (FREE Download)

What is a Pitch Deck? Meaning, Example, and Guide

Let’s move your company to the next stage 🚀

Ai pitch deck software, pitch deck services.

Financial Model Consulting for Startups 🚀

We can help craft the perfect pitch deck 🚀

The all-in-one pitch deck software 🚀

This presentation software list is the result of weeks of research of 50+ presentation tools currently available online. It'll help you compare and decide.

%20(1)%20(2).webp)

A pitch deck is an essential tool for startup founders, especially in the early stages, as it helps them connect with potential investors and secure crucial venture capital funding. It serves multiple purposes, all of which are key to a startup's growth path. Here, we outline them.

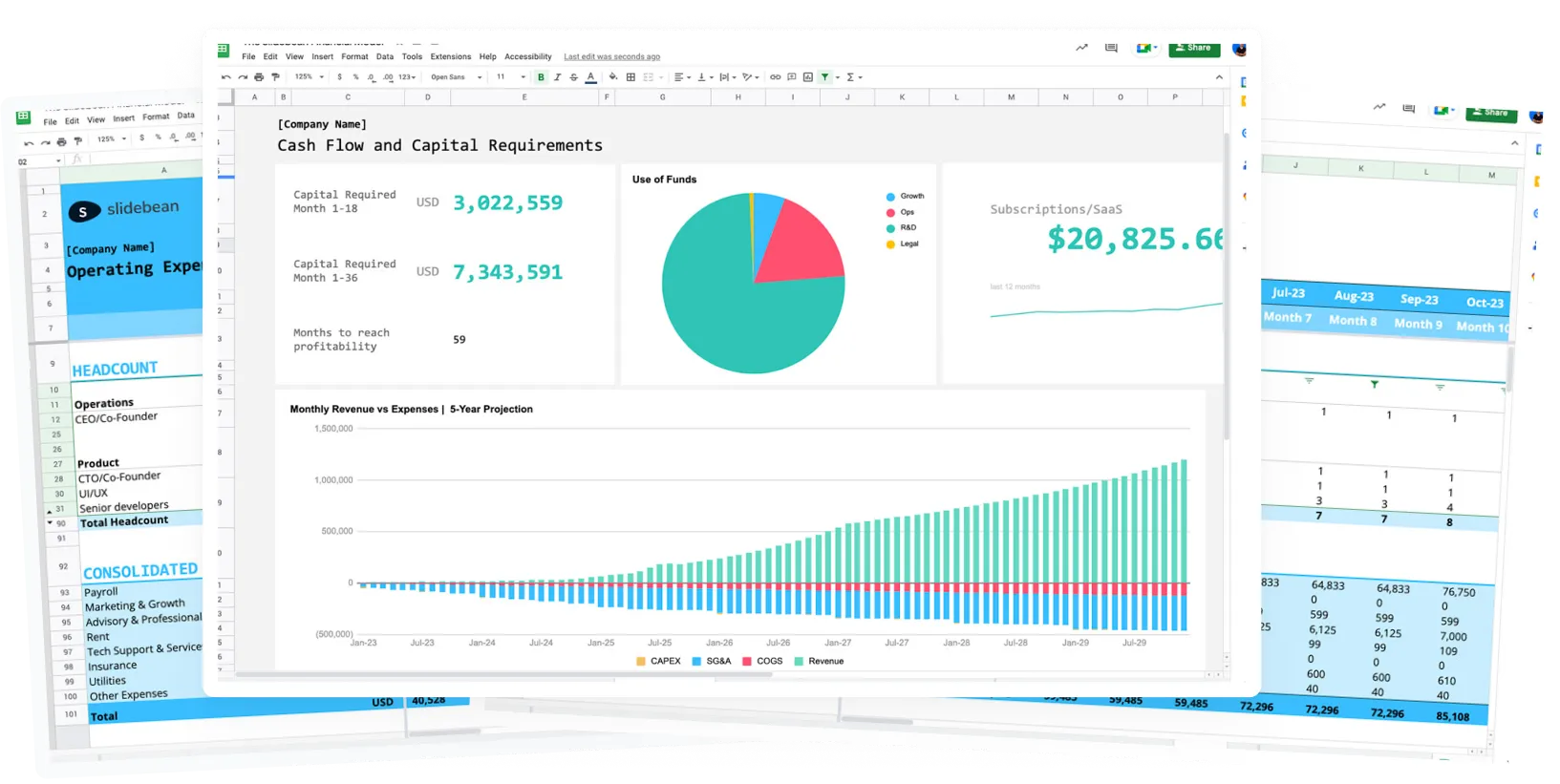

This is a functional model you can use to create your own formulas and project your potential business growth. Instructions on how to use it are on the front page.

Book a call with our sales team

In a hurry? Give us a call at

November 11, 2024

Presentation scoring rubric: What is it and how to make one

Need a presentation scoring rubric? Here's a helpful template to get started

Do you need to grade students or evaluate coworkers on their presentations? You can use a presentation scoring rubric to provide feedback for improvement.

We’ll explain what a presentation scoring rubric is, how to create one, and how to use Plus AI to gather more details about this helpful tool.

What is a presentation scoring rubric?

Most commonly used in educational scenarios, a presentation scoring rubric is used to assess a presentation. The scoring rubric includes specific criteria for a structured framework to measure performance.

The rubric is not only intended to provide a score (and thus a grade) to the student or presenter, but to identify strengths and weaknesses to guide the presenter toward improvement.

In business scenarios, a scoring rubric can be used for training purposes or for refining presentations when those presentations are key forms of communication.

Scoring rubric categories

You’ll find several categories for the scoring criteria. Most are common to presentations; however, you can add, remove, or adjust the categories per your scenario.

- Content: Relevance of the presented information.

- Organization: Sequence or flow of the presentation.

- Knowledge: Understanding of the topic.

- Communication or delivery: Speaking skills and nonverbal communication.

- Engagement: Ability to connect with the audience.

- Visuals or visual aids: Graphics, media, design, and layout.

- Mechanics: Grammar and spelling.

- Time management: Ability to meet time limits or requirements.

- Creativity: Innovative aspects of the presentation.

- Overall impact: Overall assessment of how well the presentation achieved its purpose and impacted the audience.

You can adjust your scoring rubric accordingly for each course, project, or other scenario. For example, if the presentation is for a class project with certain requirements, you might add a specific category to score how well the student meets each requirement.

Tip: Share these ways to make Google Slides look good or improve the appearance of your PowerPoint slides!

Rubric scoring scale

Presentation scoring rubrics normally use a three-, four-, or five-point scale. The scale can use a numerical and/or descriptive scoring system.

For instance, you can score each category on a scale from 1 to 5 with 1 being Unacceptable and 5 being Excellent. Or, you can score with a scale from 1 to 3 with 1 being Weak and 3 being Strong.

Each category includes a description for every score on the scale. To earn a given score in a category, the presenter must meet the description provided for that score. Here’s an example for a Content category with a 4-point scoring scale.

The person evaluating the presentation enters the score for each category in the column on the right. When the presentation is complete, the total score is calculated, provided to the presenter, and optionally discussed with them.

It’s also helpful to include a key for understanding the final score as shown in this example:

Tip: Help your presenters by sharing these top tips for effective presentations.

How do you create a presentation scoring rubric?

To create a presentation scoring rubric, you can use your go-to word processing application like Google Docs or Microsoft Word.

Insert a table which lists the criteria in the left-hand column, scoring scale in the top row, and a score column (for each category) on the right. You can also include a total score at the bottom as shown below.

To assemble the rubric:

- Define and list the criteria you want to use. Again, the categories can differ depending on the type and purpose of the presentation.

- Determine and enter the scoring scale and whether it should be numerical, descriptive, or both.

- Write the description for each category corresponding to each score on the scale.

- Optionally include a key for the total score at the bottom.
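If you prefer to generate the table rather than build it by hand, the steps above can be sketched in Python. This is a minimal example under stated assumptions: the `rubric_table` helper, the criteria, the descriptions, and the Markdown-style pipe output are all illustrative choices, not part of any template.

```python
def rubric_table(criteria, scale):
    """Build a pipe-delimited table: criteria in the left-hand column,
    the scoring scale in the top row, an empty Score column on the right,
    and a Total row at the bottom."""
    header = ["Criteria", *scale, "Score"]
    rows = [header, ["---"] * len(header)]
    for name, descriptions in criteria.items():
        if len(descriptions) != len(scale):
            raise ValueError(f"{name!r} needs one description per scale point")
        rows.append([name, *descriptions, ""])
    rows.append(["Total"] + [""] * (len(scale) + 1))
    return "\n".join("| " + " | ".join(row) + " |" for row in rows)

# Hypothetical scale and criteria for illustration.
scale = ["1 - Weak", "2 - Satisfactory", "3 - Strong"]
criteria = {
    "Content": ["Off topic", "Mostly relevant", "Fully relevant"],
    "Time management": ["Far over or under", "Slightly off", "Within the limit"],
}

table = rubric_table(criteria, scale)
print(table)
```

The printed text can be pasted into any editor that understands pipe tables, then adjusted per presentation.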

Want to use the template above? Head to the Presentation Scoring Rubric Template in Google Docs, select Make a copy, and then save the document to your account. You can then edit it per your needs and reuse it as you like.

Get help with a presentation scoring rubric from Plus AI

Plus AI is a terrific tool for creating and editing presentations, but it’s also a super helpful add-in for research! You can ask Plus to make a scoring rubric presentation and see helpful details and tips to set up your own rubric.

With useful information, you can refer to the content in this completed slideshow as you create the rubric. You may also add slides that include further details!

You can use Plus AI with Google Slides and Docs along with Microsoft PowerPoint. Check out the Plus AI website for details, example presentations, and information for starting your free trial.

If you’ve been tasked with creating a presentation scoring rubric for your students, coworkers, or peers, you now have the basics you need to get started. And remember, you can use the rubric template provided above and Plus AI for further help creating your rubric.

For your own successful slideshows, look at how to start a presentation along with how to end a presentation.

What is the 5/5/5 rule for better presentations?

Some experts recommend the 5/5/5 rule when creating presentations. This rule suggests no more than five words per line of text, five lines of text per slide, or five text-heavy slides in a row.
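For a quick self-check, the rule can be approximated in code. The sketch below is only one reading of it: the rule does not define "text-heavy," so the `heavy_lines` cutoff (a slide with four or more lines) is an assumption, as is representing a deck as a list of slides that are each a list of text lines.

```python
def check_555(slides, heavy_lines=4):
    """Return a list of warnings for a deck under the 5/5/5 rule.

    `slides` is a list of slides, each a list of text lines.
    `heavy_lines` is an assumed cutoff for calling a slide text-heavy.
    """
    warnings = []
    heavy_run = 0
    for number, lines in enumerate(slides, start=1):
        if len(lines) > 5:
            warnings.append(f"slide {number}: more than five lines")
        for line in lines:
            if len(line.split()) > 5:
                warnings.append(f"slide {number}: line over five words: {line!r}")
        # Track consecutive text-heavy slides; any lighter slide resets the run.
        heavy_run = heavy_run + 1 if len(lines) >= heavy_lines else 0
        if heavy_run > 5:
            warnings.append(f"slide {number}: over five text-heavy slides in a row")
    return warnings

deck = [
    ["Rubrics set clear expectations"],
    ["This line has far too many words in it"],
]
warnings = check_555(deck)
print("\n".join(warnings))
```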

What is the Kawasaki presentation rule?

Guy Kawasaki popularized the 10/20/30 rule for effective presentations. This rule recommends a limit of 10 slides, a total presentation time within 20 minutes, and a font size of no less than 30 points.

What is a good talking speed for a presentation?

An average of 100 to 150 words per minute is common for presentations. For details on calculating words per minute and other tools you can use, check out Finding Your Speaking Rate on the Plus website.
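To turn that range into a time estimate for a script, you can divide the word count by each rate. The function name and the 1,500-word example below are illustrative; only the 100 and 150 words-per-minute figures come from the answer above.

```python
def speaking_time_range(word_count, slow_wpm=100, fast_wpm=150):
    """Return (shortest, longest) speaking time in minutes for a script."""
    return word_count / fast_wpm, word_count / slow_wpm

# A hypothetical 1,500-word script.
shortest, longest = speaking_time_range(1500)
print(f"{shortest:.0f} to {longest:.0f} minutes")  # prints "10 to 15 minutes"
```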


Rubric Examples for Fair and Clear Student Grading

Rubrics are game-changers for grading student work fairly and clearly. Here's what you need to know:

- Rubrics are scoring guides that spell out expectations for assignments

- They keep grading consistent, clear, and efficient

- Holistic rubrics: Quick overall assessment

- Analytic rubrics: Detailed feedback on specific criteria

- Single-point rubrics: Focus on meeting expectations

- Performance-based rubrics: Assess real-world skills

- AI-enhanced rubrics: Save time and improve consistency

Good rubrics:

- Show students what's expected

- Make grading fair across assignments and teachers

- Give specific feedback for improvement

- Save teachers time while improving assessments

Bottom line: Rubrics are essential tools for effective teaching and learning. They turn vague goals into measurable points, helping students know what to do and often leading to better work.

Quick Comparison:

1. Holistic Rubrics

Holistic rubrics are the quick and dirty of grading tools. They give you one score for the whole shebang, instead of picking apart every little detail.

Why are they cool? Let's break it down:

1. They're fast

You can whip through a stack of assignments like nobody's business. One score, boom, done. It's a lifesaver when you're drowning in papers.

2. They focus on the good stuff

Instead of nitpicking every mistake, holistic rubrics look at what students did right. It's like giving a high-five instead of a red pen massacre.

3. They're flexible

Essays, art projects, interpretive dance - holistic rubrics can handle it all. They're great when the whole package matters more than the individual parts.

4. They keep things fair

When multiple teachers are grading the same thing, holistic rubrics help keep everyone on the same page. It's like having a shared grading language.

But hey, they're not perfect:

- Students might be left scratching their heads, wondering why they got a certain score.

- It can be tricky to score work that's awesome in some ways but not so hot in others.

Here's a simple holistic rubric for a history essay:

Holistic rubrics are perfect for quick checks or when you want to see the big picture without getting lost in the weeds.

As one teacher put it, "Holistic rubrics show what students CAN do, not what they can't." It's like giving a pat on the back instead of a punch in the gut.

2. Analytic Rubrics

Analytic rubrics are like a detailed map for grading. They break assignments into specific parts, giving students a clear picture of what's expected. Here's why they're so useful:

These rubrics are perfect for complex tasks where you want to give detailed feedback. They let you zoom in on each important part of an assignment. Think of a history essay - you might grade research, argument structure, and writing style separately.

Dr. Dana Dawson from Temple University says:

"Taking the time to reflect on your goals for an activity or assignment and to concretely articulate your expectations will not only improve the quality of the rubric you create, but will help guide your instruction."

Why use analytic rubrics? They offer:

- Specific feedback: Students know exactly what they did well and where to improve.

- Fair grading: Every part of the assignment gets attention.

- Clear learning goals: Students see which skills they need to work on.

But watch out - these rubrics can get complicated. Most use 3-5 levels for each criterion. Don't go overboard, or you might confuse students instead of helping them.

Here's a tip: Weight different parts of your rubric based on how important they are. This shows students where to focus for the biggest grade impact.
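Here's a rough sketch of how weighting could work for the history essay mentioned earlier. The weights, the 4-level scale, and the conversion to a percentage are all assumptions for the example, so swap in whatever fits your assignment.

```python
# Hypothetical weights for a history essay; they should sum to 1.
WEIGHTS = {"research": 0.4, "argument": 0.4, "style": 0.2}

def weighted_grade(levels, weights=WEIGHTS, max_level=4):
    """Turn per-criterion levels (1..max_level) into a percentage grade."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights should sum to 1")
    # Each criterion contributes its share of the grade, scaled by the
    # fraction of the top level that the student reached.
    return 100 * sum(weights[c] * levels[c] / max_level for c in weights)

grade = weighted_grade({"research": 4, "argument": 3, "style": 2})
print(f"{grade:.1f}")  # prints "80.0"
```

With these made-up weights, dropping a level in research costs twice as many points as dropping a level in style, which is exactly the signal you want students to see.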

Creating an analytic rubric takes some work upfront, but it's worth it. You'll set clearer expectations and help students learn more effectively. To make one, start by listing what a great assignment looks like. Then, group similar items to create your rubric categories.

3. Single-Point Rubrics

Single-point rubrics are shaking up the grading game. They're simple, focused, and getting a lot of love from teachers.

What's the deal with single-point rubrics? They show what success looks like for each part of an assignment. No fancy levels or long descriptions. Just the target.

Why are teachers digging these? Here's the scoop:

- Quick to make. Teachers focus on the good stuff, not all the ways students might mess up.

- Easy to read. Students see what they need to do without getting lost in details.

- Room for creativity. No boxes to check means students can surprise you.

- Growth-focused. It's about progress, not labels.

Jennifer Gonzalez, an educator and blogger, says:

"The simplicity of these rubrics - with just a single column of criteria, rather than a full menu of performance levels - offers a whole host of benefits."

Let's check out a real example. Here's part of a single-point rubric for second-grade writing:

Clear as day, right? Kids know exactly what to do.

But here's the cool part: Those blank spaces on the sides? That's where teachers give specific, personalized feedback. No generic comments here.

Pernille Ripp, an educator and author, puts it this way:

"Using the single-point rubric is a breeze for me compared to the multi-point rubric."

So, if you want to simplify grading and still give great feedback, single-point rubrics might be your new go-to. They're clear, flexible, and all about helping students grow.

4. Performance-Based Rubrics

Performance-based rubrics are tools that assess how well students can use their knowledge in real-world situations. They're not just about checking facts - they're about seeing how students apply what they've learned.

Why are these rubrics so useful? Here's the deal:

- They test real-world skills. It's not about memorizing - it's about doing.

- They make students think harder. We're talking analysis, evaluation, and creation.

- They set clear goals. Both teachers and students know what "good" looks like.

- They give specific feedback. Students learn exactly where they're strong and where they need work.

Philip Arcuria and Maryrose Chaaban, who know a thing or two about grading, say:

"A carefully designed rubric can provide benefits to instructors and students alike."

They're right. A good performance-based rubric makes grading fair, saves time, and shows students how to improve.

Let's look at a real example:

A Boston high school math teacher created a rubric for a probability unit in March 2022. The task? Decide if an inmate should get parole based on stats. Talk about bringing math into the real world!

Here's a simple version of that rubric:

This rubric isn't just checking math skills. It's seeing if students can analyze data, use statistical concepts, and make smart decisions based on evidence. That's the power of these rubrics - they connect classroom learning to real problem-solving.

When you're making your own performance-based rubrics:

- Match them to your learning goals

- Be clear about what success looks like

- Use words you and your students get

- Focus on stuff you can see and measure

Performance-based rubrics take grading to the next level. They show what students can DO, not just what they know. And in the end, isn't that what learning is all about?

5. AI-Enhanced Rubrics

AI-powered rubric generators are changing how teachers create and use grading criteria. These tools save time and make student assessment more consistent. Let's look at a standout example:

CoGrader: AI-Powered Rubric Creation

CoGrader is shaking up rubric creation for teachers. Here's why it's turning heads:

- It cuts rubric creation time by 80%

- It aligns with Grade Level Standards

- Teachers can adjust AI-generated rubrics or make their own

- It offers over 30 pre-made rubrics

But the real impact is on teachers and students. Nikki E., a high school English teacher from California, says:

"CoGrader's qualitative feedback is spot-on. It's so precise, it's mind-blowing. It saves me time, and my students get more thorough feedback than if I were grading by hand."

This shows that AI-enhanced rubrics aren't just about saving time. They're about giving better, more detailed feedback to help students improve.

By using AI, teachers can create rubrics that are:

- Consistent: AI applies grading criteria the same way for all student work

- Detailed: It can create nuanced performance descriptors for each criterion

- Aligned: Rubrics can easily match specific learning goals and standards

AI-enhanced rubrics like those from CoGrader are becoming a key tool for teachers. They're not replacing human judgment – they're making it better. This allows teachers to give fairer, clearer, and more helpful feedback to their students.

Rubrics have changed the game in student assessment. They bring fairness and clarity to grading. We've looked at different types of rubrics, from basic to AI-powered. It's clear: these tools are must-haves for teachers and students alike.

Good rubrics do a lot:

- They show students exactly what's expected

- They make grading fair across different assignments and teachers

- They give students specific feedback to improve

- They save teachers time while making assessments better

Amy Pinkerton from the Center for Teaching and Learning says:

"Rubrics are useful tools that faculty and teaching assistants can use to give their students clear and consistent feedback."

This clarity is key. Rubrics turn vague goals like "good writing" into specific, measurable points. This helps students know what to do and often leads to better work.

But rubrics do more than just help with one assignment. They can improve how we design courses, teach, and understand student learning. They shift focus from teacher-centered grading to student-centered feedback that boosts achievement.

Check out these results:

- AI tools like CoGrader can cut grading time by up to 80%

- Students feel less stressed when they know what's expected

- Rubrics can boost students' confidence by showing them what skills they need

Remember, making good rubrics is an ongoing job. Linda Suskie, who wrote "Assessing Student Learning: A Common Sense Guide", puts it this way:

"Rubrics make scoring more accurate, unbiased, and consistent."

To keep this up, teachers need to keep tweaking their rubrics based on how students do and what they say.

What does a good rubric look like?

A good rubric is like a GPS for academic success. It pinpoints exactly what students need to do to excel. Here's the secret sauce of a top-notch rubric:

- It spells out clear criteria

- It describes different performance levels

- It uses student-friendly language

- It focuses on measurable outcomes

Philip Arcuria and Maryrose Chaaban, assessment gurus, put it this way:

"The best rubrics will typically include specific criteria relevant to the task or assignment at hand, as well as a set of descriptors that outline the different levels of performance that learners may achieve."

Let's get real with an example. Picture grading a history essay. Your rubric might look like this:

This rubric paints a clear picture of what's expected at each level. It's specific, measurable, and easy to grasp.

But here's the kicker: a good rubric isn't just about slapping on a grade. It's a teaching powerhouse. Susan M. Brookhart, a big shot in classroom assessment, says:

"What do effective rubrics look like? They're more than just a checklist, but rather guidelines that focus on skills that demonstrate learning."

So, next time you're whipping up a rubric, think about how it can steer learning, not just measure it. Your students (and your sanity) will do a happy dance!

- Open access

- Published: 04 November 2024

Students’ performance in clinical class II composite restorations: a case study using analytic rubrics

- Arwa Daghrery 1 ,

- Ghadeer Saleh Alwadai 2 ,

- Nada Ahmad Alamoudi 2 ,

- Saleh Ali Alqahtani 2 ,

- Faisal Hasan Alshehri 2 ,

- Mohammed Hussain Al Wadei 2 ,

- Naif Nabel Abogazalah 2 ,

- Gabriel Kalil Rocha Pereira 3 &

- Mohammed M Al Moaleem 4

BMC Medical Education volume 24, Article number: 1252 (2024)

The analytical rubric serves as a permanent reference for guidelines on clinical performance for undergraduate dental students. This study aims to assess the rubric system used to evaluate clinical class II composite restorations performed by undergraduate dental students and to explore the impact of gender on overall student performance across two academic years. Additionally, we investigated the relationship between cumulative grade point averages (CGPAs) and students’ clinical performance.

An analytical rubric for the assessment of clinical class II composite restoration in the academic years of 2022/2023 and 2023/2024 was used by two evaluators, who were trained to use the rubric before performing the evaluations. Five major parameters were evaluated: pre-operative procedures (10 points), cavity preparation (20 points), restoration procedures (20 points), and time management (4 points) were scored on a 4-point scale, while the chairside oral exam (15 points) was scored on a 5-point scale. Descriptive statistics were calculated for the different analytical rubric parameters, and the independent t-test was used to compare the scores between the student groups and the evaluators. Other tests, such as the Kappa test and Pearson’s correlation coefficient, were used to measure the association among CGPA, evaluators, and participant gender.

The overall score out of 69 was slightly higher for females than for males in both the 2022/2023 (61.28 vs. 59.42) and 2023/2024 (61.18 vs. 59.49) academic years, but the differences were not statistically significant. In the 2022/2023 academic year, female students scored significantly higher than male students in pre-operative procedures (p = 0.001) and in time management (p = 0.031), as assessed by both evaluators. The Kappa test demonstrated a moderate to substantial level of agreement between the two evaluators in both academic years. Strong and significant correlations were noted between students’ CGPA and some tested parameters (p < 0.001).

The overall performance was very good among both genders, and marginally higher among females than among males. This study found some differences in performance between male and female students, moderate to substantial agreement between the two raters, and similar performance among students with different CGPAs.

Undergraduate dental students are among the main care providers for restorative procedures in the university dental setting. They develop the necessary skills for clinical procedures on preclinical training using artificial typodont teeth with the aid and guidance of clinical tutors [ 1 ]. The students then further develop and practice the skills learned on patients in a clinical environment by providing a comprehensive range of operative procedures for patients under close supervision from clinical teaching staff [ 2 ]. Evaluation of the student’s performance provides them with consistent feedback and guidance to ensure they are able to deliver care equivalent to that of a practicing general dentist.

The American Dental Education Association recommends using multiple assessment methods to effectively evaluate clinical skills and competence, with the ultimate goal of developing the skills needed for effective clinical practice [ 3 , 4 ]. Global rating scales and checklists are widely used in various assessment domains, but their advantages and disadvantages are debated [ 5 ]. Learners’ competency levels are assessed across multiple skills in healthcare education, requiring assessors to provide a final percentage score [ 6 ]. Checklists use binary options to indicate student performance against predefined criteria [ 7 ]. Both approaches are attractive for higher education as they do not require additional equipment and offer flexible assessment timing [ 8 ]. The literature indicates that poorly designed rating scales without clear, objective criteria can lead to significant inter-examiner variability [ 8 ]. Rater bias and incorrect rating scale interpretation are also significant contributors to marking variance in these assessment methods [ 5 ].

Learning is facilitated when students receive feedback from assessment methods that are consistent and based on meaningful, explicit criteria [ 9 , 10 ]. Lack of uniformity, uncertainty regarding the importance of assessment, and lack of well-defined assessment criteria are commonly expressed concerns among students [ 11 ]. Numerous studies have demonstrated the positive impact that well-designed rubrics have on dental students’ learning [ 12 , 13 ]. O’Donnell et al. proposed that one way to objectify the assessment process is through the use of rubrics: “scaled tools with levels of achievement and clearly defined criteria placed in grid” [ 9 ]. Dental educators from various global regions advocate for integrating rubrics as a pedagogical instrument and emphasize the substantial value that rubrics can bring to the instructional process. A rubric is a valuable grading tool, enabling educators to assess students’ work consistently, reliably, and impartially [ 14 ]. Moreover, rubrics can improve the objectivity and consistency of evaluations across different faculty members, provide students with detailed, constructive feedback on their performance, and empower students to self-assess their work against established criteria [ 10 ].

Dental students encounter substantial stress when transitioning from the preclinical stage to the clinical stage of their education [ 15 ]. This increased stress is attributed to factors such as the unique nature of patient cases, anatomical variations among patients, and a lack of self-confidence [ 16 ]. Consequently, students face various challenges in the dental curriculum, with one specific area of significant struggle being class II composite restorations [ 17 , 18 ]. Direct class II composite restorations can present more challenges for the dentist compared to amalgam restorations. The viscoelastic properties of composite materials make them challenging to condense and adapt against the matrix band, which is a crucial step for achieving good marginal adaptation and seal [ 19 ]. Evaluating students using a detailed rubric grading system can be highly beneficial for assessing their competency in various aspects of the procedure [ 20 ]. The detailed analysis of each parameter using these rubrics can provide valuable feedback to students, highlighting their strengths and areas for improvement.

The use of rubrics in evaluating student performance across different areas of dentistry appears to be limited. Previous studies have documented the use of rubrics for assessing root canal treatment procedures [ 12 , 13 , 21 ], crown preparation skills [ 22 ], and oral presentations in the fields of orthodontics [ 23 ] and periodontics [ 24 ]. Additionally, rubrics have been employed to evaluate students’ self-assessment performance in preclinical endodontic [ 25 ] and conservative dentistry courses [ 26 ]. However, research on applying rubrics for evaluating clinical class II composite restorations is lacking.

It has been reported that socioeconomic, cultural, academic, and educational factors, as well as students’ genders and ages, have a significant association with cumulative grade point average (CGPA) scores [ 27 ]. Notably, gender is an essential individual factor that has been studied, but with varying results. A study conducted among Japanese dental students found no significant difference in grades between genders [ 28 ]. Andrade et al. 2009 [ 29 ] found significant differences related to gender, whereas Andrade et al. 2010 [ 30 ] did not; this discrepancy could be related to variables such as motivation and confidence.

A well-constructed rubric is a permanent reference for clinical performance guidelines [ 31 , 32 , 33 ]. While some studies have delved into the impact of rubrics on student performance, the research in this area is still in its nascent stage. This paper aims to investigate the rubric system for assessing clinical class II composite restoration performed by undergraduate dental students during clinical courses in two successive academic years and explore the potential of gender differences to enhance student learning experience while providing more effective feedback on student performance. Using the analytic rubric, we also investigated any association between CGPAs and students’ clinical performance. The null hypothesis was that there would be no significant differences among students of different genders regarding clinical steps and performance related to composite class II restorations during the clinical phase setting. Furthermore, CGPA would not result in a significant difference in the total analytic rubric scores obtained for the participating students.

Study design and ethical approval

This study was conducted at the Department of Restorative Dental Sciences, College of Dentistry, Jazan University. The participants were 5th-year dental students, male and female, who were attending clinical phase courses. The current study was approved by the College of Dentistry, Jazan University’s ethical committee under # CODJU-2321 F, on August 21, 2023. The study was also approved by the Standing Committee for Scientific Research, Jazan University (Approval No. REC-45/03/761), on September 22, 2023.

This study comprised male and female fifth-year students who were registered in this course throughout the academic years 2022/2023 and 2023/2024. Two faculty members with equivalent practice and experience of teaching assessed and evaluated the class II composite filling procedure in advanced clinical restorative courses, including pre-operative steps, cavity preparation assessment, composite restoration steps, and time management.

Study and investigation type

This study was a cross-sectional analysis involving fifth-year undergraduate students enrolled in a clinical operative dentistry course. The students performed various procedures, which included patient examinations, preparing a cavity for a Class II composite restoration on posterior teeth, and conducting a composite restoration as part of their final exam. This exam was scheduled at the end of the academic year and was a requirement for their clinical training. The students received clear instructions about the exercise and were informed about the criteria outlined in the analytic rubric for their assessment, as well as the time allocated for completion. Additionally, throughout the academic year, the students practiced similar exercises using different types of restorations.

The inclusion and exclusion criteria

Inclusion criteria covered regular students who had completed and passed the requirements of this course and were eligible to take their final clinical exam. Students were excluded if they were found to be dishonest, if there was any alteration to the tooth used for the class II cavity preparation and restoration, or if they could not finish the exam exercise within the provided time. The total time allowed for the exercise was two hours. After the end of the exercise, the analytic rubric evaluation sheets were collected and numbered before the two evaluators scored them.

Cases selections and preparation

All the cases were screened earlier by the diagnostic department in accordance with certain requirements and instructions of the advanced operative dentistry course coordinator. All selected teeth should be ready before class II composite restoration and periodontal treatment.

Rubric scoring and data processing

The analytic rubric for the assessment of clinical class II composite restoration (pre-operative procedures [diagnosis steps], cavity preparation, composite restoration, time management, and chairside oral exam) was used by two evaluators with almost equal teaching experience. Prior to the tests, the examiners, who were already familiar with the criteria, underwent additional calibration in using the analytic rubric for scoring. The evaluators assessed the students’ rubric parameters for a composite restoration at each step during the clinical phase, including the time spent on the completion of the examination and scoring process. The assessors were asked to carry out the assessments and scoring separately and independently.

The analytic rubric used in the study was based on a 4-point scale for the evaluation of three major rubric parameters of a class II composite restoration on posterior teeth, each scored according to the critical errors observed. For pre-operative procedures, the critical errors were improper infection control, inadequate pain control, and rubber dam isolation that was not stable and optimal. For cavity preparation steps, the critical errors were an outline that severely weakens cusps or marginal ridges or a lack of treatment of fissures, proximal and/or gingival extensions that are in contact or obviously overextended, damage to the adjacent tooth requiring restoration, grossly improper cavo-surface angles, incomplete caries removal, a pulpal floor and/or axial wall that is critically shallow or critically deep, significant over-convergence or divergence of walls, and improper management of mechanical pulp exposure. For restoration procedures (class II composite restoration), the critical errors were improper matricing and wedging, incorrect placement of lining and base, excess or deficient restorative material on surfaces and/or margins, improper shade matching, poor occlusal/axial/proximal anatomy, and evident gross mutilation of hard or soft tissue.

The grading was calculated as “none of the critical errors observed,” “any one of the critical errors observed,” “any two of the critical errors observed,” and “more than two of any critical errors observed” and scored as 4, 3, 2, and 1, respectively. The sum was calculated out of the total. The totals for diagnosis steps, cavity preparation, and composite restoration were 10, 20, and 20 points, respectively (Table 1). The time needed for managing all clinical steps of the class II composite restoration was also recorded. It was divided into < 90, 90–120, 121–150, and ≥ 151 min, with scores of 4, 3, 2, and 1, respectively. The total score for time management was 4 points (Table 1). The details of the rubric parameters for pre-operative procedures, cavity preparation steps, and restoration procedures are presented in Table 2.
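The two scoring rules described above can be sketched in Python as follows. This is an illustration only: the function names are hypothetical, and the time bands are assumed to apply to whole minutes, since the text does not say how fractional minutes were handled.

```python
def critical_error_score(n_errors: int) -> int:
    """Map the number of critical errors observed to the 4-point scale.

    0 errors -> 4, 1 error -> 3, 2 errors -> 2, more than 2 errors -> 1.
    """
    if n_errors < 0:
        raise ValueError("number of critical errors cannot be negative")
    return max(1, 4 - n_errors)


def time_management_score(minutes: int) -> int:
    """Map the time taken (whole minutes, an assumption) to the 4-point scale."""
    if minutes < 0:
        raise ValueError("time cannot be negative")
    if minutes < 90:
        return 4
    if minutes <= 120:
        return 3
    if minutes <= 150:
        return 2
    return 1  # 151 minutes or more


print(critical_error_score(1))     # 3
print(time_management_score(125))  # 2
```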

The final point for scoring was the chairside oral exam parameter after completion of the class II composite restoration. It involved clarity and fluency, evidence and structure, and comprehension and overall understanding. The chairside oral exam parameters were calculated as excellent, good, average, poor, and unsatisfactory and scored as 5, 4, 3, 2, and 1, respectively. The sum was calculated out of the total (15 points) for each student, as shown in Table 3 . The overall sum of each rubric parameter was 69 points for class II composite restoration, as shown in Table 1 (54 points) and Table 3 (15 points).
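As a check on the arithmetic, the sketch below adds up the section maxima stated in the text (10 + 20 + 20 + 4 = 54, plus 15 for the chairside oral exam, giving 69) and totals one student's scores. The dictionary keys, function names, and the example student are hypothetical; only the maxima and the five oral exam levels come from the description above.

```python
# Section maxima as stated in the text.
MAX_POINTS = {
    "pre_operative": 10,
    "cavity_preparation": 20,
    "restoration": 20,
    "time_management": 4,
    "chairside_oral_exam": 15,
}

# Five-level scale used for each of the three oral exam items.
ORAL_LEVELS = {"excellent": 5, "good": 4, "average": 3, "poor": 2, "unsatisfactory": 1}


def oral_exam_score(ratings: list[str]) -> int:
    """Sum the three oral exam items (clarity, evidence, comprehension)."""
    if len(ratings) != 3:
        raise ValueError("the oral exam has exactly three rated items")
    return sum(ORAL_LEVELS[r] for r in ratings)


def total_score(sections: dict[str, int]) -> int:
    """Validate each section score against its maximum and return the sum."""
    if set(sections) != set(MAX_POINTS):
        raise ValueError("scores must cover exactly the five rubric sections")
    for name, score in sections.items():
        if not 0 <= score <= MAX_POINTS[name]:
            raise ValueError(f"{name}: score must be between 0 and {MAX_POINTS[name]}")
    return sum(sections.values())


# Hypothetical student, for illustration only.
student = {
    "pre_operative": 9,
    "cavity_preparation": 17,
    "restoration": 18,
    "time_management": 3,
    "chairside_oral_exam": oral_exam_score(["excellent", "good", "good"]),
}
print(sum(MAX_POINTS.values()))  # 69
print(total_score(student))      # 60
```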

The analytic rubric used resembled a grid in which the parameters were listed in the leftmost column and levels of grading (performance) were listed across the row using numbers along with the descriptive tags. Each of the three parameters and their questions were graded individually, and the rightmost column was filled with the particular grade against each parameter. The sum of all grades for every parameter was used as the total score of the individual student for each parameter and its question alone (Table 1). A printed logbook sheet was used for each student’s clinical case assessment, and the hard copies were numbered according to the student university identification card and the CGPA of the participating male and female students.

Cumulative grade point average for participants

A duplicate of the participants’ last total CGPA grades was collected from the student academic affairs at the college. Table 4 shows the criteria reference rule used by College of Dentistry, Jazan University [ 34 ]. Data were categorized and analyzed concerning males and females’ performance.

Reliability and intraclass correlation coefficient test

The authors calculated the reliability of internal consistency utilizing Cronbach’s α coefficient. The outcomes showed that the content reliability of the overall scale was internally consistent. The value of the reliability coefficient of the data collection tool (analytic rubric) was 87–88%, indicating that the items (criteria, level of achievements, and rating) used in the rubric were internally consistent. All measurements were subjected to intra-examiner reliability testing with intraclass correlation coefficient (ICC) values [ 35 ].
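For readers unfamiliar with the statistic, Cronbach’s α for k items is commonly computed as k/(k − 1) × (1 − Σ item variances / variance of the total score). The sketch below implements that standard formula with sample variances. It is not the authors’ SPSS procedure, and the small data set is invented purely to show the calculation.

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha, where scores[i][j] is student i's score on item j."""
    n_items = len(scores[0])
    if n_items < 2 or len(scores) < 2:
        raise ValueError("need at least two items and two students")
    # Sample variance of each item across students.
    item_vars = [variance([row[j] for row in scores]) for j in range(n_items)]
    # Sample variance of each student's total score.
    total_var = variance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores have zero variance; alpha is undefined")
    return n_items / (n_items - 1) * (1 - sum(item_vars) / total_var)


# Invented scores: three students, two rubric items.
demo = [[2, 3], [4, 5], [3, 3]]
print(round(cronbach_alpha(demo), 3))  # 0.923
```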

Data analysis

Statistical package for the social sciences (SPSS) version 26.0 (SPSS, Inc., Chicago, IL, USA) was used for data entry and statistical analysis of the obtained data. Descriptive statistics of both evaluators and the different analytic rubric parameters of class II composite restoration for posterior teeth were calculated as the mean and standard deviation (SD). An independent t-test was used to calculate the differences between the student groups in relation to the investigators, and the rubric parameters were used to compare scores for each parameter and all parameters together.
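The independent t-test mentioned above can be illustrated with the pooled-variance form of the statistic. This sketch assumes equal variances in the two groups (the text does not state which variant was run in SPSS), uses invented scores, and returns only the t statistic and degrees of freedom, since the Python standard library has no t-distribution for the p-value.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(group_a: list[float], group_b: list[float]) -> tuple[float, int]:
    """Return (t statistic, degrees of freedom) for two independent groups."""
    na, nb = len(group_a), len(group_b)
    if na < 2 or nb < 2:
        raise ValueError("each group needs at least two observations")
    df = na + nb - 2
    # Pooled sample variance, weighted by each group's degrees of freedom.
    pooled_var = ((na - 1) * variance(group_a) + (nb - 1) * variance(group_b)) / df
    if pooled_var == 0:
        raise ValueError("both groups have zero variance; t is undefined")
    standard_error = sqrt(pooled_var * (1 / na + 1 / nb))
    return (mean(group_a) - mean(group_b)) / standard_error, df


# Invented rubric totals for two small groups.
t_stat, df = pooled_t([58, 61, 60, 57], [62, 60, 63, 61])
print(round(t_stat, 3), df)
```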

The Kappa test was used to assess inter-rater reliability. A reliability test was used to evaluate the ICC of the parameters. Pearson’s correlation coefficient test was used to measure the association among CGPA, evaluators, and participant gender for analytic rubric parameters of class II composite restoration. A p-value < 0.05 was considered a cutoff point for statistical significance.
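As an illustration of the inter-rater statistic, the sketch below computes unweighted Cohen’s kappa, κ = (p_o − p_e) / (1 − p_e), for two raters. Whether the study used the unweighted or a weighted form is not stated, so the unweighted form is an assumption here, and the ratings are invented.

```python
from collections import Counter


def cohen_kappa(rater_a: list[int], rater_b: list[int]) -> float:
    """Unweighted Cohen's kappa for two raters scoring the same cases."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of cases")
    n = len(rater_a)
    # Observed agreement: share of cases where both raters gave the same score.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal score frequencies.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / n**2
    if expected == 1:
        raise ValueError("both raters used a single identical score; kappa is undefined")
    return (observed - expected) / (1 - expected)


# Invented 4-point scores from two evaluators on four cases.
print(cohen_kappa([4, 4, 3, 3], [4, 3, 3, 3]))  # 0.5
```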

The analytic rubric parameters for the class II composite restorations in the clinical operative dentistry course by 5th-year dental students for the 2022/2023 and 2023/2024 academic years were analyzed in this study, and the results are presented in Table 5 . Two evaluators evaluated the analytic rubric parameters for 34 students (17 males and 17 females) for 2022/2023 and 75 students (31 males and 44 females) for 2023/2024.

Descriptive statistics on students’ scores recorded by the two evaluators are presented in Table 3. The two evaluators scored the pre-operative procedures, cavity preparation, restoration steps, time management, and chairside oral exam steps for males and females in the two academic years, out of a total of 69 (Table 5). Almost no differences in the rubric parameter scores between genders were recorded by the evaluators in either academic year or for any clinical step. For the first evaluator, the rubric scores for males and females were 59.26 ± 6.05 and 61.23 ± 6.15 in the 2022/2023 academic year and 59.41 ± 4.56 and 60.15 ± 6.03 in the 2023/2024 academic year, respectively. For the second evaluator, the rubric scores for males and females were 59.58 ± 5.65 and 61.34 ± 5.27 in the 2022/2023 academic year and 59.56 ± 4.50 and 62.21 ± 6.10 in the 2023/2024 academic year, respectively (Table 5).

The overall rubric score was slightly higher in females than in males: the scores for males and females were 59.42 ± 5.71 and 61.28 ± 5.35 in the 2022/2023 academic year and 59.49 ± 4.34 and 61.18 ± 5.76 in the 2023/2024 academic year, respectively (Table 6). Independent t-tests on the overall average score showed no significant differences between genders in either academic year, with p = 0.158 and p = 0.342 for the 2022/2023 and 2023/2024 academic years, respectively (Table 6).

Table 7 shows the Kappa test results for the analytic rubric parameters of a class II composite restoration, comparing the assessments of two evaluators (Evaluator 1 and Evaluator 2) across two academic years (2022/2023 and 2023/2024). For pre-operative procedures, values were 0.617 in 2022/2023 and 0.608 in 2023/2024. For cavity preparation, values were 0.620 in 2022/2023 and 0.561 in 2023/2024. For restoration steps, values were 0.649 in 2022/2023 and 0.616 in 2023/2024.

Table 8 shows the inter-item correlation coefficients between different rubric parameters for a class II composite restoration for the academic years 2022/2023 and 2023/2024. In 2022/2023, the highest correlation was between pre-operative procedures and cavity preparation (0.358), the correlation between pre-operative procedures and restoration steps was 0.236, the correlation between time management and the chairside oral exam was 0.373, and all other correlations were below 0.200. In 2023/2024, the highest correlation was again between pre-operative procedures and cavity preparation (0.417). The correlation between time management and the chairside oral exam was 0.414, the correlation between restoration steps and time management was 0.206, and the correlation between pre-operative procedures and restoration steps was − 0.125, indicating a weak negative relationship. All other correlations were below 0.200.

Table 9 shows the Pearson correlation coefficients for CGPA, total scores from Evaluator 1, total scores from Evaluator 2, and overall average scores for the academic years 2022/2023 and 2023/2024. In 2022/2023, CGPA was significantly correlated with the total score from Evaluator 2 (0.368, p < 0.01) and the overall average score (0.302, p < 0.01). The total scores from Evaluator 1 and Evaluator 2 were highly correlated (0.824, p < 0.01), as well as their correlations with the overall average score (0.960 and 0.949, respectively, both p < 0.01). In 2023/2024, the correlations between CGPA and other variables were not statistically significant. The correlations between the total scores from Evaluator 1 and Evaluator 2 (0.808, p < 0.01) and their correlations with the overall average score (0.949 and 0.952, respectively, both p < 0.01) remained high and significant.
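The correlation coefficients reported in Tables 8 and 9 are Pearson’s r. A minimal sketch of the standard formula is given below. The paired values are invented and stand in for, say, CGPA and total rubric score, and the significance test for r is not included.

```python
from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    # Sums of cross-products and squared deviations from the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("a constant sequence has no defined correlation")
    return sxy / sqrt(sxx * syy)


# Invented paired values for four students.
print(round(pearson_r([1, 2, 3, 4], [1, 3, 2, 4]), 3))  # 0.8
```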

Dental education is a complicated process that combines theoretical instruction and hands-on preclinical and clinical training to develop competent dental professionals. Dental students rely on the guidance and feedback provided by their instructors and a grading system to track their progress and development. Self-assessment of one’s performance is a direct and straightforward approach for students to evaluate their growing competency throughout their learning process [ 36 ]. Existing research has suggested that students who receive instruction using rubrics may learn more effectively than those who do not [ 31 , 37 ]. This phenomenon is attributed to the ability of rubrics to shift students’ focus and help them identify the critical key steps in procedures, ultimately leading to improved performance once they enter the clinical environment [ 37 ]. Accordingly, the present study aimed to investigate the use of an analytical rubric-based assessment scheme for evaluating undergraduate dental students’ performance of class II composite restoration in different academic years.

Rubrics are scoring tools that define the criteria for evaluating students’ work and the corresponding performance levels [ 38 ]. The key distinction of rubrics compared with other grading systems is that they provide detailed performance definitions for each criterion across different levels of success, allowing for comprehensive feedback to students [ 39 ]. Two main categories of rubrics may be distinguished: holistic and analytical. In holistic rubrics, a single score is given to the student’s performance, with descriptions provided for all performance levels [ 40 , 41 ]. The focus is on the overall performance, and minor mistakes can be overlooked. By contrast, analytic rubrics offer information about the student’s achievement levels across various dimensions or criteria. This type of rubric allows for a detailed profile of the student’s strengths and weaknesses in a particular area [ 12 ]. Analytic rubrics are more commonly used than holistic rubrics, as they are considered a more reliable and functional scoring tool in which the evaluator assigns a score to each of the dimensions being assessed in the task [ 41 ]. In the present study, we used analytic rubrics.

The interaction between treatment and gender in terms of rubric use is not entirely clear. Some studies have found that the use of rubrics can have different effects on males and females, with females in the treatment group reporting higher self-efficacy than males [ 42 , 43 ]. In the present study, the overall average scores were slightly higher for females than for males, but the differences were not statistically significant (Table 6 ). Therefore, the first part of the null hypothesis was not rejected. This result is in accordance with a study conducted by Liang et al. among Japanese dental students, which found no significant difference in grades between male and female students [ 28 ]. However, a study conducted among Saudi students showed that female students exhibited significantly higher rubric-assessed presentation performance than their male counterparts, leading to statistically significant differences between males and females in their grading scores [ 44 ].

Gender is a significant individual factor that has been the subject of research, yielding diverse outcomes. While the study conducted by Andrade et al. [ 29 ] identified meaningful differences associated with gender, the subsequent research by Andrade et al. did not observe such differences [ 30 ]. This inconsistency may be attributed to various influencing factors, including motivation and confidence.

Rubrics have been reported to increase the consistency of judgment when assessing performance and to enhance the consistency of scoring across students, assignments, and raters [ 9 , 12 ]. Rubrics provide a valid means of evaluating complex competencies that cannot be assessed through conventional written tests, without sacrificing reliability [ 45 ]. The results of the present study demonstrated a generally moderate to substantial level of agreement between the two evaluators on the analytic rubric parameters for class II composite across the two time periods, similar to other studies [ 12 , 26 , 46 ]. The low Kappa values for cavity preparation and restoration steps in 2023/2024 indicated a potential decrease in agreement between the evaluators compared with the previous year. Factors such as the clarity and specificity of the rubric criteria and the training and calibration of raters can influence the level of inter-rater reliability achieved [ 10 ]. Furthermore, using a detailed rating scale, such as the five-level scale used in this study, can make it difficult for raters to apply it consistently [ 40 ].
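
As an illustration of how a kappa value of this kind is obtained, the sketch below computes unweighted Cohen's kappa for two raters scoring the same students on a five-level scale. The text does not state which kappa variant was used, so the unweighted form is an assumption, and the ratings are invented for the example.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters scoring the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same level by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions per level.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    expected = sum(count_a[level] * count_b[level] for level in count_a) / n ** 2
    if expected == 1.0:
        return 1.0  # both raters used a single identical level throughout
    return (observed - expected) / (1 - expected)


# Invented ratings (levels 1-5) for ten students on one rubric parameter.
evaluator_1 = [5, 4, 4, 3, 5, 2, 4, 3, 5, 4]
evaluator_2 = [5, 4, 3, 3, 5, 2, 4, 4, 5, 4]

kappa = cohen_kappa(evaluator_1, evaluator_2)
print(round(kappa, 2))
```

For these invented ratings, the evaluators agree on 8 of 10 students and chance agreement is 0.30, giving a kappa of about 0.71, which falls in the "substantial" band (0.61 to 0.80) of the commonly used Landis and Koch interpretation. Because unweighted kappa treats a one-level disagreement the same as a four-level disagreement, a weighted variant is often preferred for ordinal scales with many levels.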

Rubric performance levels are typically labeled with descriptive adjectives that determine the degree of performance, providing consistent, objective assessment and enhanced feedback to students on their expected performance [ 14 ]. The use of five performance levels in the present study allows for a detailed evaluation, going beyond a simple pass/fail or good/bad dichotomy and clearly communicating the expected standards to students. This transparency helps students understand what is required to achieve different levels of success, and it allows evaluators to provide specific, actionable feedback on areas for improvement [ 13 , 43 ]. Students can effectively self-assess and identify learning gaps when they understand the rubric’s performance levels.

While the rubric evaluates the performance quality in each clinical step, it also assigns a numerical score for each level, enabling students to gauge their performance and strive for higher grades. This system offers valuable insights into the students who excelled in the procedures and those whose performance was merely satisfactory [ 33 ]. Research has shown that using rubrics in dental education can lead to higher inter-rater reliability levels than subjective evaluation methods [ 12 ]. Thus, the unique features of rubrics make them practical tools for enhancing the accuracy of performance assessments and for facilitating the formative assessment process, which entails using assessment data to inform students about their performance and to assist in their progress [ 47 ]. However, rubrics are time-consuming, which could be stressful for both teachers and students and might influence the evaluator’s decision-making and the students’ performance, thus hindering task performance [ 47 , 48 ].

The longevity of class II composite restorations is heavily influenced by the knowledge, skills, and performance of the dental professional responsible for diagnosis, decision-making, quality of isolation, tooth preparation, and restoration [ 49 ]. Inter-item correlation analysis revealed that the pre-operative procedures, cavity preparation, and time management maintained strong to moderate positive correlations with several other rubric parameters across both years examined (Table 8 ). This result suggested that students’ proficiency in these areas was highly predictive of their overall performance in this procedure. However, restoration steps exhibited a shift, moving from moderate positive correlations with pre-operative procedures and cavity preparation in the earlier year to a negative relationship with pre-operative procedures and a weaker correlation with cavity preparation in the later year, potentially due to differences in the level of manual dexterity and psychomotor skills among the student groups in different years [ 50 ].

Research has suggested that dental students who achieve high CGPA may not necessarily perform as well in their practical procedures, and vice versa [ 51 ]. The present study was aligned with the findings of previous research in which CGPA showed a moderate, positive relationship with the total evaluator score ( r = 0.368, p = 0.001) and overall average ( r = 0.302, p = 0.009) in the 2022/2023 academic year. This result indicated that a high CGPA was associated with high overall rubric scores. Therefore, the null hypothesis was partially rejected in 2022/2023 but was not rejected in 2023/2024, as the correlations were not statistically significant. These results contradicted a previous study by Bindayel et al., who examined the reliability of using rubrics to assess undergraduate students’ orthodontic presentations. That research found no significant correlation between students’ final course grades and the rubric-based grades assigned by instructors for the presentations [ 23 ].
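
To show what a correlation coefficient of this size represents, the sketch below computes Pearson's r from paired values and then squares the reported r = 0.368 to obtain the share of variance the two measures have in common. The use of Pearson's r is an assumption (the text reports only r and p), and the CGPA and rubric values are invented for the example.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length with at least 2 values")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    # Sum of cross-products and sums of squares around the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Invented CGPA values and total rubric scores for eight students.
cgpa = [3.1, 3.4, 3.6, 3.8, 4.0, 4.2, 4.5, 4.7]
rubric_total = [15, 18, 14, 17, 19, 16, 18, 20]

r_example = pearson_r(cgpa, rubric_total)
print(round(r_example, 3))

# Shared variance implied by the coefficient reported for 2022/2023.
shared_variance = 0.368 ** 2
print(round(shared_variance, 3))  # 0.135
```

Squaring r = 0.368 gives roughly 0.135, so CGPA and the total evaluator score share about 13.5% of their variance under a linear model, which is consistent with describing the relationship as moderate rather than strong.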

Gudipaneni et al. documented a substantial sex variance in professional competence among dental students, with men being 91% less likely to display professionalism than women. Furthermore, the investigators determined that academic performance is a strong predictor of health promotion skills, as students with a high CGPA in their theoretical coursework are 3.4 times more likely to exhibit competence in this domain relative to those with an average CGPA [ 52 ]. Also, Agou et al. stated that dental undergraduates with higher CGPAs in their last year of study are more likely to achieve superior grades on the comprehensive final case assessment than their counterparts. Additionally, the study revealed a significant advantage for female dental students, who were more than twice as likely to earn higher scores on the culminating clinical evaluation compared to their male counterparts [ 53 ].

Additional research is needed to further elucidate the relationship among gender, CGPA, and the use of rubrics as assessment tools. Future studies should examine the impact of using rubrics in combination with self- and peer-assessment activities on the quality of the class II composite restorations that students perform. Moreover, comparative analyses are recommended to evaluate the effectiveness of analytic rubric parameters versus other traditional evaluation systems, such as checklists [ 54 ], global rating scales [ 5 ], and structured rating scales [ 4 ], as well as the effect of enhanced personal protective equipment on student operators’ experience and restorative procedures [ 55 ].

Limitations and future scope of the study

The limitations of the study should be considered when interpreting the findings. First, the sample size was relatively small, which could restrict the generalizability of the results. Second, the rubric was only tested in a single academic setting, so its reliability across different educational environments remains to be evaluated. We highly recommend a study involving different dental institutions that use rubrics to improve students’ overall academic achievement.

Almost all students (males and females) scored well in most of the parameters, and near-excellent performance was observed in some parameters, with females slightly outperforming males. The study found no significant differences in performance between male and female dental students on the various class II composite restoration rubric parameters, although females scored slightly higher overall than males. Inter-rater agreement ranged from moderate to substantial. Interestingly, the analysis revealed a positive correlation between students’ CGPA and their scores from Evaluator 2, as well as the overall average scores. Thus, academic achievement was associated with strong performance on the evaluated restoration tasks.

Data availability

All data supporting the findings of this study are available from the corresponding author upon reasonable request.

McGleenon EL, Morison S. Preparing dental students for independent practice: a scoping review of methods and trends in undergraduate clinical skills teaching in the UK and Ireland. Br Dent J. 2021;230(1):39–45. https://doi.org/10.1038/s41415-020-2505-7 .

Hallak JC, Ferreira FS, de Oliveira CA, Pazos JM, Neves TDC, Garcia PPNS. Transition between preclinical and clinical training: perception of dental students regarding the adoption of ergonomic principles. PLoS ONE. 2023;18(3):e0282718. https://doi.org/10.1371/journal.pone.0282718 .

Kramer GA, Albino JE, Andrieu SC, Hendricson WD, Henson L, Horn BD, Neumann LM, Young SK. Dental student assessment toolbox. J Dent Educ. 2009;73(1):12–35. PMID: 19126764.

Uoshima K, Akiba N, Nagasawa M. Technical skill training and assessment in dental education. Jpn Dent Sci Rev. 2021;57:160–3. https://doi.org/10.1016/j.jdsr.2021.08.004 .

Henrico K, Makkink AW. Use of global rating scales and checklists in clinical simulation-based assessments: a protocol for a scoping review. BMJ Open. 2023;13:e065981. https://doi.org/10.1136/bmjopen-2022-065981 .

Bremer A, Andersson Hagiwara M, Tavares W, Paakkonen H, Nyström P, Andersson H. Translation and further validation of a global rating scale for the assessment of clinical competence in prehospital emergency care. Nurse Educ Pract. 2020;47. https://doi.org/10.1016/j.nepr.2020.102841 .

Seo S, Thomas A, Uspal NG. A global rating scale and checklist instrument for pediatric laceration repair. MedEdPORTAL. 2019 Feb 27;15:10806. https://doi.org/10.15766/mep_2374-8265.10806 .

Ilgen JS, Ma IWY, Hatala R, Cook DA. A systematic review of validity evidence for checklists versus global rating scales in simulation-based assessment. Med Educ. 2015;49:161–73. https://doi.org/10.1111/medu.12621 .

O’Donnell JA, Oakley M, Haney S, O’Neill PN, Taylor D. Rubrics 101: a primer for rubric development in dental education. J Dent Educ. 2011;75(9):1163–75. https://doi.org/10.1002/j.0022.0337.2011.75.9.tb05160.x .

Jonsson A, Svingby G. The use of scoring rubrics: reliability, validity and educational consequences. Educ Res Rev. 2007;2(2):130–44. https://doi.org/10.1016/j.edurev.2007.05.002 .

Licari FW, Knight GW, Guenzel PJ. Designing evaluation forms to facilitate student learning. J Dent Educ. 2008;72(1):48–58.

Escribano N, Belliard V, Baracco B, Da Silva D, Ceballos L, Fuentes MV. Rubric vs. numeric rating scale: agreement among evaluators on endodontic treatments performed by dental students. BMC Med Educ. 2023;23(1):197. https://doi.org/10.1186/s12909-023-04187-3 .

Abiad RS. Rubrics for practical endodontics. J Orthod Endod. 2017;3:1. https://doi.org/10.21767/2469-2980.100039 .

Olson J, Krysiak R. Rubrics as Tools for Effective Assessment of Student Learning and Program Quality. In: 2021. pp. 173–200. ISBN 978-1-7998-7655-7. https://doi.org/10.4018/978-1-7998-7653-3.ch010